- The Environmental Impact of IT Infrastructure

- DevOps and Sustainability

- Cloud Computing and Sustainability

- Effective Infrastructure Management and Sustainability

- Green Code and DevOps Go Hand-in-Hand

- How Businesses Are Using DevOps, Cloud, and Green Code to Thrive

- Partner with Gart for IT Cost Optimization and Sustainable Business

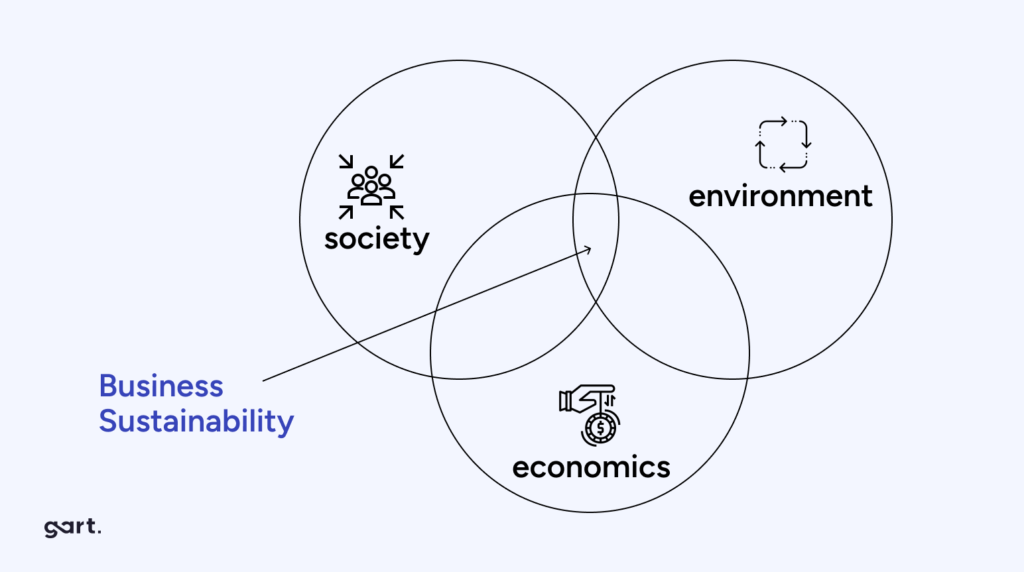

As climate change, resource depletion, and environmental issues loom large, businesses are turning to technology as a powerful ally in achieving their sustainability goals. This isn’t just about saving the planet (although that’s pretty important); it’s also about creating a more efficient and resilient future for all.

Data is the new oil, and when it comes to sustainability, it’s a game-changer. Technology empowers businesses to collect and analyze vast amounts of data, allowing them to make informed decisions about their environmental impact. By automating processes, streamlining operations, and enabling data-driven decision-making, businesses can minimize waste, reduce energy consumption, and optimize resource utilization.

Digital technologies, such as cloud computing, remote collaboration tools, and virtual platforms, have the potential to reduce the need for physical infrastructure and travel, thereby minimizing the associated environmental impacts.

One of the primary challenges is striking a balance between sustainability goals and profitability. Many businesses struggle to reconcile the perceived trade-off between environmental considerations and short-term financial gains. Implementing sustainable practices often requires upfront investments in new technologies, infrastructure, or processes, which can be costly and may not yield immediate returns. Convincing stakeholders and shareholders of the long-term benefits and value of sustainability can be a complex task.

The Environmental Impact of IT Infrastructure

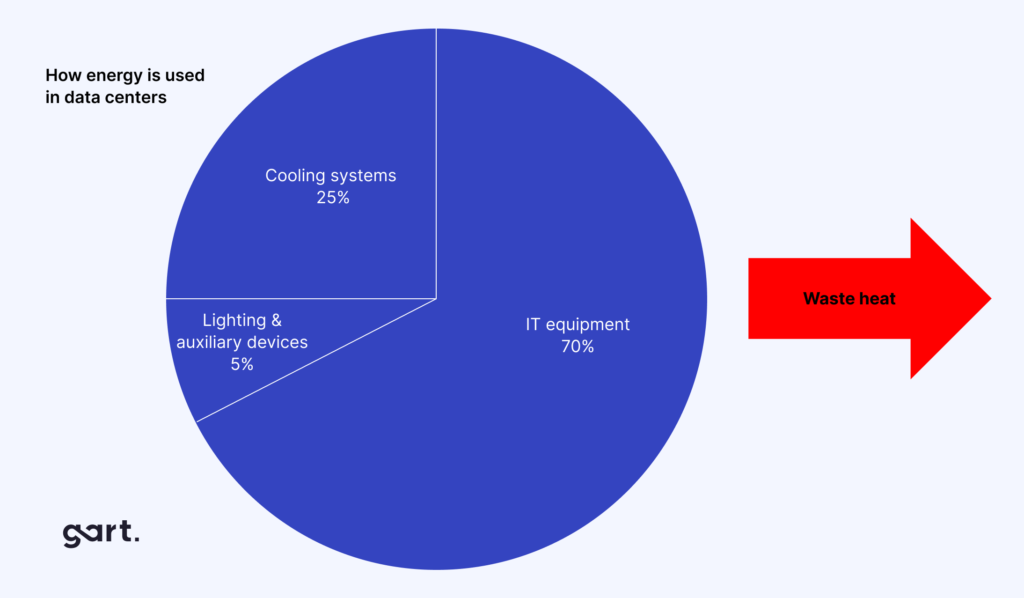

One of the primary concerns regarding IT infrastructure is energy consumption. Data centers, which house servers, storage systems, and networking equipment, are energy-intensive facilities. They require substantial amounts of electricity to power and cool the hardware, contributing to greenhouse gas emissions and straining energy grids. According to estimates, data centers account for approximately 1% of global electricity consumption, and this figure is expected to rise as data volumes and computing demands continue to grow.

Furthermore, the manufacturing process of IT equipment, such as servers, computers, and other hardware components, involves the extraction and processing of raw materials, which can have detrimental effects on the environment. The mining of rare earth metals and other minerals used in electronic components can lead to habitat destruction, water pollution, and the depletion of natural resources.

E-waste, or electronic waste, is another pressing issue related to IT infrastructure. As technological devices become obsolete or reach the end of their lifecycle, they often end up in landfills or informal recycling facilities, posing risks to human health and the environment. E-waste can contain hazardous substances like lead, mercury, and cadmium, which can leach into soil and water sources, causing pollution and potential harm to ecosystems.

By addressing the environmental impact of IT infrastructure, businesses can not only reduce their carbon footprint and resource consumption but also contribute to a more sustainable future. Striking a balance between technological innovation and environmental stewardship is crucial for achieving long-term sustainability goals.

DevOps and Sustainability

DevOps practices play a pivotal role in optimizing resources and reducing waste, making them a powerful ally in the pursuit of sustainability. By seamlessly integrating development and operations processes, DevOps enables organizations to achieve greater efficiency, agility, and environmental responsibility.

At the core of DevOps is the principle of automation and continuous improvement. By automating repetitive tasks and streamlining processes, DevOps eliminates manual effort, reduces human error, and minimizes resource wastage. This efficiency translates into lower energy consumption, reduced hardware requirements, and a smaller carbon footprint.

CI/CD for Improved Eco-Efficiency

Continuous Integration and Continuous Delivery (CI/CD) are essential DevOps practices that contribute to sustainability. CI/CD enables organizations to deliver software updates and improvements rapidly and frequently, ensuring that applications run optimally and efficiently. Because each release is small and incremental, this approach minimizes the need for large, resource-intensive deployments and reduces the overall environmental impact of software development and operations.

Moreover, CI/CD facilitates the early detection and resolution of issues, preventing potential inefficiencies and resource wastage. By integrating automated testing and quality assurance processes, organizations can identify and address performance bottlenecks, security vulnerabilities, and other issues that could lead to increased energy consumption or resource utilization.

Monitoring and Analytics for Identifying and Eliminating Inefficiencies

DevOps emphasizes the importance of monitoring and analytics as a means to gain insights into system performance, resource utilization, and potential areas for improvement. By leveraging advanced monitoring tools and techniques, organizations can gather real-time data on energy consumption, hardware utilization, and application performance.

This data can then be analyzed to identify inefficiencies, such as underutilized resources, redundant processes, or areas where optimization is required. Armed with these insights, organizations can take proactive measures to streamline operations, adjust resource allocation, and implement energy-saving strategies, ultimately reducing their environmental footprint.
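As a rough illustration of the analysis step described above, the sketch below flags servers whose average CPU utilization falls under a threshold. The server names, the sample values, and the 10% cut-off are all assumptions made up for this example; in practice the samples would come from whatever monitoring tool is in use.

```python
from statistics import mean

# Hypothetical CPU utilization samples (percent), e.g. exported from a monitoring tool.
cpu_samples = {
    "web-1": [62.0, 71.5, 58.3, 66.1],
    "batch-1": [4.2, 3.8, 5.1, 2.9],
    "db-1": [35.0, 41.2, 38.7, 36.4],
}


def find_underutilized(samples, threshold_pct=10.0):
    """Return the names whose average CPU utilization is below the threshold, sorted by name.

    Servers with no samples are skipped rather than reported.
    """
    return sorted(
        name
        for name, values in samples.items()
        if values and mean(values) < threshold_pct
    )


if __name__ == "__main__":
    print(find_underutilized(cpu_samples))
```

With the sample data, only `batch-1` falls below the default threshold, which makes it a candidate for downsizing or shutdown. A real check would likely look at memory, network, and a longer time window before drawing that conclusion.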

For a deeper dive into how monitoring and analytics can drive efficiency and sustainability, explore this case study of a software development company that optimized its workload orchestration using continuous monitoring.

Our case study: Implementation of Nomad Cluster for Massively Parallel Computing

Cloud Computing and Sustainability

Cloud computing has emerged as a transformative technology that not only enhances efficiency and agility but also holds significant potential for promoting sustainability and reducing environmental impact. By leveraging the power of cloud services, organizations can achieve remarkable energy and resource savings, while simultaneously minimizing their carbon footprint.

Energy and Resource Savings through Cloud Services

One of the primary advantages of cloud computing in terms of sustainability is the efficient utilization of shared resources. Cloud service providers operate large-scale data centers that are designed for optimal resource allocation and energy efficiency. By consolidating workloads and leveraging economies of scale, cloud providers can maximize resource utilization, reducing energy consumption and minimizing waste.

Additionally, cloud providers invest heavily in implementing cutting-edge technologies and best practices for energy efficiency, such as advanced cooling systems, renewable energy sources, and efficient hardware. These efforts result in significant energy savings, translating into a lower carbon footprint for organizations that leverage cloud services.

Flexible Cloud Models for Cost Optimization and Sustainable Operations

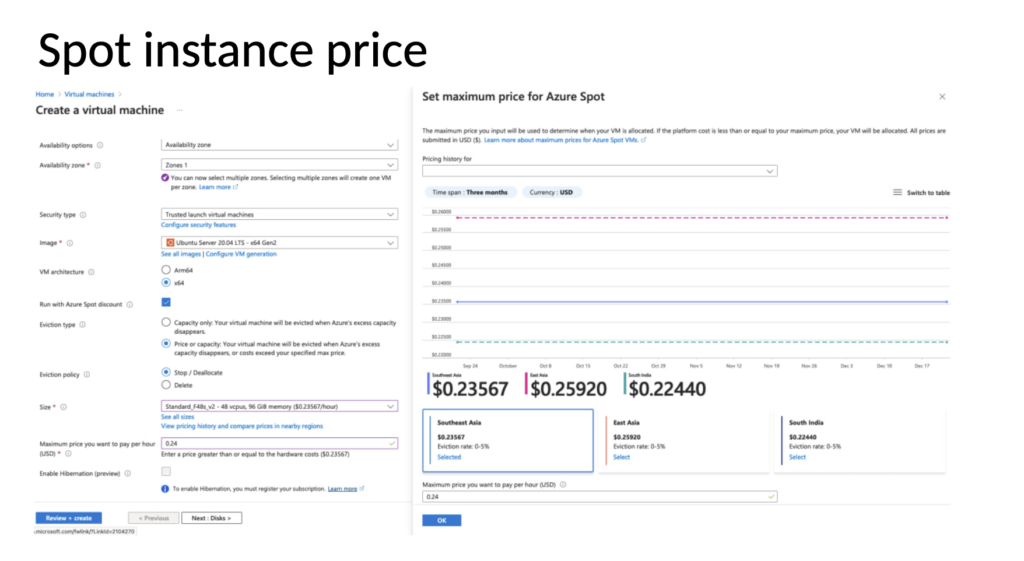

Cloud computing offers flexible deployment models, including public, private, and hybrid clouds, allowing organizations to tailor their cloud strategies to meet their specific needs and optimize costs. By embracing the pay-as-you-go model of public clouds or implementing private clouds for sensitive workloads, businesses can dynamically scale their resource consumption, avoiding over-provisioning and minimizing unnecessary energy expenditure.
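To make "dynamically scale resource consumption" more concrete, here is a minimal sketch of one common proportional scaling rule (the same shape of formula is used, for example, by the Kubernetes Horizontal Pod Autoscaler). The function name, the limits, and the utilization figures are assumptions for illustration, not a description of any specific cloud provider's autoscaler.

```python
import math


def desired_instances(current, current_util, target_util, min_n=1, max_n=10):
    """Proportional scaling: size the fleet so utilization moves toward the target.

    desired = ceil(current * current_util / target_util), clamped to [min_n, max_n].
    """
    if current < 1:
        raise ValueError("current must be at least 1")
    if target_util <= 0:
        raise ValueError("target_util must be positive")
    raw = math.ceil(current * current_util / target_util)
    return max(min_n, min(max_n, raw))


if __name__ == "__main__":
    # Hypothetical fleet of 4 instances with a 60% CPU target.
    print(desired_instances(4, 90, 60))  # busy period: scale out
    print(desired_instances(4, 30, 60))  # quiet period: scale in
```

Scaling in during quiet periods is where the savings come from: capacity that is not running is neither billed nor drawing power.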

Cloud providers offer a diverse range of compute and storage resources with varying payment options and tiers, catering to different use cases and requirements. For instance, Amazon Web Services (AWS) provides Elastic Compute Cloud (EC2) instances with multiple purchasing options, including Dedicated, On-Demand, Spot, and Reserved instances. Choosing the most suitable option for a specific workload can lead to significant cost savings.

Dedicated instances, while the most expensive option, are ideal for handling sensitive workloads where security and compliance are of paramount importance. These instances run on hardware dedicated solely to a single customer, ensuring heightened isolation and control.

On-Demand instances, on the other hand, are billed by the hour or by the second, depending on the instance, with no long-term commitment. They are well-suited for applications with short-term, irregular workloads that cannot be interrupted, and are particularly useful during testing, development, and prototyping, when flexibility matters most.

For long-running, predictable workloads, Reserved instances offer substantial discounts of up to 72% compared to on-demand pricing in exchange for a one- or three-year commitment. Reserved instances scoped to a specific Availability Zone also reserve capacity, giving businesses confidence that they can launch the required number of instances when needed.

Spot instances present a cost-effective alternative for fault-tolerant workloads that do not require high availability. These instances run on spare computing capacity that AWS can reclaim at short notice, which is why they come with discounts of up to 90% compared to on-demand pricing.
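A quick worked example shows what those discount ceilings can mean in practice. The hourly rate below is a made-up figure, not a real AWS price, and the 72% and 90% values are the "up to" maximums quoted above, so treat the result as a best-case sketch rather than a quote.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours per year / 12 months


def monthly_cost(hourly_rate, discount=0.0, hours=HOURS_PER_MONTH):
    """Cost of running one instance for `hours` at a discounted hourly rate."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    return round(hourly_rate * (1.0 - discount) * hours, 2)


on_demand_rate = 0.10  # hypothetical USD per hour, for illustration only

costs = {
    "on_demand": monthly_cost(on_demand_rate),
    "reserved_best_case": monthly_cost(on_demand_rate, discount=0.72),
    "spot_best_case": monthly_cost(on_demand_rate, discount=0.90),
}

if __name__ == "__main__":
    for model, cost in costs.items():
        print(f"{model}: ${cost:.2f} per month")
```

Under these assumptions, one always-on instance costs about $73 per month on demand, roughly $20 with the maximum Reserved discount, and around $7 at the maximum Spot discount. Actual discounts vary by instance family, region, term, and current Spot demand.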

Our case study: Cutting Costs by 81%: Azure Spot VMs Drive Cost Efficiency for Jewelry AI Vision

Additionally, DevOps teams employ various cloud cost optimization practices to further reduce operational expenses and environmental impact. These include:

- Identifying and deleting underutilized instances

- Moving infrequently accessed storage to more cost-effective tiers

- Exploring alternative regions or availability zones with lower pricing

- Leveraging available discounts and pricing models

- Implementing spend monitoring and alert systems to track and control costs proactively
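The last practice in the list, spend monitoring with alerts, can be sketched in a few lines. This is a simplified model that assumes spend grows linearly through the month; the function names, the 80% warning ratio, and the figures in the usage example are assumptions chosen for illustration.

```python
def projected_month_end_spend(spend_to_date, day_of_month, days_in_month):
    """Linear projection of month-end spend from the spend so far."""
    if not 1 <= day_of_month <= days_in_month:
        raise ValueError("day_of_month must be within the month")
    return spend_to_date / day_of_month * days_in_month


def spend_alert(spend_to_date, day_of_month, days_in_month, budget, warn_ratio=0.8):
    """Return 'ok', 'warning', or 'over_budget' based on the projected spend."""
    projected = projected_month_end_spend(spend_to_date, day_of_month, days_in_month)
    if projected > budget:
        return "over_budget"
    if projected >= warn_ratio * budget:
        return "warning"
    return "ok"


if __name__ == "__main__":
    # Hypothetical: $300 spent by day 10 of a 30-day month, against a $1,000 budget.
    print(spend_alert(300, 10, 30, 1000))
```

In this example the projection is $900, which is under budget but above the 80% warning line, so the team hears about it with twenty days left to react. Most cloud providers offer managed budgeting and alerting services that do this kind of projection for you.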

By adopting a strategic approach to resource utilization and cost optimization, businesses can not only achieve sustainable operations but also unlock significant cost savings. This proactive mindset aligns with the principles of environmental stewardship, enabling organizations to thrive while minimizing their ecological footprint.

Read more: Sustainable Solutions with AWS

Reduced Physical Infrastructure and Associated Emissions

Moving to the cloud isn’t just about convenience and scalability – it’s a game-changer for the environment. Here’s why:

Bye-bye Bulky Servers

Cloud computing lets you ditch the on-site server farm. No more rows of whirring machines taking up space and guzzling energy. Cloud providers handle that, often in facilities optimized for efficiency. This translates to less energy used, fewer emissions produced, and a lighter physical footprint for your business.

Commuting? Not Today

Cloud-based tools enable remote work, which means fewer cars on the road spewing out emissions. Not only does this benefit the environment, but it also promotes a more flexible and potentially happier workforce.

Cloud computing offers a win-win for businesses and the planet. By sharing resources, utilizing energy-saving data centers, and adopting flexible deployment models, cloud computing empowers organizations to significantly reduce their environmental impact without sacrificing efficiency or agility. Think of it as a powerful tool for building a more sustainable future, one virtual server at a time.

Effective Infrastructure Management and Sustainability

Effective infrastructure management plays a crucial role in achieving sustainability goals within an organization. By implementing strategies that optimize resource utilization, reduce energy consumption, and promote environmentally friendly practices, businesses can significantly diminish their environmental impact while maintaining operational efficiency.

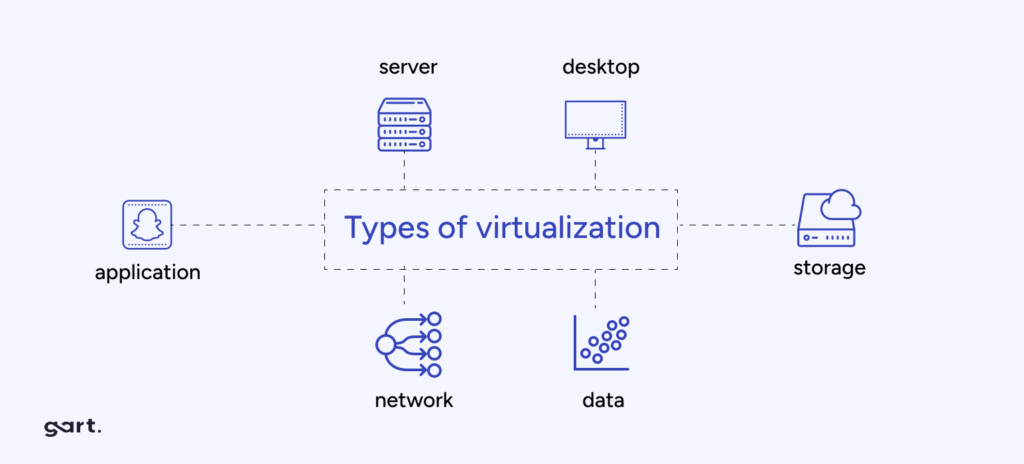

Virtualization and Consolidation Strategies for Reducing Hardware Needs

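Virtualization technology has revolutionized the way organizations manage their IT infrastructure. A hypervisor lets several virtual machines share one physical server, so workloads that once each needed a dedicated, mostly idle machine can be consolidated onto far fewer hosts.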

By ditching the extra servers, you’re using less energy to power and cool them. Think of it like turning off all the lights in empty rooms – virtualization ensures you’re only using the resources you truly need. This translates to significant energy savings and a smaller carbon footprint.

Fewer servers mean less hardware to manufacture and eventually dispose of. This reduces the environmental impact associated with both the production process and electronic waste (e-waste). Virtualization helps you be a more responsible citizen of the digital world.
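To see how consolidation reduces the server count, consider the sketch below. It packs workloads onto hosts with a simple first-fit-decreasing heuristic; the vCPU demands and the 16-vCPU host size are invented for the example, and a real capacity plan would also account for memory, storage, and failover headroom.

```python
def consolidate(vm_demands, host_capacity):
    """Pack VM demands onto hosts using first-fit decreasing.

    Returns a list of hosts, each a list of the demands placed on it.
    """
    hosts = []  # each entry: [remaining_capacity, [placed demands]]
    for demand in sorted(vm_demands, reverse=True):
        if demand > host_capacity:
            raise ValueError(f"demand {demand} exceeds host capacity {host_capacity}")
        for host in hosts:
            if host[0] >= demand:
                host[0] -= demand
                host[1].append(demand)
                break
        else:
            # No existing host has room, so bring another one online.
            hosts.append([host_capacity - demand, [demand]])
    return [placed for _, placed in hosts]


# Hypothetical: eight lightly loaded physical servers, measured in vCPUs actually used.
vcpu_demands = [4, 2, 6, 3, 1, 5, 2, 1]
placement = consolidate(vcpu_demands, host_capacity=16)

if __name__ == "__main__":
    print(f"{len(vcpu_demands)} servers consolidated onto {len(placement)} hosts")
```

Under these assumptions, eight physical machines collapse onto two virtualization hosts. First-fit decreasing is a heuristic, so it does not always find the minimum number of hosts, but it is usually close enough for a first estimate.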

Our case study: IoT Device Management Using Kubernetes

Optimizing with Third-Party Services

In the pursuit of sustainability and resource efficiency, businesses must explore innovative strategies that can streamline operations while reducing their environmental footprint. One such approach involves leveraging third-party services to optimize costs and minimize operational overhead. Cloud computing providers, such as Azure, AWS, and Google Cloud, offer a vast array of services that can significantly enhance the development process and reduce resource consumption.

A prime example is Amazon Relational Database Service (RDS), a fully managed database solution with features such as Multi-AZ deployments, cross-Region read replicas, automated backups, monitoring, and scaling built in. Building and maintaining such a service in-house would be resource-intensive and costly, both in terms of financial investment and environmental impact.

However, striking the right balance between leveraging third-party services and maintaining control over critical components is crucial. When crafting an infrastructure plan, DevOps teams meticulously analyze project requirements and assess the availability of relevant third-party services. Based on this analysis, recommendations are provided on when it’s more efficient to utilize a managed service, and when it’s more cost-effective and suitable to build and manage the service internally.

For ongoing projects, DevOps teams conduct comprehensive audits of existing infrastructure resources and services. If opportunities for cost optimization are identified, they propose adjustments or suggest integrating new services, taking into account the associated integration costs with the current setup. This proactive approach ensures that businesses continuously explore avenues for reducing their environmental footprint while maintaining operational efficiency.

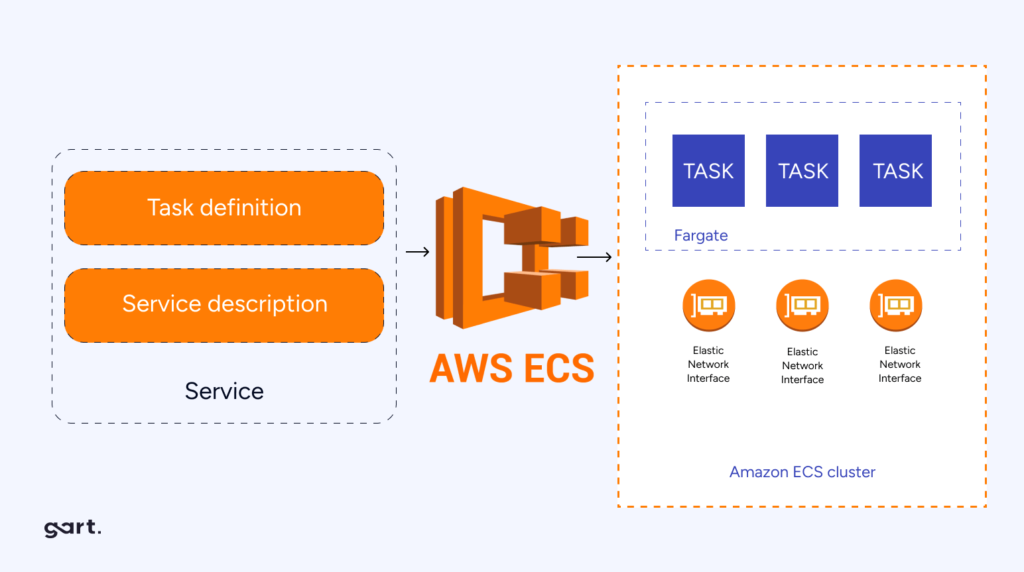

One notable success story involves a client whose services were running on EC2 instances via the Elastic Container Service (ECS). After analyzing their usage patterns, peak periods, and management overhead, the DevOps team recommended transitioning to AWS Fargate, a serverless solution that eliminates the need for managing underlying server infrastructure. Fargate not only offered a more streamlined setup process but also facilitated significant cost savings for the client.

By judiciously adopting third-party services, businesses can reduce operational overhead, optimize resource utilization, and ultimately minimize their environmental impact. This approach aligns with the principles of sustainability, enabling organizations to achieve their goals while contributing to a greener future.

Our case study: Deployment of a Node.js and React App to AWS with ECS

Green Code and DevOps Go Hand-in-Hand

At the heart of this sustainable approach lies green code, the practice of developing and deploying software with a focus on minimizing its environmental impact. Green code prioritizes efficient algorithms, optimized data structures, and resource-conscious coding practices.

At its core, Green Code is about designing and implementing software solutions that consume fewer computational resources, such as CPU cycles, memory, and energy. By optimizing code for efficiency, developers can reduce the energy consumption and carbon footprint associated with running applications on servers, desktops, and mobile devices.
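A small example of what "optimized data structures" can look like: both functions below count how many events belong to a known set of IDs and return the same answer, but the second does far less work per event. The function names and sample data are made up for illustration.

```python
def count_known_slow(events, known_ids):
    """Scans the whole list for every event: O(len(events) * len(known_ids))."""
    known_list = list(known_ids)
    return sum(1 for e in events if e in known_list)


def count_known_fast(events, known_ids):
    """One hash lookup per event: O(len(events) + len(known_ids)) on average."""
    known_set = set(known_ids)
    return sum(1 for e in events if e in known_set)


if __name__ == "__main__":
    events = list(range(0, 2000, 2))  # hypothetical event IDs
    known = range(1000)               # hypothetical known IDs
    print(count_known_slow(events, known), count_known_fast(events, known))
```

On small inputs the difference is invisible, but on a service handling millions of events the list version burns CPU cycles on repeated scans that the set version never performs. Fewer cycles for the same result is the essence of green code.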

Continuous Monitoring and Feedback

DevOps promotes continuous monitoring of applications, providing valuable insights into resource utilization. These insights can be used to identify areas for code optimization, ensuring applications run efficiently and consume less energy.

Infrastructure Automation

Automating infrastructure provisioning and management through tools like Infrastructure as Code (IaC) helps eliminate unnecessary resources and idle servers. Think of it like switching off the lights in an empty room – automation ensures resources are only used when needed.
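The "lights off in an empty room" idea often takes the form of a schedule for non-production environments. The sketch below shows only the decision logic, under assumed working hours of 08:00 to 20:00 on weekdays; in a real setup the schedule would be enforced by the IaC or scheduling tooling rather than by a standalone script.

```python
from datetime import datetime

WORKDAYS = (0, 1, 2, 3, 4)  # Monday=0 ... Friday=4, as returned by datetime.weekday()


def should_be_running(now, start_hour=8, end_hour=20, workdays=WORKDAYS):
    """True if a non-production environment should be up at `now`.

    The running window is [start_hour, end_hour) on the given workdays.
    """
    return now.weekday() in workdays and start_hour <= now.hour < end_hour


def weekly_running_hours(start_hour=8, end_hour=20, workdays=WORKDAYS):
    """Hours per week the environment stays up under the schedule."""
    return len(workdays) * (end_hour - start_hour)


if __name__ == "__main__":
    hours = weekly_running_hours()
    print(f"Running {hours} of 168 hours per week")
    print(should_be_running(datetime(2024, 1, 8, 10)))  # a Monday morning
```

With this assumed schedule the environment runs 60 of the 168 hours in a week, roughly 64% fewer running hours than an always-on setup.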

Containerization

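Containerization technologies like Docker package applications with all their dependencies, allowing them to run consistently on any system. Because containers share the host's operating system kernel instead of each running a full guest OS, many of them can be packed onto a single server, which reduces the number of servers needed and lowers overall energy consumption.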

Cloud-Native Development

By leveraging cloud platforms, developers can benefit from pre-built, scalable infrastructure with high energy efficiency. Cloud providers are constantly optimizing their data centers for sustainability, so you don’t have to shoulder the burden alone.

DevOps practices not only streamline development and deployment processes, but also create a culture of resource awareness and optimization. This, combined with green code principles, paves the way for building applications that are not just powerful, but also environmentally responsible.

How Businesses Are Using DevOps, Cloud, and Green Code to Thrive

Case Study 1: Transforming a Local Landfill Solution into a Global Platform

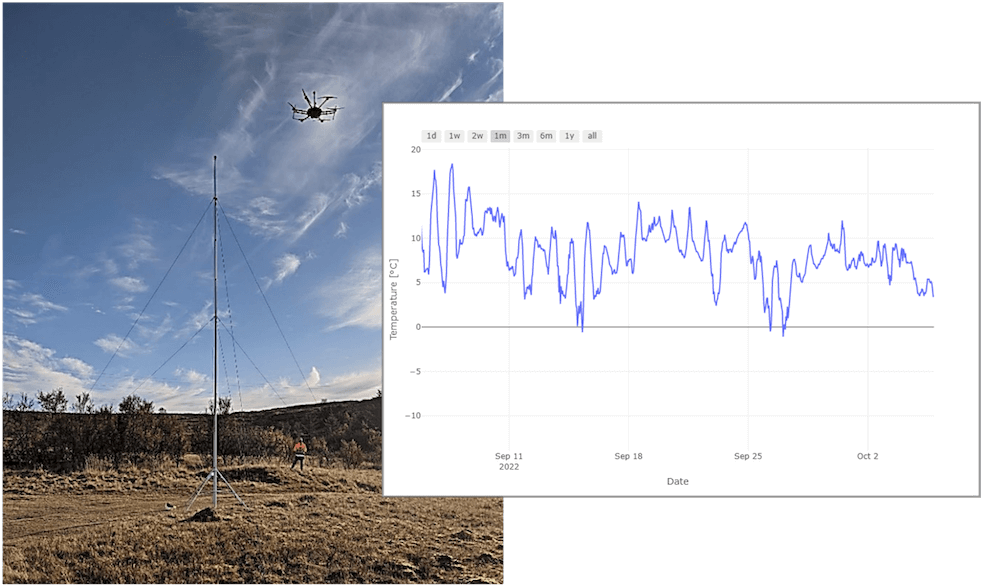

ReSource International, an Icelandic environmental solutions company, developed elandfill.io, a digital platform for monitoring and managing landfill operations. However, scaling the platform globally posed challenges in managing various components, including geospatial data processing, real-time data analysis, and module integration.

Gart Solutions implemented the RMF, a suite of tools and approaches designed to facilitate the deployment of powerful digital solutions for landfill management globally.

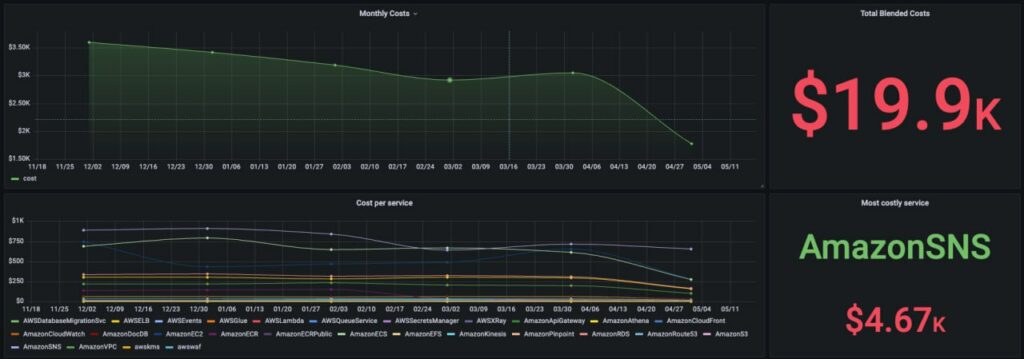

Case Study 3: The #1 Music Promotion Services Cuts Costs with Sustainable AWS Solutions

The #1 Music Promotion Services, a company helping independent artists, faced rising AWS infrastructure costs due to rapid growth. A multi-faceted approach focused on optimization and cost-saving strategies was implemented. This included:

- Amazon SNS Optimization: A usage audit identified redundant notifications and opportunities for batching messages, leading to lower usage charges.

- EC2 and RDS Cost Management: Right-sizing instances, utilizing reserved instances, and implementing auto-scaling ensured efficient resource utilization.

- Storage Optimization: Lifecycle policies and data cleanup practices reduced storage costs.

- Traffic and Data Transfer Management: Optimized data transfer routes and cost monitoring with alerts helped manage unexpected spikes.

Results: Monthly AWS costs were slashed by 54%, with significant savings across services like Amazon SNS and EC2/RDS. They also established a framework for sustainable cost management, ensuring long-term efficiency.
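The message batching mentioned in the Amazon SNS item can be illustrated with a short sketch. It only shows the grouping step and is not the code used in the project; the batch size of 10 reflects the per-request limit of the SNS `PublishBatch` API, and the notification names are placeholders.

```python
def batch_messages(messages, max_batch_size=10):
    """Split messages into consecutive batches of at most max_batch_size."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    return [
        messages[i:i + max_batch_size]
        for i in range(0, len(messages), max_batch_size)
    ]


if __name__ == "__main__":
    # Hypothetical queue of 25 pending notifications.
    pending = [f"notification-{n}" for n in range(25)]
    batches = batch_messages(pending)
    print(f"{len(pending)} messages sent in {len(batches)} requests")
```

Each batch would then be sent in a single publish request instead of one request per message, so 25 notifications need 3 API calls rather than 25.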

Partner with Gart for IT Cost Optimization and Sustainable Business

As businesses strive for sustainability, partnering with the right IT provider is crucial for optimizing costs and minimizing environmental impact. Gart emerges as a trusted partner, offering expertise in cloud computing, DevOps, and sustainable IT solutions.

Gart’s cloud proficiency spans on-premises-to-cloud migration, cloud-to-cloud migration, and multi-cloud/hybrid cloud management. Our DevOps services include cloud adoption, CI/CD streamlining, security management, and firewall-as-a-service, enabling process automation and operational efficiencies.

Recognized by IAOP, GSA, Inc. 5000, and Clutch.co, Gart adheres to PCI DSS, ISO 9001, ISO 27001, and GDPR standards, ensuring quality, security, and data protection.

By partnering with Gart, businesses can optimize IT costs, reduce their carbon footprint, and foster a sustainable future. Leverage Gart’s expertise to align your IT strategies with environmental goals and unlock the benefits of cost optimization and sustainability.
