If you want your project to be as resilient and scalable as Spotify, migrating it to Kubernetes is a common route. Kubernetes is key for scaling modern applications, but the migration involves many moving parts. There has been much debate around Kubernetes, yet it is currently one of the best tools for orchestrating containers in large-scale projects. Still, transitioning to Kubernetes is no easy task.

Our team has accumulated substantial experience working on projects that required Kubernetes implementation or support at different stages. However, to properly integrate Kubernetes from any starting point, it’s not enough to simply ask the client’s team what’s ready or what’s needed. It’s crucial to have an understanding of all the work stages to avoid overlooking anything important.

If you’re planning to run your project in containers on Kubernetes, this guide outlines the key steps. It covers our proven 11-step migration process and three real-life case studies, so you know what to expect and how long it takes.

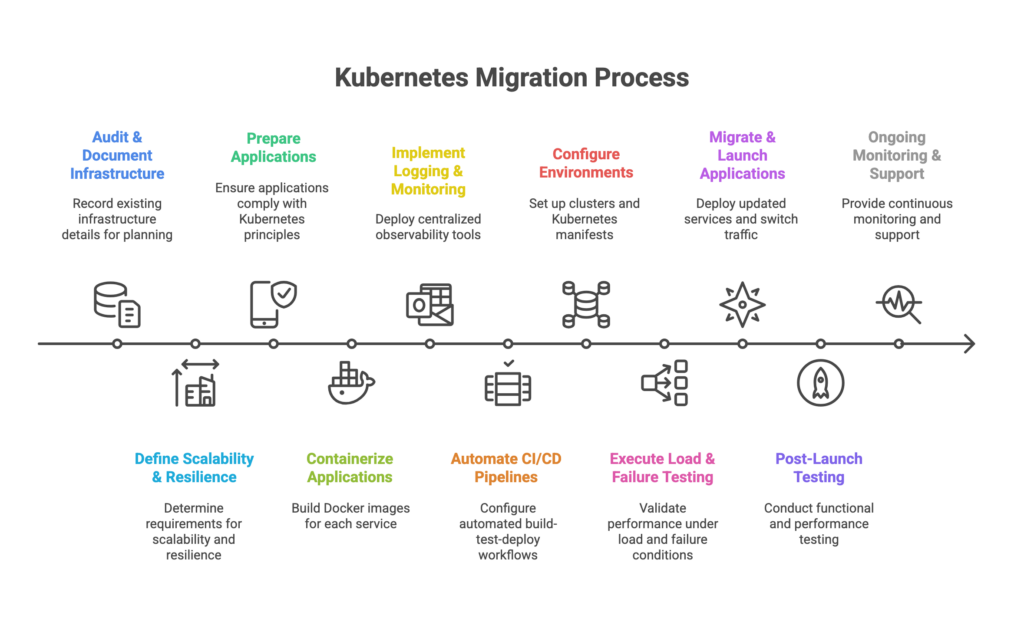

11 Steps of Kubernetes Migration

Descriptions of complex processes like this are often just “idealised examples.” Our list is based on our own practical experience, which we present as an optimal approach from our perspective. Successful migration should follow these steps:

▪️ Audit and document the project’s existing server infrastructure

This involves identifying which applications run on which servers, and the technology stack in use. If we’re joining an ongoing Kubernetes migration, we’ll assess the progress made and reusable scripts. The audit output is a document detailing the current infrastructure, relationships, and the migration’s status.
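One way to keep the audit output machine-readable is a small inventory structure. The sketch below is only an illustration: the `AppRecord` fields and the idea of deriving a migration order from the recorded dependencies are assumptions, not a description of the audit document itself. It relies on `graphlib` from the Python standard library (3.9+).

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter
from typing import List


@dataclass
class AppRecord:
    """One row of a hypothetical audit inventory."""
    name: str
    server: str
    stack: str
    depends_on: List[str] = field(default_factory=list)
    migrated: bool = False


def migration_order(apps: List[AppRecord]) -> List[str]:
    """Order apps so that each one appears after the apps it depends on."""
    known = {app.name for app in apps}
    # Map each app to its known dependencies (its predecessors in the graph).
    graph = {
        app.name: {dep for dep in app.depends_on if dep in known}
        for app in apps
    }
    # Raises graphlib.CycleError if the recorded dependencies form a cycle.
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    inventory = [
        AppRecord("api", "srv-1", "Node.js", depends_on=["db", "cache"]),
        AppRecord("db", "srv-2", "MySQL"),
        AppRecord("cache", "srv-2", "Redis"),
    ]
    print(migration_order(inventory))
```

Dependencies that are not part of the inventory (for example, third-party APIs) are ignored here; a real audit would likely track them separately.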

▪️ Define requirements for application scalability and resilience

We determine if automatic scaling of application instances is needed to handle increased loads. We also consider whether backup application instances are required to enhance system reliability.
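To make the scaling requirement concrete, it helps to know how the Kubernetes Horizontal Pod Autoscaler picks a replica count: it scales the current count by the ratio of the observed metric to the target and rounds up. The sketch below reproduces that rule in plain Python. The function name and the default bounds are illustrative, and the tolerance band and stabilization windows of the real autoscaler are left out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Replica count suggested by the basic HPA rule:
    ceil(current_replicas * current_metric / target_metric)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds, as an autoscaler would.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU against a 60% target.
    print(desired_replicas(4, 90.0, 60.0))
```

Running the numbers like this during requirements gathering gives a rough idea of the minimum and maximum replica counts the cluster must be sized for.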

▪️ Prepare applications for the Kubernetes cluster

We verify the applications meet 12-factor app requirements. If needed, we finalise application setup to align with the requirements. Our DevOps team will need answers to key questions from the development team about stateful/stateless behavior, user session handling, dependencies, configuration, and launch process.
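One of the 12-factor requirements that most often needs work is configuration: settings should come from the environment rather than from files baked into the image. A minimal sketch of that pattern follows; the variable names `DATABASE_URL`, `PORT`, and `LOG_LEVEL` are hypothetical placeholders, not names taken from any specific project.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    database_url: str
    port: int
    log_level: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Build settings from environment variables only (12-factor 'Config')."""
    try:
        database_url = env["DATABASE_URL"]  # hypothetical variable name
    except KeyError:
        # Fail fast at startup instead of at the first database call.
        raise RuntimeError("DATABASE_URL must be set") from None
    return Settings(
        database_url=database_url,
        port=int(env.get("PORT", "8080")),
        log_level=env.get("LOG_LEVEL", "INFO"),
    )


if __name__ == "__main__":
    print(load_settings({"DATABASE_URL": "postgres://db/app"}))
```

In a cluster, the same variables would typically be supplied through ConfigMaps and Secrets, so the image stays identical across environments.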

▪️ Containerize each application

We create Docker containers, leveraging pre-built base images where possible.

▪️ Design and implement centralized logging and monitoring for the applications and cluster

Deploy centralized observability (e.g., Prometheus + Grafana, ELK, Fluentd) to track cluster and application health.
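On the application side, centralized logging is easier when each service writes structured logs to standard output and leaves collection to the cluster's log agent. The following is a minimal sketch of that idea using only the Python standard library; the field names in the JSON payload are an assumption, not a format required by any of the tools named above.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def build_logger(name: str, stream=None) -> logging.Logger:
    """Return a logger that writes JSON lines to stdout (or a given stream)."""
    handler = logging.StreamHandler(stream if stream is not None else sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]  # replace any handlers set up earlier
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    build_logger("orders").info("order %s created", 42)
```

One JSON object per line keeps parsing on the collector side simple and avoids multi-line log entries being split.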

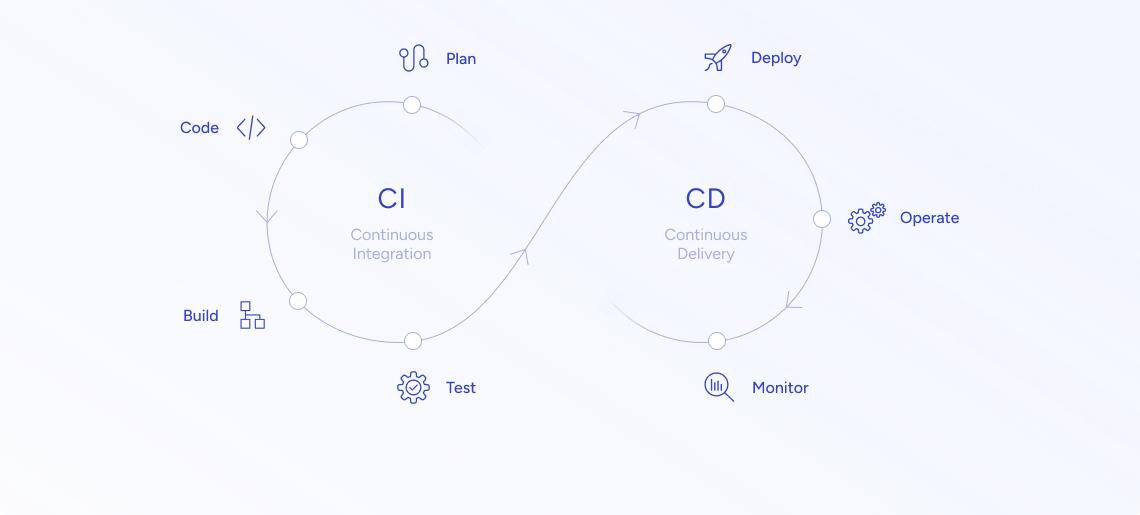

▪️ Automate CI/CD

This enables developers to commit code changes that are then promptly deployed to the cluster, allowing applications to be properly rebuilt and restarted.

▪️ Prepare environments, migrations, and Kubernetes manifests

We configure the Kubernetes cluster, set up required databases, queues, and other supporting software. Deployment manifests are created for each service.
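As an illustration of what a per-service deployment manifest contains, the sketch below builds a minimal `apps/v1` Deployment as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The service name, image reference, port, and `/healthz` probe path are hypothetical values chosen for the example.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 2,
                        port: int = 8080) -> dict:
    """Build a minimal apps/v1 Deployment manifest for one service."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Hypothetical health endpoint used as readiness check.
                        "readinessProbe": {
                            "httpGet": {"path": "/healthz", "port": port},
                        },
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest("api", "registry.example.com/api:1.0")
    print(json.dumps(manifest, indent=2))
```

In practice, teams often template such manifests with Helm or a similar tool rather than generating them by hand; the structure of the resulting object is the same.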

▪️ Conduct load and failure testing

We validate the cluster functions as designed, and that the infrastructure can handle the required load and failure scenarios.
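A load test boils down to issuing many concurrent requests and looking at the latency distribution rather than the average. The sketch below shows that core loop with the standard library only. The `call` argument stands in for whatever request the test issues (it is a placeholder, not a real client), and error handling is deliberately omitted.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def run_load(call: Callable[[], None], requests: int,
             concurrency: int) -> List[float]:
    """Invoke `call` `requests` times on a thread pool; return latencies in seconds."""
    def timed(_: int) -> float:
        start = time.perf_counter()
        call()
        return time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(timed, range(requests)))


def summarize(latencies: List[float]) -> dict:
    """Summarize latencies; needs at least two samples."""
    cuts = statistics.quantiles(latencies, n=100)  # 99 percentile cut points
    return {
        "count": len(latencies),
        "p50": cuts[49],
        "p95": cuts[94],
        "max": max(latencies),
    }


if __name__ == "__main__":
    # Stand-in for a real request: sleep for about 5 ms.
    samples = run_load(lambda: time.sleep(0.005), requests=200, concurrency=20)
    print(summarize(samples))
```

For failure testing, the same measurement can be repeated while a pod or node is deliberately taken down, comparing the percentiles before and during the outage.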

▪️ Migrate and launch applications

We update service deployments to the latest versions and synchronize data between the old and new environments. This may involve temporarily suspending the application, or a switchover approach with database replication.

▪️ Perform final post-launch testing

Testers comprehensively verify application functionality, addressing any critical issues found before going live.

▪️ Monitor and support the Kubernetes cluster

Ongoing monitoring checks the health of cluster components and application services. We handle any problems identified through monitoring. For evolving projects, this includes adding new services, integrating testing into CI/CD, and addressing vulnerabilities.

Tip:

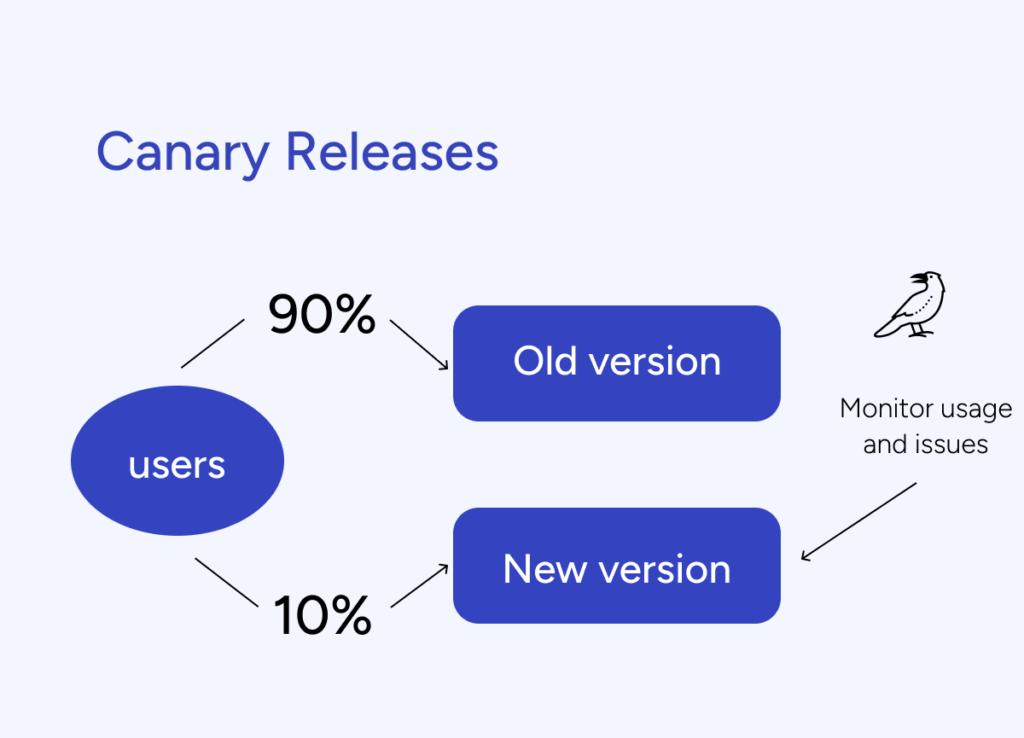

- Use canary releases to gradually shift traffic to new versions and enable quick rollback if issues arise.

- Automate rollback conditions based on error-rate thresholds.

Canary Releases

To mitigate the risks of major changes during application migrations and launches, Kubernetes enables deploying and rolling back new service versions. This allows us to perform “canary releases” – partially deploying a new version and migrating a subset of users to it. This approach helps assess changes in load, track errors, monitor the project’s status, and if all is well, smoothly continue the transition to the new version.

The process is automated. In the event of critical issues, you can automatically roll back to the previous version to address the problems.
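The rollback condition itself can be expressed as a small decision function that compares the canary's error rate with an absolute threshold and with the stable version. The sketch below is an assumption about how such a rule might look: the threshold values, the `WindowStats` type, and the three outcomes are illustrative, not the behavior of any particular rollout tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WindowStats:
    """Request and error counts for one observation window."""
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_decision(stable: WindowStats, canary: WindowStats,
                    max_error_rate: float = 0.02,
                    max_relative_increase: float = 1.5,
                    min_requests: int = 100) -> str:
    """Return 'wait', 'rollback', or 'promote' for one observation window."""
    if canary.requests < min_requests:
        return "wait"  # not enough canary traffic to judge
    if canary.error_rate > max_error_rate:
        return "rollback"  # absolute threshold breached
    if (stable.error_rate > 0
            and canary.error_rate > stable.error_rate * max_relative_increase):
        return "rollback"  # noticeably worse than the stable version
    return "promote"


if __name__ == "__main__":
    stable = WindowStats(requests=10_000, errors=50)
    print(canary_decision(stable, WindowStats(requests=500, errors=3)))
    print(canary_decision(stable, WindowStats(requests=500, errors=25)))
```

Requiring a minimum number of canary requests avoids rolling back, or promoting, on the strength of a handful of samples.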

Real‑World Case Studies: Kubernetes Migrations in Action

| Project | Challenge | Solution | Results |
|---|---|---|---|
| IoT Device Management (Microchip Industry) | Centralized platform, no multi-tenant deployment | Cloud-agnostic Kubernetes + Helm + custom Ingress for UDP protocols + autoscaling + monitoring | Multi-infrastructure deployment, automated builds, robust observability, delivered in 1.5 years |
| Mobile Photography SaaS (>10M users) | Dockerized microservices on separate EC2 instances, causing cost and resource waste | Full Kubernetes migration with gRPC, Envoy, Kubernetes cluster design, phased migration | Deployment time down from 2 days to 3 hours, annual deployments up from 1,800 to 3,100, 45% EC2 cost reduction |
| Travel Portal | Development setup only; needed robust production deployment with fault tolerance | Production-ready cluster, CI/CD pipeline, and architectures for DB, queue, and cache resilience | Production cluster with fault-tolerant DB, queues, and caching, plus CI/CD, delivered in 6 months |

Let’s look at each project in more detail:

Project #1: IoT Device Management Platform

The client, a leading product company in the microchip market, had an IoT platform for managing devices with their microchips in customer infrastructure, such as mobile phones, cars, and other electronic equipment. The challenge was to distribute this platform and make it possible for customers to install it on their own infrastructure. The existing solution was complex, centralized, and only worked on the client’s side.

- Industry: Microchip manufacturing

- Technologies: AWS, Azure, Docker, GitLab, Grafana, Helm, Kubernetes, Prometheus

What we did:

▪️ Built a cloud-agnostic Kubernetes solution that could be installed on any customer infrastructure and scaled on demand.

▪️ Configured the internal network to work with COAP and MQTT protocols for device connectivity.

▪️ Used Helm charts as the primary configuration and delivery tool.

▪️ Developed a custom Ingress controller and load balancing solution to handle the IoT-specific UDP protocol requirements.

▪️ Implemented a horizontal autoscaling approach based on basic and application-specific metrics.
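The need for custom ingress handling comes from the protocols involved: CoAP normally runs over UDP (default port 5683), while MQTT runs over TCP (default port 1883), and the standard Kubernetes Ingress resource only describes HTTP(S) routing. As a rough illustration, and not a reconstruction of the project's actual setup, the sketch below generates plain Service manifests that expose one port per protocol. The service names and the `device-gateway` label are hypothetical.

```python
import json
from typing import Dict


def service_manifest(name: str, app_label: str, port: int, protocol: str,
                     service_type: str = "LoadBalancer") -> Dict:
    """Build a v1 Service manifest exposing a single port."""
    if protocol not in ("TCP", "UDP", "SCTP"):
        raise ValueError(f"unsupported protocol: {protocol}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": {"app": app_label},
            "ports": [{
                "port": port,
                "targetPort": port,
                "protocol": protocol,
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical names; ports are the protocol defaults.
    coap = service_manifest("coap-gateway", "device-gateway", 5683, "UDP")
    mqtt = service_manifest("mqtt-broker", "device-gateway", 1883, "TCP")
    print(json.dumps([coap, mqtt], indent=2))
```

Whether a `LoadBalancer` Service can carry UDP traffic depends on the underlying infrastructure, which is one reason a cloud-agnostic platform may need its own load balancing layer.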

Results:

- Provided a universal Kubernetes-based IoT device management platform that could be deployed on the customer’s infrastructure.

- Automated the build, release, and management processes.

- Implemented robust monitoring and observability solutions.

- Delivered the project in 1.5 years, following industry best practices for security.

Project #2: Optimizing Kubernetes Costs on AWS for a Mobile Photography SaaS

Our customer was a rapidly growing SaaS application for mobile photography, with over 10 million users. Initially, they operated a single PHP monolith application with a MySQL database. As the user base expanded, the team began creating Node.js and Go microservices, containerized with Docker. However, these microservices were hosted on separate EC2 instances, leading to excessive resource waste and inefficiency.

- Industry: IT/SaaS Software

- Technologies: AKS (Kubernetes), Amazon EC2, Amazon ECS, AWS, AWS DMS, Docker, Envoy, Go, gRPC, Kubernetes

What we did:

▪️ Gart Solutions conducted an infrastructure audit and provided cloud consulting services to address the challenges of inefficient AWS EC2 instances.

▪️ Based on the assessment, the team determined that the most suitable solution was the implementation of Kubernetes, complemented by cloud-native technologies like gRPC and Envoy.

▪️ The choice of Kubernetes was influenced by its ease of use, robust support, and open-source nature, aligning with the company’s adoption of AWS, Go, and gRPC for their microservices.

▪️ The company established its own Kubernetes cluster and developed custom components to meet specific deployment requirements.

▪️ Gart assisted in migrating the services to Kubernetes, starting with small, low-risk services and progressing to a full-scale migration.

Results:

- The time required for the first deployment was reduced from 2 days to 3 hours.

- The number of deployments increased from 1,800 to 3,100 annually, an increase of roughly 72%.

- The company achieved a remarkable 45% reduction in overall EC2 costs, thanks to the efficiency gains from Kubernetes adoption.

- By adopting gRPC and Envoy, the company improved security and communication between services written in different programming languages.

- Envoy enabled the company to directly serve gRPC and HTTP/2 to mobile clients through edge load balancers, improving observability and standardization of dashboards.
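The percentages behind the deployment figures quoted in this list can be checked with a short calculation. The only assumption in the sketch below is that "2 days" is read as 48 wall-clock hours.

```python
def percent_change(before: float, after: float) -> float:
    """Signed percentage change from `before` to `after`."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (after - before) / before * 100


# Annual deployments: 1,800 before, 3,100 after.
deployments = percent_change(1800, 3100)

# First-deployment time in hours. Assumption: "2 days" means 48 hours.
deploy_time = percent_change(48, 3)

if __name__ == "__main__":
    print(f"deployments per year: {deployments:+.2f}%")
    print(f"first-deployment time: {deploy_time:+.2f}%")
```

Read this way, deployment frequency rose by a little over 72%, and the first-deployment time fell by almost 94%.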

The transition to cloud-native technology, spearheaded by Kubernetes, gRPC, and Envoy, not only enhanced efficiency and cost-effectiveness but also enabled the scaling of their services.

Project #3: Travel Portal

- Industry: Travel planning service

- Tech Stack: Node.js for front-end and back-end, Java for back-end.

- Timeline: 6 months

What We Did:

▪️ Deployed a production cluster from an existing development environment, accounting for load and resilience. The application itself was already built.

▪️ Designed server and service (DB, queues, caching) architecture for production.

▪️ Configured fault tolerance for databases, queues, and caching.

▪️ Set up the k8s cluster servers.

▪️ Established CI/CD for the production cluster.

In this project, each piece of application functionality is handled by a separate microservice. The front-end is its own service and calls numerous back-end services. Hotels, theaters, and flights, for example, can each be a distinct service, all integrated through the front-end. This microservice architecture is well suited to Kubernetes.

The back-end services have no UI, responding to requests with text data for the front-end to render.

When Should You Migrate to Kubernetes?

There’s no straightforward answer on whether to make the move to Kubernetes. The decision really depends on the specifics of the project.

One can confidently recommend Kubernetes for projects handling large data volumes and a growing number of applications. As the application count increases, server capacity can become a bottleneck, making containerization the logical solution. Enterprises planning expansion, or those already pushing the limits of their infrastructure, should seriously consider transitioning to a Kubernetes cluster, which can run on any platform.

Consider Kubernetes when your project:

- Runs multiple services or microservices

- Requires auto-scaling / resilience

- Targets multi-cloud or hybrid deployments

- Needs improved resource utilization and cost efficiency

If your project requires scaling, improved resilience, or Kubernetes cluster support in general, we encourage you to reach out to us. We can assess your specific needs and advise on the best path forward.
