- Kubernetes – The So-Called Orchestrator

- How does a Headless service benefit stateful applications?

- What are the key differences between NodePort and LoadBalancer services?

- Why is ClusterIP typically the default service type?

- What are Docker containers?

- Pod

- Node Overview

- Kubernetes Cluster

- Streamlining Container Management with Kubernetes

- Why Kubernetes Became a Separate Service Provided by Gart

- Use Cases of Kubernetes as a Service

- Kubernetes – Your App’s Best Friend

Kubernetes as a Service offers a practical solution for businesses looking to leverage the power of Kubernetes without the complexities of managing the underlying infrastructure.

Kubernetes – The So-Called Orchestrator

Kubernetes can be described as a top-level construct that sits above the architecture of a solution or application.

Picture Kubernetes as a master conductor for your container orchestra. It’s a powerful tool that helps manage and organize large groups of containers. Just like a conductor coordinates musicians to play together, Kubernetes coordinates your containers, making sure they’re running, scaling up when needed, and even replacing them if they fail. It helps you focus on the music (your applications) without worrying about the individual instruments (containers).

Kubernetes acts as an orchestrator, a powerful tool that facilitates the management, coordination, and deployment of all these microservices running within the Docker containers. It takes care of scaling, load balancing, fault tolerance, and other aspects to ensure the smooth functioning of the application as a whole.

However, managing Kubernetes clusters can be complex and resource-intensive. This is where Kubernetes as a Service steps in, providing a managed environment that abstracts away the underlying infrastructure and offers a simplified experience.

Key Types of Kubernetes Services

- ClusterIP: The default service type, which exposes services only within the cluster, making it ideal for internal communication between components.

- NodePort: Extends access by opening a specific port on each worker node, allowing for limited external access—often used for testing or specific use cases. NodePort service ports must be within the range 30,000 to 32,767, ensuring external accessibility at defined ports.

- LoadBalancer: Integrates with cloud providers’ load balancers, making services accessible externally through a single entry point, suitable for production environments needing secure external access.

- Headless Service: Used for stateful applications needing direct pod-to-pod communication, which bypasses the usual load balancing in favor of direct IP-based connections.

In Kubernetes, service components provide stable IP addresses that remain consistent even when individual pods change. This stability ensures that different parts of an application can reliably communicate within the cluster, allowing seamless internal networking and load balancing across pod replicas without needing to track each pod’s dynamic IP. Services simplify communication, both internally within the cluster and with external clients, enhancing Kubernetes application reliability and scalability.

How does a Headless service benefit stateful applications?

Headless services in Kubernetes are particularly beneficial for stateful applications, like databases, that require direct pod-to-pod communication. Unlike typical services that use load balancing to distribute requests across pod replicas, a headless service provides each pod with its unique, stable IP address, enabling clients to connect directly to specific pods.

Key Benefits for Stateful Applications

- Direct Communication: Allows clients to connect to individual pods rather than a randomized one, which is crucial for databases where a “leader” pod may handle writes, and “follower” pods synchronize data from it.

- DNS-Based Pod Discovery: Instead of a single ClusterIP, headless services allow DNS queries to return individual pod IPs, supporting applications where pods need to be uniquely addressable.

- Support for Stateful Workloads: In databases and similar applications, each pod maintains its own state. Headless services ensure reliable, direct connections to each unique pod, essential for consistency in data management and state synchronization.

Headless services are thus well-suited for complex, stateful applications where pods have specific roles or need close data synchronization.

What are the key differences between NodePort and LoadBalancer services?

The key differences between NodePort and LoadBalancer services in Kubernetes lie in their network accessibility and typical use cases.

NodePort

- Access: Opens a specific port on each Kubernetes node, making the service accessible externally at the node’s IP address and the assigned NodePort.

- Use Case: Typically used in testing or development environments, or where specific port-based access is required.

- Limitations: Limited scalability and security since it directly exposes each node on a defined port, which may not be ideal for high-traffic production environments.

LoadBalancer

- Access: Integrates with cloud providers’ load balancers (e.g., AWS ELB, GCP Load Balancer) to route external traffic to the cluster through a single endpoint.

- Use Case: Best suited for production environments needing reliable, secure external access, as it provides a managed entry point for services.

- Advantages: Supports high availability and scalability by leveraging cloud-native load balancing, which routes traffic effectively without exposing individual nodes directly.

In summary: NodePort is suitable for limited, direct port-based access, while LoadBalancer offers a more robust and scalable solution for production-level external traffic, relying on cloud load balancers for secure and managed access.

Why is ClusterIP typically the default service type?

ClusterIP is typically the default service type in Kubernetes because it is designed for internal communication within the cluster. It allows pods to communicate with each other through a single, stable internal IP address without exposing any services to the external network. This configuration is ideal for most Kubernetes applications, where components (e.g., microservices or databases) need to interact internally without needing direct external access.

Reasons for ClusterIP as the Default

- Enhanced Security: By restricting access to within the cluster, ClusterIP limits exposure to external networks, which is often essential for security.

- Internal Load Balancing: ClusterIP automatically balances requests among pod replicas within the cluster, simplifying internal service-to-service communication.

- Ease of Use: Since most applications rely on internal networking, ClusterIP provides an easy setup without additional configurations.

As the internal communication standard in Kubernetes, ClusterIP simplifies development and deployment by keeping network traffic within the cluster, ensuring both security and performance.

Our team of experts can help you deploy, manage, and scale your Kubernetes applications.

What are Docker containers?

Imagine a container like a lunchbox for software. Instead of packing your food, you pack an application, along with everything it needs to run, like code, settings, and libraries. Containers keep everything organized and separate from other containers, making it easier to move and run your application consistently across different places, like on your computer, a server, or in the cloud.

In the past, when we needed to deploy applications or services, we relied on full-fledged computers with operating systems, additional software, and user configurations. Managing these large units was a cumbersome process, involving service startup, updates, and maintenance. It was the only way things were done, as there were no other alternatives.

Then came the concept of Docker containers. Think of a Docker container as a small, self-contained logical unit in which you only pack what’s essential to run your service. It includes a minimal operating system kernel and the necessary configurations to launch your service efficiently. The configuration of a Docker container is described using specific configuration files.

The name “Docker” comes from the analogy of standardized shipping containers used in freight transport. Just like those shipping containers, Docker containers are universal and platform-agnostic, allowing you to deploy them on any compatible system. This portability makes deployment much more convenient and efficient.

With Docker containers, you can quickly start, stop, or restart services, and they are isolated from the host system and other containers. This isolation ensures that if something crashes within a container, you can easily remove it, create a new one, and relaunch the service. This simplicity and ease of management have revolutionized the way we deploy and maintain applications.

Docker containers have brought a paradigm shift by offering lightweight, scalable, and isolated units for deploying applications, making the development and deployment processes much more streamlined and efficient.

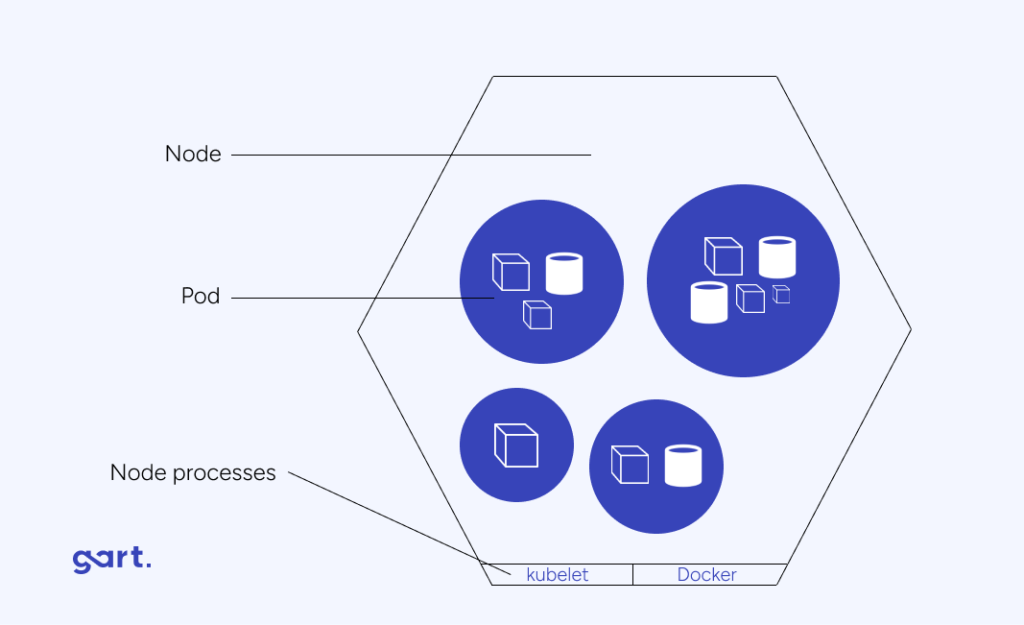

Pod

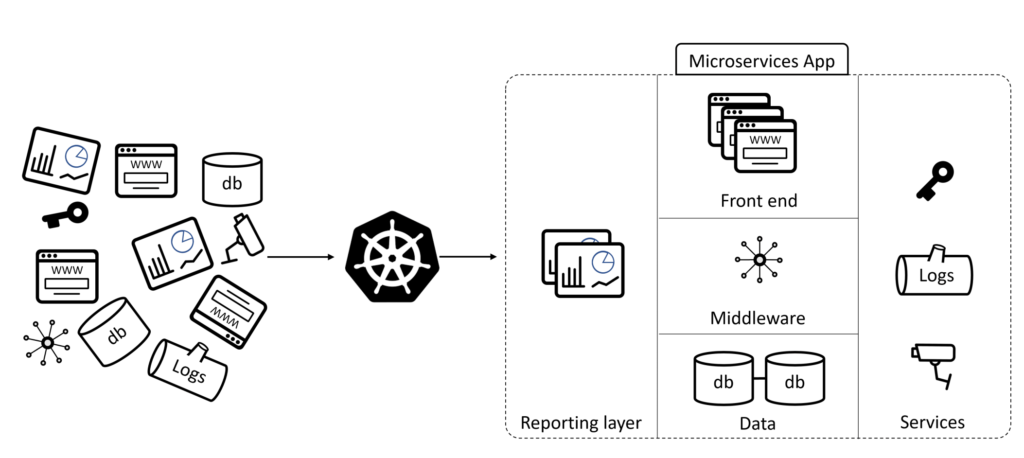

Kubernetes adopts a microservices architecture, where applications are broken down into smaller, loosely-coupled services. Each service performs a specific function, and they can be independently deployed, scaled, and updated. Microservices architecture promotes modularity and enables faster development and deployment of complex applications.

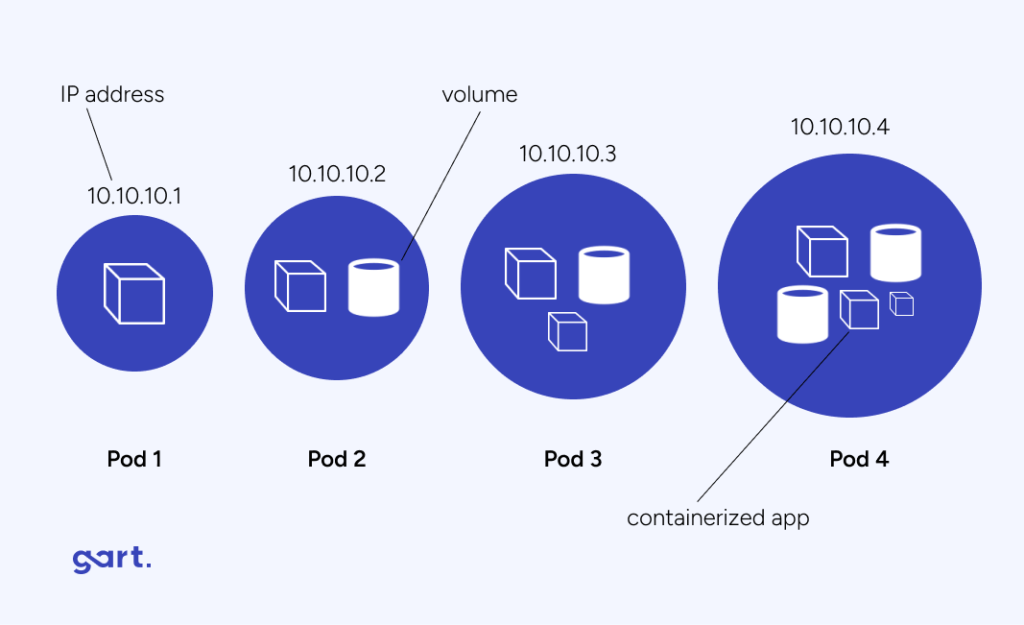

In Kubernetes, the basic unit of deployment is a Pod. A Pod is a logical group of one or more containers that share the same network namespace and are scheduled together on the same Worker Node.

A pod is like a cozy duo of friends sitting together. In the world of containers, a pod is a small group of containers that work closely together on the same task. Just as friends in a pod chat and collaborate easily, containers in a pod can easily share information and resources. They’re like buddies that stick together to get things done efficiently.

Containers within a Pod can communicate with each other using localhost. Pods represent the smallest deployable units in Kubernetes and are used to encapsulate microservices.

Containers are the runtime instances of images, and they run within Pods. Containers are isolated from one another and share the host operating system’s kernel. This isolation makes containers lightweight and efficient, enabling them to run consistently across different environments.

Node Overview

In the tech world, a node is a computer (or server) that’s part of a Kubernetes cluster. It’s where your applications actually run. Just like worker bees do various tasks in a beehive, nodes handle the work of running and managing your applications. They provide the resources and environment needed for your apps to function properly, like storage, memory, and processing power. So, a Kubernetes node is like a busy bee in your cluster, doing the hands-on work to keep your applications buzzing along.

Kubernetes Cluster

Imagine a cluster like a team of ants working together. In the tech world, a Kubernetes cluster is a group of computers (or servers) that work together to manage and run your applications. These computers collaborate under the guidance of Kubernetes to ensure your applications run smoothly, even if some computers have issues. It’s like a group of ants working as a team to carry food – if one ant gets tired or drops the food, others step in to keep things going. Similarly, in a Kubernetes cluster, if one computer has a problem, others step in to make sure your apps keep running without interruption.

Image source: Kubernetes.io

Streamlining Container Management with Kubernetes

Everyone enjoyed working with containers, and in the architecture of these microservices, containers became abundant. However, developers encountered a challenge when dealing with large platforms and a multitude of containers. Managing them became a complex task.

You cannot install all containers for a single service on a single server. Instead, you have to distribute them across multiple servers, considering how they will communicate and which ports they will use. Security and scalability need to be ensured throughout this process.

Several solutions emerged to address container orchestration, such as Docker Swarm, Docker Compose, Nomad, and ICS. These attempts aimed to create centralized entities to manage services and containers.

Then, Kubernetes came into the picture—a collection of logic that allows you to take a group of servers and combine them into a cluster. You can then describe all your services and Docker containers in configuration files and specify where they should be deployed programmatically.

The advantage of using Kubernetes is that you can make changes to the configuration files rather than manually altering servers. When an update is needed, you modify the configuration, and Kubernetes takes care of updating the infrastructure accordingly.

Image source: Quick start Kubernetes

Why Kubernetes Became a Separate Service Provided by Gart

Over time, Kubernetes became a highly popular platform for container orchestration, leading to the development of numerous services and approaches that could be integrated with Kubernetes. These services, often in the form of plugins and additional solutions, addressed various tasks such as traffic routing, secure port opening and closing, and performance scaling.

Kubernetes, with its advanced features and capabilities, evolved into a powerful but complex technology, requiring a significant learning curve. To manage these complexities, Kubernetes introduced various abstractions such as Deployments, StatefulSets, and DaemonSets, representing different ways of launching containers based on specific principles. For example, using the DaemonSet mode means having one container running on each of the five nodes in the cluster, serving as a particular deployment strategy.

Leading cloud providers, such as Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP), and others, offer Kubernetes as a managed service. Each cloud provider has its own implementation, but the core principle remains the same—providing a managed Kubernetes control plane with automated updates, monitoring, and scalability features.

For on-premises deployments or private data centers, companies can still install Kubernetes on their own servers (bare-metal approach), but this requires more manual management and upkeep of the underlying hardware.

However, this level of complexity made managing Kubernetes without specific knowledge and expertise almost impossible. Deploying Kubernetes for a startup that does not require such sophistication would be like using a sledgehammer to crack a nut. For many small-scale applications, the orchestration overhead would far exceed the complexity of the entire solution. Kubernetes is better suited for enterprise-level scenarios and more extensive infrastructures.

Regardless of the deployment scenario, working with Kubernetes demands significant expertise. It requires in-depth knowledge of Kubernetes concepts, best practices, and practical implementation strategies. Kubernetes expertise has become highly sought after. That’s why today, the Gart company offers Kubernetes services.

Need help with Kubernetes?

Contact Gart for managed Kubernetes clusters, consulting, and migration.

Use Cases of Kubernetes as a Service

Kubernetes as a Service offers a versatile and powerful platform for various use cases, including microservices and containerized applications, continuous integration/continuous deployment, big data processing, and Internet of Things applications. By providing automated management, scalability, and reliability, KaaS empowers businesses to accelerate development, improve application performance, and efficiently manage complex workloads in the cloud-native era.

Microservices and Containerized Applications

Kubernetes as a Service is an ideal fit for managing microservices and containerized applications. Microservices architecture breaks down applications into smaller, independent services, making it easier to develop, deploy, and scale each component separately. KaaS simplifies the orchestration and management of these microservices, ensuring seamless communication, scaling, and load balancing across the entire application.

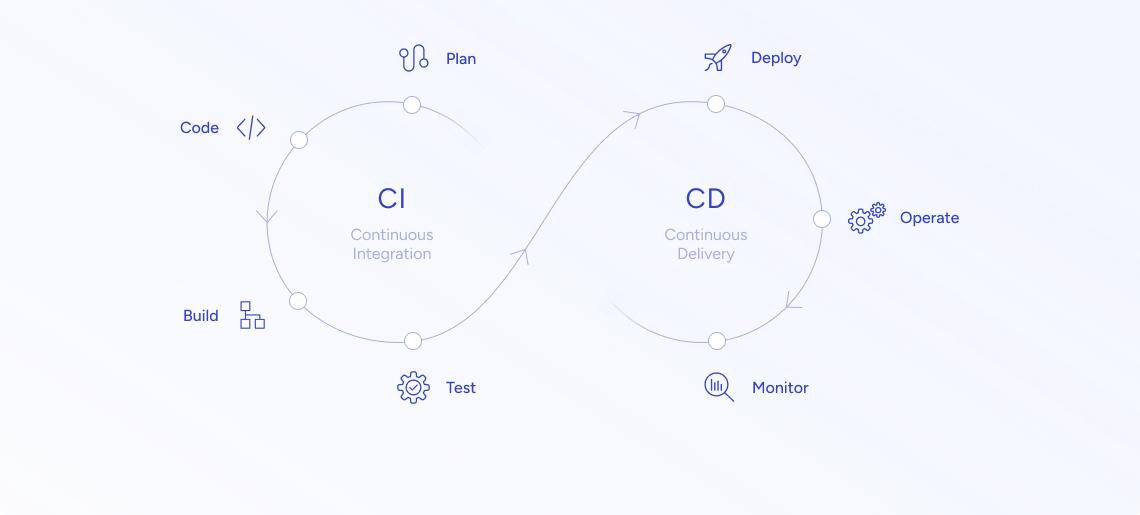

Continuous Integration/Continuous Deployment (CI/CD)

Kubernetes as a Service streamlines the CI/CD process for software development teams. With KaaS, developers can automate the deployment of containerized applications through the various stages of the development pipeline. This includes automated testing, code integration, and continuous delivery to production environments. KaaS ensures consistent and reliable deployments, enabling faster release cycles and reducing time-to-market.

Big Data Processing and Analytics

Kubernetes as a Service is well-suited for big data processing and analytics workloads. Big data applications often require distributed processing and scalability. KaaS enables businesses to deploy and manage big data processing frameworks, such as Apache Spark, Apache Hadoop, or Apache Flink, in a containerized environment. Kubernetes handles the scaling and resource management, ensuring efficient utilization of computing resources for processing large datasets.

Simplify your app management with our seamless Kubernetes setup. Enjoy enhanced security, easy scalability, and expert support.

Internet of Things (IoT) Applications

IoT applications generate a massive amount of data from various devices and sensors. Kubernetes as a Service offers a flexible and scalable platform to manage IoT applications efficiently. It allows organizations to deploy edge nodes and gateways close to IoT devices, enabling real-time data processing and analysis at the edge. KaaS ensures seamless communication between edge and cloud-based components, providing a robust and reliable infrastructure for IoT deployments.

IoT Device Management Using Kubernetes Case Study

In this real-life case study, discover how Gart implemented an innovative Internet of Things (IoT) device management system using Kubernetes. By leveraging the power of Kubernetes as an orchestrator, Gart efficiently deployed, scaled, and managed a network of IoT devices seamlessly. Learn how Kubernetes provided the flexibility and reliability required for handling the massive influx of data generated by the IoT devices. This successful implementation showcases how Kubernetes can empower businesses to efficiently manage complex IoT infrastructures, ensuring real-time data processing and analysis for enhanced performance and scalability.

Kubernetes offers a powerful, declarative approach to manage containerized applications, enabling developers to focus on defining the desired state of their system and letting Kubernetes handle the orchestration, scaling, and deployment automatically.

Kubernetes as a Service offers a gateway to efficient, streamlined application management. By abstracting complexities, automating tasks, and enhancing scalability, KaaS empowers businesses to focus on innovation.

Kubernetes – Your App’s Best Friend

Ever wish you had a superhero for managing your apps? Say hello to Kubernetes – your app’s sidekick that makes everything run like clockwork.

Managing the App Circus

Kubernetes is like the ringmaster of a circus, but for your apps. It keeps them organized, ensures they perform their best, and steps in if anything goes wrong. No more app chaos!

Auto-Scaling: App Flexibility

Imagine an app that can magically grow when there’s a crowd and shrink when it’s quiet. That’s what Kubernetes does with auto-scaling. Your app adjusts itself to meet the demand, so your customers always get a seamless experience.

Load Balancing: Fair Share for All

Picture your app as a cake – everyone wants a slice. Kubernetes slices the cake evenly and serves it up. It directs traffic to different parts of your app, keeping everything balanced and running smoothly.

Self-Healing: App First Aid

If an app crashes, Kubernetes plays doctor. It detects the issue, replaces the unhealthy parts, and gets your app back on its feet. It’s like having a team of medics for your software.

So, why is this important for your business? Because Kubernetes means your apps are always on point, no matter how busy things get. It’s like having a backstage crew that ensures every performance is a hit.

Unlock the Power of Kubernetes Today! Explore Our Expert Kubernetes Services and Elevate Your Container Orchestration Game. Contact Us for a Consultation and Seamless Deployment.