In my experience optimizing cloud costs, especially on AWS, I often find that many quick wins are in the "easy to implement - good savings potential" quadrant.

That's why I've decided to share some straightforward methods for optimizing expenses on AWS that will help you save over 80% of your budget.

Choose reserved instances

Potential Savings: Up to 72%

Choosing reserved instances involves committing to a subscription, even partially, and offers a discount for long-term rentals of one to three years. While planning for a year is often deemed long-term for many companies, especially in Ukraine, reserving resources for 1-3 years carries risks but comes with the reward of a maximum discount of up to 72%.

You can check all the current pricing details on the official website - Amazon EC2 Reserved Instances

Purchase Savings Plans (Instead of On-Demand)

Potential Savings: Up to 72%

There are three types of Savings Plans: Compute Savings Plans, EC2 Instance Savings Plans, and SageMaker Savings Plans.

AWS Compute Savings Plan is an Amazon Web Services option that allows users to receive discounts on computational resources in exchange for committing to using a specific volume of resources over a defined period (usually one or three years). This plan offers flexibility in utilizing various computing services, such as EC2, Fargate, and Lambda, at reduced prices.

AWS EC2 Instance Savings Plan is a program from Amazon Web Services that offers discounted rates exclusively for EC2 instances. The discount applies to a specific instance family in a chosen region, regardless of instance size, operating system, or tenancy.

AWS SageMaker Savings Plan allows users to get discounts on SageMaker usage in exchange for committing to using a specific volume of computational resources over a defined period (usually one or three years).

Savings Plans are available for one- or three-year terms with all upfront, partial upfront, or no upfront payment. EC2 Instance Savings Plans offer the largest discount, up to 72%, but apply exclusively to EC2 instances; Compute Savings Plans are more flexible and save up to 66%.
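A commitment only pays off if you actually use the capacity you committed to. The sketch below illustrates that trade-off under simplifying assumptions: a single flat On-Demand rate, a flat discount, and 730 hours per month. The function names and the sample figures are hypothetical, not AWS pricing.

```python
HOURS_PER_MONTH = 730  # common approximation for one month


def commitment_savings(on_demand_hourly, discount, utilization, hours=HOURS_PER_MONTH):
    """Monthly savings (USD) of a commitment compared with pure On-Demand.

    discount    -- fractional discount of the committed rate (0.72 means 72%)
    utilization -- fraction of the committed hours you really use (0.0 to 1.0)
    A negative result means the commitment costs more than On-Demand.
    """
    committed_cost = on_demand_hourly * (1 - discount) * hours  # paid whether used or not
    on_demand_cost = on_demand_hourly * utilization * hours     # paid only for hours used
    return on_demand_cost - committed_cost


def break_even_utilization(discount):
    """Utilization below which the commitment is more expensive than On-Demand."""
    return 1 - discount


if __name__ == "__main__":
    # Hypothetical instance at $0.10/hour with a 50% discount, used half the time.
    print(commitment_savings(0.10, 0.50, 0.5))
    print(break_even_utilization(0.72))
```

With a 72% discount the break-even point is roughly 28% utilization, so the risk is mostly about workloads that disappear entirely during the term.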

Utilize Various Storage Classes for S3 (Including Intelligent Tier)

Potential Savings: 40% to 95%

AWS offers numerous options for storing data at different access levels. For instance, S3 Intelligent-Tiering automatically stores objects at three access levels: one tier optimized for frequent access, 40% cheaper tier optimized for infrequent access, and 68% cheaper tier optimized for rarely accessed data (e.g., archives).

S3 Intelligent-Tiering has the same price per 1 GB as S3 Standard — $0.023 USD.

However, the key advantage of Intelligent Tiering is its ability to automatically move objects that haven't been accessed for a specific period to lower access tiers.

Intelligent-Tiering moves an object to the Infrequent Access tier after 30 consecutive days without access and to the Archive Instant Access tier after 90 days. If you enable the optional archive tiers, objects can move further after a minimum of 90 and 180 days. This can save companies from 40% to 95%. For certain objects (e.g., archives), you may pay only $0.0125 USD per 1 GB or $0.004 per 1 GB compared to the standard price of $0.023 USD.
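To get a feel for these numbers, here is a rough sketch that prices a bucket by how its data is spread across the three tiers. It uses only the per-GB prices quoted above and ignores request charges and the per-object monitoring fee, so treat the result as an estimate. The tier names and function name are made up for this example.

```python
# Per-GB monthly prices quoted in the text (USD); check current pricing before relying on them.
PRICE_PER_GB = {
    "frequent": 0.023,
    "infrequent": 0.0125,
    "archive_instant": 0.004,
}


def monthly_storage_cost(gb_by_tier):
    """Sum the storage cost for a mapping of tier name -> gigabytes stored."""
    return sum(PRICE_PER_GB[tier] * gb for tier, gb in gb_by_tier.items())


if __name__ == "__main__":
    all_standard = monthly_storage_cost({"frequent": 1000})
    tiered = monthly_storage_cost(
        {"frequent": 200, "infrequent": 300, "archive_instant": 500}
    )
    print(f"all frequent: ${all_standard:.2f}, tiered: ${tiered:.2f}")
    print(f"saving: {1 - tiered / all_standard:.0%}")
```

In this hypothetical split, 1 TB costs about $10.35 instead of $23.00 per month, a saving of about 55%.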

Information regarding the pricing of Amazon S3

AWS Compute Optimizer

Potential Savings: quite significant

The AWS Compute Optimizer dashboard is a tool that lets users assess and prioritize optimization opportunities for their AWS resources.

The dashboard provides detailed information about potential cost savings and performance improvements, as the recommendations are based on an analysis of resource specifications and usage metrics.

The dashboard covers various types of resources, such as EC2 instances, Auto Scaling groups, Lambda functions, Amazon ECS services on Fargate, and Amazon EBS volumes.

For example, AWS Compute Optimizer reports underutilized or overutilized resources allocated for ECS Fargate services or Lambda functions. Regularly keeping an eye on this dashboard can help you make informed decisions to optimize costs and enhance performance.

Use Fargate in EKS for underutilized EC2 nodes

If your EKS nodes aren't fully used most of the time, it makes sense to consider using Fargate profiles. With AWS Fargate, you pay for the specific amount of memory and CPU your pod needs, rather than paying for an entire EC2 virtual machine.

For example, let's say you have an application deployed in a Kubernetes cluster managed by Amazon EKS (Elastic Kubernetes Service). The application experiences variable traffic, with peak loads during specific hours of the day or week (like a marketplace or an online store), and you want to optimize infrastructure costs. To address this, create a Fargate profile that defines which pods should run on Fargate, then configure the Kubernetes Horizontal Pod Autoscaler (HPA) to automatically scale the number of pod replicas based on their resource usage (such as CPU or memory usage).
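The scaling decision the HPA makes can be sketched in a few lines. Kubernetes documents the core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The version below is a simplified illustration: it leaves out the tolerance band and stabilization window, and the minimum and maximum replica values are hypothetical defaults.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Simplified HPA rule: scale replicas in proportion to metric / target.

    current_metric and target_metric use the same unit, for example
    average CPU utilization in percent.
    """
    desired = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds, as the autoscaler does.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods at 90% CPU with a 60% target.
    print(desired_replicas(4, 90, 60))
    # The same 4 pods at 20% CPU during a quiet period.
    print(desired_replicas(4, 20, 60))
```

On Fargate each removed replica stops being billed, which is why the combination suits spiky traffic.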

Manage Workload Across Different Regions

Potential Savings: significant in most cases

When handling workload across multiple regions, it's crucial to consider various aspects such as cost allocation tags, budgets, notifications, and data remediation.

Cost Allocation Tags: Classify and track expenses based on different labels like program, environment, team, or project.

AWS Budgets: Define spending thresholds and receive notifications when expenses exceed set limits. Create budgets specifically for your workload or allocate budgets to specific services or cost allocation tags.

Notifications: Set up alerts when expenses approach or surpass predefined thresholds. Timely notifications help take actions to optimize costs and prevent overspending.

Remediation: Implement mechanisms to rectify expenses based on your workload requirements. This may involve automated actions or manual interventions to address cost-related issues.

Regional Variances: Consider regional differences in pricing and data transfer costs when designing workload architectures.

Reserved Instances and Savings Plans: Utilize reserved instances or savings plans to achieve cost savings.

AWS Cost Explorer: Use this tool for visualizing and analyzing your expenses. Cost Explorer provides insights into your usage and spending trends, enabling you to identify areas of high costs and potential opportunities for cost savings.

Transition to Graviton (ARM)

Potential Savings: Up to 30%

Graviton utilizes Amazon's server-grade ARM processors developed in-house. The new processors and instances prove beneficial for various applications, including high-performance computing, batch processing, electronic design automation (EDA), multimedia encoding, scientific modeling, distributed analytics, and machine learning inference on processor-based systems.

The processor family is based on ARM architecture, likely functioning as a system on a chip (SoC). This translates to lower power consumption costs while still offering satisfactory performance for the majority of clients. Key advantages of AWS Graviton include cost reduction, low latency, improved scalability, enhanced availability, and security.

Spot Instances Instead of On-Demand

Potential Savings: Up to 90%

Spot Instances let you use spare EC2 capacity at a discount. When Amazon has surplus resources lying idle, you can request them and optionally set the maximum price you're willing to pay. The catch is that if there are no available resources, your requested capacity won't be granted.

However, there's a risk that if AWS needs the capacity back, or demand surges and the Spot price exceeds your maximum price, your Spot Instance will be interrupted, typically with a two-minute warning.

Spot Instances used to operate like an auction. Today the Spot price is set by AWS and changes gradually with supply and demand, so it is still not fixed. You pay the current Spot price, not your maximum. If we are willing to pay $0.1 per hour and the Spot price is $0.05, we will pay exactly $0.05.
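The pricing rule from the example above fits in one small function: you are charged the current Spot price as long as it stays at or below your maximum, and you get no capacity otherwise. This is only an illustration of the arithmetic. The function name is made up, and capacity-driven interruptions are not modeled.

```python
def spot_hourly_charge(max_price, spot_price):
    """Return the hourly charge in USD, or None if the instance would not run.

    The instance runs only while the current Spot price does not exceed
    the maximum price you set; you pay the Spot price, not your maximum.
    """
    if spot_price > max_price:
        return None  # request not fulfilled, or running instance interrupted
    return spot_price


if __name__ == "__main__":
    print(spot_hourly_charge(0.10, 0.05))  # Spot price below the maximum
    print(spot_hourly_charge(0.10, 0.12))  # Spot price above the maximum
```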

Use Interface Endpoints or Gateway Endpoints to save on traffic costs (S3, SQS, DynamoDB, etc.)

Potential Savings: Depends on the workload

Interface Endpoints are built on AWS PrivateLink and give your VPC a private network path to AWS services without going through the internet. Gateway Endpoints do the same for Amazon S3 and Amazon DynamoDB through route table entries. Either way, the traffic no longer has to pass through a NAT gateway, so you avoid its per-gigabyte data processing charge.

Using Interface Endpoints or Gateway Endpoints can help save on traffic costs when accessing services like Amazon S3, Amazon SQS, and Amazon DynamoDB from your Amazon Virtual Private Cloud (VPC).

Key points:

Amazon S3: A Gateway Endpoint for S3 carries no additional charge and lets you privately access S3 buckets from your VPC. An Interface Endpoint for S3 is also available, but it is billed per hour and per gigabyte processed.

Amazon SQS: An Interface Endpoint for SQS enables private interaction with SQS queues from within your VPC. It is billed per hour and per gigabyte, so it pays off when the NAT gateway charges it replaces are higher.

Amazon DynamoDB: A Gateway Endpoint for DynamoDB lets you access DynamoDB tables from your VPC at no additional charge.

Additionally, Interface Endpoints expose AWS services on private IP addresses within your VPC, which removes the need to route that traffic through an internet gateway or NAT gateway.

Optimize Image Sizes for Faster Loading

Potential Savings: Depends on the workload

Optimizing image sizes can help you save in various ways.

Reduce ECR Costs: By storing smaller images, you can cut down expenses on Amazon Elastic Container Registry (ECR).

Minimize EBS Volumes on EKS Nodes: Keeping smaller volumes on Amazon Elastic Kubernetes Service (EKS) nodes helps in cost reduction.

Accelerate Container Launch Times: Faster container launch times ultimately lead to quicker task execution.

Optimization Methods:

Use the Right Image: Employ the most efficient image for your task; for instance, Alpine may be sufficient in certain scenarios.

Remove Unnecessary Data: Trim excess data and packages from the image.

Multi-Stage Image Builds: Utilize multi-stage image builds by employing multiple FROM instructions.

Use .dockerignore: Prevent the addition of unnecessary files by employing a .dockerignore file.

Reduce Instruction Count: Minimize the number of instructions, as each RUN, COPY, or ADD instruction adds a layer to the image. Group shell commands into a single RUN instruction using the && operator.

Layer Ordering: Move frequently changing layers to the end of the Dockerfile so that earlier layers stay cached between builds.

These optimization methods can contribute to faster image loading, reduced storage costs, and improved overall performance in containerized environments.

Use Load Balancers to Save on IP Address Costs

Potential Savings: depends on the workload

Since February 2024, Amazon has billed for each public IPv4 address. Employing a load balancer can help save on IP address costs by putting several services behind a shared IP address, multiplexing traffic between ports, applying load balancing algorithms, and handling SSL/TLS in one place.

By consolidating multiple services and instances under a single IP address, you can achieve cost savings while effectively managing incoming traffic.

Optimize Database Services for Higher Performance (MySQL, PostgreSQL, etc.)

Potential Savings: depends on the workload

AWS provides default settings for databases that are suitable for average workloads. If a significant portion of your monthly bill is related to AWS RDS, it's worth paying attention to parameter settings related to databases.

Some of the most effective settings may include:

Use Database-Optimized Instances: For example, instances in the R5 or X1 class are optimized for working with databases.

Choose Storage Type: General Purpose SSD (gp2) is typically cheaper than Provisioned IOPS SSD (io1/io2).

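AWS RDS Storage Auto Scaling: Automatically increase storage size as demand grows, so you can start with a smaller allocation. Note that allocated storage is not scaled back down automatically.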

If you can optimize the database workload, it may allow you to use smaller instance sizes without compromising performance.

Regularly Update Instances for Better Performance and Lower Costs

Potential Savings: Minor

As Amazon deploys new servers in its data centers to provide resources for running more instances for customers, these new servers come with the latest equipment, typically better than previous generations. Usually, the latest two to three generations are available. Make sure you update regularly to effectively utilize these resources.

Take the general-purpose M-family instances, for example, and compare how the price changes from one generation to the next. Regular updates can ensure that you are using resources efficiently.

| Instance | Generation | Description | On-Demand Price (USD/hour) |
| --- | --- | --- | --- |
| m6g.large | 6th | Instances based on ARM processors offer improved performance and energy efficiency. | $0.077 |
| m5.large | 5th | General-purpose instances with a balanced combination of CPU and memory, designed to support high-speed network access. | $0.096 |
| m4.large | 4th | A good balance between CPU, memory, and network resources. | $0.10 |
| m3.large | 3rd | One of the previous generations, less efficient than m5 and m4. | Not available |
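Using the prices from the table above, a quick calculation shows what a generation upgrade is worth per month. This is a minimal sketch that assumes an instance running around the clock (730 hours per month); the function name is hypothetical.

```python
HOURS_PER_MONTH = 730  # common approximation for one month


def monthly_saving(old_hourly, new_hourly, instances=1):
    """Monthly saving in USD from moving always-on instances to a cheaper type."""
    return (old_hourly - new_hourly) * HOURS_PER_MONTH * instances


if __name__ == "__main__":
    # m5.large ($0.096/hour) to m6g.large ($0.077/hour), prices from the table.
    per_instance = monthly_saving(0.096, 0.077)
    fleet = monthly_saving(0.096, 0.077, instances=20)
    print(f"per instance: ${per_instance:.2f}, fleet of 20: ${fleet:.2f}")
```

That works out to roughly $13.87 per instance per month, or about 20% of the m5.large price. Keep in mind that m6g is ARM-based, so the workload has to run on Graviton.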

Use RDS Proxy to reduce the load on RDS

Potential for savings: Low

RDS Proxy is used to relieve the load on servers and RDS databases by reusing existing connections instead of creating new ones. Additionally, RDS Proxy shortens failover when a standby instance is promoted to primary.

Imagine you have a web application that uses Amazon RDS to manage the database. This application experiences variable traffic intensity, and during peak periods, such as advertising campaigns or special events, it undergoes high database load due to a large number of simultaneous requests.

During peak loads, the RDS database may encounter performance and availability issues due to the high number of concurrent connections and queries. This can lead to delays in responses or even service unavailability.

RDS Proxy manages connection pools to the database, significantly reducing the number of direct connections to the database itself.

By efficiently managing connections, RDS Proxy provides higher availability and stability, especially during peak periods.

Using RDS Proxy reduces the load on RDS, and consequently, the costs are reduced too.

Define the storage policy in CloudWatch

Potential for savings: depends on the workload, could be significant.

The storage policy in Amazon CloudWatch determines how long data should be retained in CloudWatch Logs before it is automatically deleted.

Setting the right storage policy is crucial for efficient data management and cost optimization. While the "Never expire" option is available (and is the default for new log groups), it is generally not recommended for most use cases due to potential costs and data management issues.

Typically, best practice involves defining a specific retention period based on your organization's requirements, compliance policies, and needs.

Avoid using an undefined data retention period unless there is a specific reason. By doing this, you are already saving on costs.
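CloudWatch Logs accepts only a fixed set of retention values, so a policy such as "keep logs for 45 days" has to be rounded to an allowed one. The helper below is a sketch of that rounding. The list of allowed values is written from memory of the PutRetentionPolicy documentation and should be verified against the current API reference; the function name is hypothetical.

```python
import bisect

# Assumed set of retention periods (in days) accepted by CloudWatch Logs.
# Verify against the current PutRetentionPolicy API reference before use.
ALLOWED_RETENTION_DAYS = [
    1, 3, 5, 7, 14, 30, 60, 90, 120, 150, 180, 365, 400, 545, 731,
    1096, 1827, 2192, 2557, 2922, 3288, 3653,
]


def nearest_allowed_retention(requested_days):
    """Round a requested retention period up to the next allowed value."""
    idx = bisect.bisect_left(ALLOWED_RETENTION_DAYS, requested_days)
    if idx == len(ALLOWED_RETENTION_DAYS):
        return ALLOWED_RETENTION_DAYS[-1]  # cap at the longest allowed period
    return ALLOWED_RETENTION_DAYS[idx]


if __name__ == "__main__":
    print(nearest_allowed_retention(45))
```

The result can then be passed as `retentionInDays` to the boto3 CloudWatch Logs client method `put_retention_policy`, together with the `logGroupName` of the group you want to limit.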

Configure AWS Config to monitor only the events you need

Potential for savings: depends on the workload

AWS Config allows you to track and record changes to AWS resources, helping you maintain compliance, security, and governance. AWS Config provides compliance reports based on rules you define. You can access these reports on the AWS Config dashboard to see the status of tracked resources.

You can set up Amazon SNS notifications to receive alerts when AWS Config detects non-compliance with your defined rules. This can help you take immediate action to address the issue. By configuring AWS Config with specific rules and resources you need to monitor, you can efficiently manage your AWS environment, maintain compliance requirements, and avoid paying for rules you don't need.

Use lifecycle policies for S3 and ECR

Potential for savings: depends on the workload

S3 allows you to configure automatic deletion of individual objects or groups of objects based on specified conditions and schedules. You can set up lifecycle policies for each bucket. By creating transition rules with S3 Lifecycle, you can move objects to cheaper storage classes as they age and reduce storage costs.

Transition and expiration rules are defined by object age in days. You can apply a policy to the entire S3 bucket or to specific prefixes. Lifecycle transitions are billed per request, so factor that into the expected savings. By configuring a lifecycle policy for ECR, you can avoid unnecessary expenses on storing Docker images that you no longer need.
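As an illustration, the sketch below builds a lifecycle configuration in the dictionary shape that the boto3 S3 client method `put_bucket_lifecycle_configuration` accepts as its `LifecycleConfiguration` argument. The prefix, the day counts, and the helper name are assumptions for the example; the code only builds the structure and does not call AWS.

```python
def build_lifecycle_rule(prefix, transition_days, expire_days,
                         storage_class="STANDARD_IA"):
    """Build one S3 lifecycle rule: transition after N days, delete after M days."""
    if expire_days <= transition_days:
        raise ValueError("expire_days must be greater than transition_days")
    return {
        "ID": f"tier-and-expire-{prefix.strip('/') or 'all'}",
        "Filter": {"Prefix": prefix},  # an empty prefix applies to the whole bucket
        "Status": "Enabled",
        "Transitions": [{"Days": transition_days, "StorageClass": storage_class}],
        "Expiration": {"Days": expire_days},
    }


# Hypothetical policy: move logs to Standard-IA after 30 days, delete after a year.
lifecycle_configuration = {"Rules": [build_lifecycle_rule("logs/", 30, 365)]}

if __name__ == "__main__":
    print(lifecycle_configuration)
```

Keeping the rule in code makes it easy to review and to apply the same policy to several buckets.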

Switch to using GP3 storage type for EBS

Potential for savings: 20%

By default, AWS creates gp2 EBS volumes, but it's almost always preferable to choose gp3 — the latest generation of EBS volumes, which provides more IOPS by default and is cheaper.

For example, in the us-east-1 region, the price for a gp2 volume is $0.10 per gigabyte-month of provisioned storage, while for gp3, it's $0.08 per gigabyte-month. If you have 5 TB of EBS volumes in your account, you can save about $100 per month by simply switching from gp2 to gp3.
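The arithmetic behind that estimate is shown below. It is a rough sketch that counts 1 TB as 1,000 GB and assumes the baseline gp3 performance is sufficient, since additional provisioned IOPS or throughput on gp3 are billed separately. The function name is hypothetical.

```python
def gp3_monthly_saving(total_gb, gp2_price=0.10, gp3_price=0.08):
    """Monthly saving in USD from moving provisioned storage from gp2 to gp3.

    Default prices are the us-east-1 per-GB-month figures quoted in the text.
    """
    return total_gb * (gp2_price - gp3_price)


if __name__ == "__main__":
    saving = gp3_monthly_saving(5000)  # 5 TB counted as 5,000 GB
    print(f"${saving:.2f} per month")
```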

Switch the format of public IP addresses from IPv4 to IPv6

Potential for savings: depending on the workload

Since February 1, 2024, AWS has charged for each public IPv4 address at a rate of $0.005 per IP address per hour. For example, 100 public IP addresses on EC2 x $0.005 per public IP address per hour x 730 hours = $365.00 per month.
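The same calculation as a reusable snippet, assuming the $0.005 hourly rate quoted above and 730 hours per month. The function name is made up for the example.

```python
HOURS_PER_MONTH = 730  # common approximation for one month


def public_ipv4_monthly_cost(address_count, hourly_rate=0.005):
    """Monthly charge in USD for a number of public IPv4 addresses."""
    return address_count * hourly_rate * HOURS_PER_MONTH


if __name__ == "__main__":
    print(public_ipv4_monthly_cost(100))
```

Running it with your own address count shows quickly whether moving services behind a load balancer or to IPv6 is worth the effort.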

While this figure might not seem huge (without tying it to the company's capabilities), it can add up to significant network costs. Thus, the optimal time to transition to IPv6 was a couple of years ago or now.

Here are some resources about this recent update that will guide you on how to use IPv6 with widely-used services — AWS Public IPv4 Address Charge.

Collaborate with AWS professionals and partners for expertise and discounts

Potential for savings: ~5% of the contract amount through discounts.

AWS Partner Network (APN) Discounts: Companies that are members of the AWS Partner Network (APN) can access special discounts, which they can pass on to their clients. Partners reaching a certain level in the APN program often have access to better pricing offers.

Custom Pricing Agreements: Some AWS partners may have the opportunity to negotiate special pricing agreements with AWS, enabling them to offer unique discounts to their clients. This can be particularly relevant for companies involved in consulting or system integration.

Reseller Discounts: As resellers of AWS services, partners can purchase services at wholesale prices and sell them to clients with a markup, still offering a discount from standard AWS prices. They may also provide bundled offerings that include AWS services and their own additional services.

Credit Programs: AWS frequently offers credit programs or vouchers that partners can pass on to their clients. These could be promo codes or discounts for a specific period.

Seek assistance from AWS professionals and partners. Often, this is more cost-effective than purchasing and configuring everything independently. Given the intricacies of cloud space optimization, expertise in this matter can save you tens or hundreds of thousands of dollars.

More valuable tips for optimizing costs and improving efficiency in AWS environments:

Scheduled TurnOff/TurnOn for NonProd environments: If the Development team is in the same timezone, significant savings can be achieved by, for example, scaling the AutoScaling group of instances/clusters/RDS to zero during the night and weekends when services are not actively used.

Move static content to an S3 Bucket & CloudFront: To prevent service charges for static content, consider utilizing Amazon S3 for storing static files and CloudFront for content delivery.

Use API Gateway/Lambda/Lambda Edge where possible: In such setups, you only pay for the actual usage of the service. This is especially noticeable in NonProd environments where resources are often underutilized.

If your CI/CD agents are on EC2, migrate to CodeBuild: AWS CodeBuild can be a more cost-effective and scalable solution for your continuous integration and delivery needs.

CloudWatch covers the needs of 99% of projects for Monitoring and Logging: Avoid using third-party solutions if AWS CloudWatch meets your requirements. It provides comprehensive monitoring and logging capabilities for most projects.
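For the scheduled turn-off tip above, the saving is easy to estimate: it is the share of the week during which the environment is switched off. The sketch below assumes cost scales linearly with running hours, which ignores storage and other charges that continue while instances are stopped. The function name and schedule are hypothetical.

```python
HOURS_PER_WEEK = 7 * 24


def schedule_saving_fraction(hours_per_day, days_per_week):
    """Fraction of compute hours saved by running only on a fixed schedule."""
    running_hours = hours_per_day * days_per_week
    return 1 - running_hours / HOURS_PER_WEEK


if __name__ == "__main__":
    # NonProd environment running 12 hours a day, Monday to Friday.
    print(f"{schedule_saving_fraction(12, 5):.0%}")
```

A 12-hour, five-day schedule keeps the environment up for 60 of 168 hours, which cuts compute hours by roughly 64%.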

Feel free to reach out to me or other specialists for an audit, a comprehensive optimization package, or just advice.

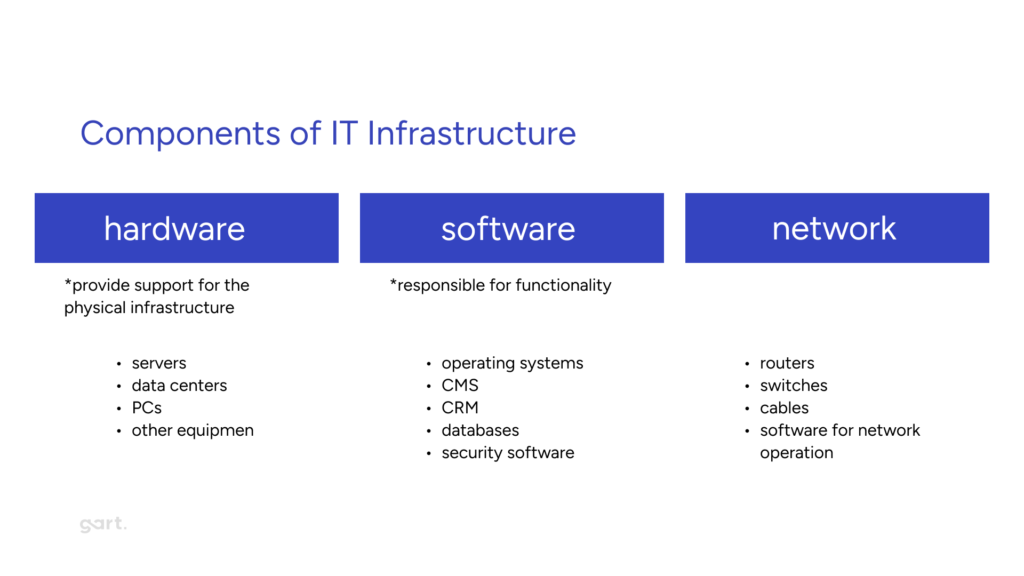

Speaking of the benefits of cloud deployment, simplicity of use, flexibility, scalability, and reliability are often mentioned. However, migration is not just about moving infrastructure to the cloud, it is also about switching to a different cost model.

To understand how profitable this is, it is necessary to calculate the current costs of local infrastructure, the planned costs of the cloud, as well as the cost of migration. In this article, we will tell you how to correctly calculate the costs of transferring infrastructure and not overpay for services after migration.

Key Questions to Consider Before Cloud Migration

Here are some questions businesses should ask themselves before migrating to the cloud:

Is downtime critical for the company? There is no need for the cloud if the business can wait a day or two for server and other component recovery/replacement without major consequences.

Has the equipment been purchased? How long ago? Cloud migration can be delayed if the servers are less than three years old and the company does not plan to grow rapidly.

Is a cloud solution necessary? A cloud solution is necessary if the project is new and the equipment is not yet available. Or if it is already working, the infrastructure is more than three years old, and one to four hours of downtime is critical for the company.

Other questions to consider:

What are the business goals for migrating to the cloud?

What are the specific applications and workloads that will be migrated?

What are the security and compliance requirements for the cloud environment?

What are the costs and benefits of migrating to the cloud?

What is the migration timeline and plan?

Who will be responsible for managing the cloud environment after migration?

Local Infrastructure Costs

To determine the potential financial benefits of migrating to the cloud, it is necessary to calculate the current costs of the local infrastructure as accurately as possible and obtain data on its performance. Only then will it be possible to determine the correct configuration of the cloud infrastructure and compare the current local costs with the planned costs in the cloud.

Costs are divided into direct and indirect.

Direct costs:

Capital expenditures:

Purchase of servers, storage systems, network equipment, etc.

Software licenses.

Data center construction or rental costs.

Operational expenditures:

Electricity costs.

Cooling costs.

Maintenance and repair costs.

IT staff salaries.

To calculate the total cost of local infrastructure, it is necessary to consider all of these factors. Once the total cost is known, it can be compared to the cost of cloud computing to determine whether migration is financially viable.

Direct Costs

Direct costs can be broken down into six main categories:

IT Equipment Acquisition: This includes the purchase of servers, storage systems, network equipment, and other IT hardware.

Data Center Colocation or Server Room Maintenance: This includes the cost of renting space in a data center or maintaining your own server room.

Software Licensing and Renewals: This includes the cost of purchasing and renewing licenses for operating systems, applications, and other software.

Hardware Setup and Maintenance: This includes the cost of installing, configuring, and maintaining IT hardware.

Software Updates and Equipment Service Contracts: This includes the cost of purchasing software updates and service contracts for IT hardware.

IT Personnel: This includes the cost of salaries, taxes, health insurance, vacation, bonuses, and office equipment for IT staff.

It is also important to consider the costs of HR, procurement, and accounting departments. These departments also spend some of their resources on ensuring the functionality of the corporate IT infrastructure.

Indirect Costs

Indirect costs are not as obvious as direct costs and can be more difficult to calculate. The main part of indirect costs is related to downtime, customer dissatisfaction, damage to business reputation, and lost profits. An example of indirect costs would be a corporate website crashing or freezing on Black Friday.

One way to calculate indirect costs is to review incident and degradation reports to determine how often and for how long the equipment fails. To calculate the amount of damage, it is necessary to multiply the downtime by the average hourly rate of employees.

Indirect costs:

Downtime costs:

Lost productivity due to system outages.

Revenue losses due to unavailability of applications or services.

Security risks:

Data breaches.

Compliance violations.

Business agility:

Inability to quickly scale up or down resources to meet changing business needs.
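The downtime estimate described earlier (downtime multiplied by the average hourly rate of employees) can be sketched as follows. The number of affected employees is an added assumption, since an outage usually idles more than one person. All figures are hypothetical, and lost revenue or reputational damage would come on top.

```python
def downtime_cost(incident_hours, affected_employees, avg_hourly_rate):
    """Estimate lost-productivity cost in USD from a list of incident durations.

    incident_hours     -- duration of each incident in hours, from incident reports
    affected_employees -- how many people could not work during an outage (assumption)
    avg_hourly_rate    -- average fully loaded hourly cost per employee
    """
    total_hours = sum(incident_hours)
    return total_hours * affected_employees * avg_hourly_rate


if __name__ == "__main__":
    # Three incidents in a year, 20 people affected, $30 per hour.
    print(downtime_cost([2, 0.5, 1.5], 20, 30))
```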

Cloud Migration Costs

Several key factors influence the cost of cloud migration. These include:

Defining migration goals: It is important to clearly define the goals of the migration before starting the process. This will help to determine the scope of the migration and the resources required.

Identifying key pain points: Once the goals are defined, it is important to identify the key pain points that the migration is intended to address. This will help to prioritize the migration tasks and focus on the areas that will have the biggest impact.

Data volume to be transferred: The amount of data that needs to be migrated will have a significant impact on the cost of the migration. It is important to accurately estimate the amount of data to be transferred and to choose a migration method that is appropriate for the volume of data.

Cloud service model: The cost of cloud migration will also vary depending on the cloud service model chosen. Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS) all have different pricing models.

Provider selection: The cost of cloud migration will also vary depending on the cloud provider chosen. It is important to compare the pricing and features of different cloud providers before making a decision.

It is also necessary to assess the readiness of local applications for cloud operation. This is necessary to understand what can be immediately transferred to the cloud, what needs to be modernized, and what is better left in the local environment. Costs will need to be calculated for each scenario.

The migration process itself is the most labor-intensive and costly part of the migration. The costs of this stage consist of the following:

Initial costs for deploying new servers and cloud services. At the same time, the organization will incur additional time and financial costs for local infrastructure and data synchronization.

Modification of applications and code, creation of new infrastructure according to business needs. A significant part of the costs is for labor.

Solving potential problems with data privacy and security during the move.

Overall, it is quite difficult to calculate the exact cost of migration. The cost of migration depends heavily on the skills of the team. The more qualified it is, the lower the cost of the move. A cloud provider or partner with the necessary competencies can help reduce the time and cost of migration.

Successful Cloud Migration Case Studies by Gart:

Consulting Case: Migration from On-Premise to AWS for a Financial Company

Implementation of Nomad Cluster for Massively Parallel Computing – AWS Performance Optimization

DevOps in Gambling: Digital Transformation for Betting Provider

Gart’s Expertise in ISO 27001 Compliance Empowers Spiral Technology for Seamless Audits and Cloud Migration

Future Cloud Infrastructure Costs

After the migration, the cost structure changes. There is a regular fee for computing resources. However, it is only possible to estimate the costs of cloud infrastructure approximately, since it is difficult to predict the exact amount of resources needed to host applications in the cloud.

Fortunately for the customer, the estimate is usually higher than the actual costs. This is because the estimate is based on their own equipment, which is purchased with a margin. In the end, VM resources are optimized, and scaling is done on an as-needed basis.
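Once the three figures discussed so far are known (current local costs, planned cloud costs, and the one-off cost of migration), the comparison itself is simple. The sketch below assumes flat monthly costs on both sides, which is a strong simplification. The function names and sample numbers are hypothetical.

```python
import math


def cumulative_costs(on_prem_monthly, cloud_monthly, migration_one_off, months):
    """Return (on-premises total, cloud total) in USD after a number of months."""
    on_prem_total = on_prem_monthly * months
    cloud_total = migration_one_off + cloud_monthly * months
    return on_prem_total, cloud_total


def break_even_month(on_prem_monthly, cloud_monthly, migration_one_off):
    """First month in which the cloud total no longer exceeds the on-premises total.

    Returns None when the cloud is not cheaper per month, so the migration
    cost is never recovered.
    """
    if cloud_monthly >= on_prem_monthly:
        return None
    return math.ceil(migration_one_off / (on_prem_monthly - cloud_monthly))


if __name__ == "__main__":
    # Hypothetical: $10,000/month on-premises, $7,000/month in the cloud,
    # $45,000 one-off migration cost.
    print(break_even_month(10_000, 7_000, 45_000))
    print(cumulative_costs(10_000, 7_000, 45_000, 36))
```

In this made-up scenario the migration pays for itself after 15 months; a result of None means the move has to be justified by something other than cost.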

How to Avoid Unnecessary Expenses

Ironically, many organizations move to the cloud to reduce costs, but in reality they overpay. According to a 2023 report by the American company Flexera, respondents estimated that their organizations wasted 32% of their cloud spend.

Therefore, before migrating, it is necessary to develop a strategy for working and optimizing in the cloud. Depending on the industry, strategies may vary, so it is important to start from the needs of the business and the real VM load.

Here are some tips for avoiding unnecessary cloud infrastructure costs:

Optimize Costs

It is important to track your monthly cloud spending to identify any areas where you may be overspending. If you are not using a cloud service, you should terminate it to avoid paying for it. You should rightsize your VMs to ensure that you are only paying for the resources that you need. You can use spot instances to save money on your cloud costs.

Control Resource Allocation

To avoid overspending, it is important to use the correct amount of cloud resources. Most often, part of the provisioned capacity is wasted because it is larger than necessary. According to Flexera, 40% of cloud instances are larger than their workloads require.

Here are some tips for controlling resource allocation:

When you create a VM, you should choose the right size for your needs. There are many different VM sizes available, so you should be able to find one that is a good fit for your requirements.

You can use resource groups and tags to organize your cloud resources. This will make it easier to track your usage and costs.

You can use autoscaling to scale your cloud resources up or down as needed. This will help you to avoid overprovisioning resources.

You should monitor your cloud usage to track your resource allocation. You can use a cloud monitoring tool to help you monitor your usage.

Read more: 20 Easy Ways to Optimize Expenses on AWS and Save Over 80% of Your Budget

Comparing cloud provider services

Comparing cloud provider services is a complex task, but it helps to reduce the overexpenditure of cloud resources. Organizations that work with multiple providers at the same time must regularly review their contracts, which helps to reduce their cost.

Re-evaluating assets

Conduct an assessment to identify and remove assets that have become unnecessary. It is also worth using cloud cost optimization tools.

Choosing a migration model

You can choose one of the three main models, namely migration to:

Public cloud

You purchase the capacities of one or more large data centers that simultaneously cooperate with many clients. This is an option that provides the business with the necessary flexibility and the ability to automate routine tasks.

Private cloud

Development and arrangement of your own cloud infrastructure on your own resources. This is a great solution for large companies with a developed IT infrastructure, a large number of branches, and those who find it difficult to maintain the operability of the system under the old conditions. A private cloud allows you to ensure absolute confidentiality of important data.

Hybrid cloud

A compromise option: the main array of services and data runs on the company's own resources, while an external data center lets the company scale quickly and confidently and test new technological solutions without putting the main system at risk.

Whichever option you choose, Gart Solutions will help you understand how to design and create a cloud for file storage and hosting your infrastructure, so that your business processes are as protected as possible from the influence of any factors, and are also efficient regardless of the scale of your activities.

Regardless of the method you choose for migration to the cloud, we will help implement all the possibilities of this choice in practice, including automatic backup to the cloud.

Conclusion

Migration to the cloud and the transition to a new cost model can be beneficial for business if you approach this process responsibly: compare the costs of local infrastructure and cloud in advance, develop a strategy and carefully monitor costs. Gart Solutions will be happy to take over the "turnkey" migration to the cloud, freeing up the time of your company's specialists for the implementation of more important projects.

Kubernetes is becoming the standard for development, while the entry barrier remains quite high. We've compiled a list of recommendations for application developers who are migrating their apps to the orchestrator. Knowing the listed points will help you avoid potential problems and not create limitations where Kubernetes has advantages.

Who is this text for?

This text is for developers whose team has no DevOps expertise, that is, no full-time specialists. They want to move to Kubernetes because the future belongs to microservices, and Kubernetes is the best solution for container orchestration and for accelerating development by automating code delivery to environments. They may have run something locally in Docker but haven't developed anything for Kubernetes yet.

Such a team can outsource Kubernetes support – hire a contractor company, an individual specialist, or, for example, use the relevant services of an IT infrastructure provider. For instance, Gart Solutions offers DevOps as a Service – a service where the company's experienced DevOps specialists will take on any infrastructure project under full or partial supervision: they'll move your applications to Kubernetes, implement DevOps practices, and accelerate your time-to-market.

Regardless, there are nuances that developers should be aware of at the architecture planning and development stage – before the project falls into the hands of DevOps specialists (and they force you to modify the code to work in the cluster). We highlight these nuances in the text.

The text will be useful for those who are writing code from scratch and planning to run it in Kubernetes, as well as for those who already have a ready application that needs to be migrated to Kubernetes. In the latter case, you can go through the list and understand where you should check if your code meets the orchestrator's requirements.

The Basics

To start, make sure you're familiar with the "gold standard," The Twelve-Factor App. This is a public guide describing the architectural principles of modern web applications. Most likely, you're already applying some of its points. Check how well your application adheres to these development standards.

The Twelve-Factor App

Codebase: One codebase, tracked in a version control system, serves all deployments.

Dependencies: Explicitly declare and isolate dependencies.

Configuration: Store configuration in the environment - don't embed in code.

Backing Services: Treat backing services as attached resources.

Build, Release, Run: Strictly separate the build, release, and run stages.

Processes: Run the application as one or more stateless processes.

Port Binding: Expose services through port binding.

Concurrency: Scale the application by adding processes.

Disposability: Maximize reliability with fast startup and graceful shutdown.

Dev/Prod Parity: Keep development, staging, and production environments as similar as possible.

Logs: Treat logs as event streams.

Admin Processes: Run administrative tasks as one-off processes.

Now let's move on to the highlighted recommendations and nuances.

Prefer Stateless Applications

Why?

Implementing fault tolerance for stateful applications will require significantly more effort and expertise.

Normal behavior for Kubernetes is to shut down and restart nodes. This happens during auto-healing when a node stops responding and is recreated, or during auto-scaling down (e.g., some nodes are no longer loaded, and the orchestrator excludes them to save resources).

Since nodes and pods in Kubernetes can be dynamically removed and recreated, the application should be ready for this. It should not write any data that needs to be preserved to the container it is running in.

What to do?

You need to organize the application so that data is written to databases, files to S3 storage, and, say, cache to Redis or Memcached. When the application stores its data "on the side," both replication and scaling the cluster under load (when additional nodes need to be added) become much easier.

In a stateful application where data is stored in an attached Volume (roughly speaking, in a folder next to the application), everything becomes more complicated. When scaling a stateful application, you'll have to provision volumes and make sure they are created in the right zone and properly attached. And what do you do with a volume when a replica needs to be removed?

Yes, some business applications should be run as stateful. However, in this case, they need to be made more manageable in Kubernetes. You need an Operator, specific agents inside performing the necessary actions... A prime example here is the postgres-operator. All of this is far more labor-intensive than simply throwing the code into a container, specifying five replicas, and watching it all work without additional dancing.

Ensure Availability of Endpoints for Application Health Checks

Why?

We've already noted that Kubernetes itself keeps the application in the desired state. This includes checking that the application is working, restarting faulty pods, removing pods from load balancing, rescheduling pods onto less loaded nodes, and terminating pods that exceed their resource limits.

For the cluster to correctly monitor the application's state, you should ensure the availability of endpoints for health checks, the so-called liveness and readiness probes. These are important Kubernetes mechanisms that essentially poke the container with a stick – check the application's viability (whether it's working properly).

What to do?

The liveness probes mechanism helps determine when it's time to restart the container so the application doesn't get stuck and continues to work.

Readiness probes are not so radical: they allow Kubernetes to understand when the container is ready to accept traffic. In case of an unsuccessful check, the pod will simply be excluded from load balancing – no new requests will reach it, but no forced termination will occur.

You can use this capability to allow the application to "digest" the incoming stream of requests without pod failure. Once a few readiness checks pass successfully, the replica pod will return to load balancing and start receiving requests again. From the nginx-ingress side, this looks like excluding the replica's address from the upstream.

Such checks are a useful Kubernetes feature, but misconfigured liveness probes can harm the application. For example, if you deploy an application update that fails the liveness or readiness checks, it will either be rolled back in the pipeline or lead to performance degradation (if the pods are otherwise configured correctly). There are also known cases of cascading pod restarts, when Kubernetes "collapses" one pod after another due to liveness probe failures.

You can disable checks as a feature, and you should do so if you don't fully understand their specifics and implications. Otherwise, it's important to specify the necessary endpoints and inform DevOps about them.

If you have an HTTP endpoint that is an exhaustive indicator of application health, you can point both the liveness and readiness probes at it. Keep in mind that when both probes share one endpoint, the pod will be restarted whenever that endpoint fails to return a correct response.
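As an illustration of what such endpoints might look like on the application side, here is a minimal sketch using only the Python standard library. The paths /healthz and /ready, the port 8080, and the ready flag are assumptions chosen for the example; Kubernetes does not mandate any of them, and your DevOps team will configure the probes to match whatever you expose.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Set once startup work (cache warm-up, DB connection, etc.) has finished.
ready = threading.Event()


def probe_status(path: str) -> int:
    """Return the HTTP status code for a probe path (hypothetical paths)."""
    if path == "/healthz":
        # Liveness: the process is up and able to answer at all.
        return 200
    if path == "/ready":
        # Readiness: report 503 until initialization is complete.
        return 200 if ready.is_set() else 503
    return 404


class ProbeHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        self.send_response(probe_status(self.path))
        self.end_headers()

    def log_message(self, format, *args) -> None:
        # Silence per-request logging; probes fire every few seconds.
        pass


if __name__ == "__main__":
    ready.set()  # in a real app, call this after initialization
    HTTPServer(("0.0.0.0", 8080), ProbeHandler).serve_forever()
```

Keeping the two paths separate lets the application signal "alive but busy" through the readiness path without triggering a restart through the liveness path.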

Aim for More Predictable and Uniform Application Resource Consumption

Why?

Almost always, containers inside Kubernetes pods are resource-limited within certain (sometimes quite small) values. Scaling in the cluster happens horizontally by increasing the number of replicas, not the size of a single replica. In Kubernetes, we deal with memory limits and CPU limits. Mistakes in CPU limits can lead to throttling, exhausting the available CPU time allotted to the container. And if we promise more memory than is available, as the load increases and hits the ceiling, Kubernetes will start evicting the lowest priority pods from the node. Of course, limits are configurable. You can always find a value at which Kubernetes won't "kill" pods due to memory constraints, but nevertheless, more predictable resource consumption by the application is a best practice. The more uniform the application's consumption, the tighter you can schedule the load.

What to do?

Evaluate your application: estimate approximately how many requests it processes and how much memory it occupies. How many pods need to run for the load to be evenly distributed among them? A pod that consistently consumes more than the others is a problem: Kubernetes will keep restarting it, jeopardizing the application's fault-tolerant operation. Raising resource limits to cover potential peak loads is not an option either, because the resources will sit idle whenever there is no load, wasting money.

In parallel with setting limits, it's important to monitor pod metrics. You can use kube-prometheus-stack, VictoriaMetrics, or at least Metrics Server (better suited to very basic scaling; from the console you can view kubelet stats on how much pods are consuming). Monitoring will help identify problem areas in production and reconsider the resource distribution logic.
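The sizing question of how many pods to run can be approached with simple arithmetic. The sketch below is a rough back-of-the-envelope estimate, not a capacity-planning method: the function name, the headroom parameter, and the sample numbers are all hypothetical, and it assumes load is spread evenly and each pod's throughput was measured under its actual limits.

```python
import math


def replicas_needed(peak_rps: float, rps_per_pod: float, headroom: float = 0.3) -> int:
    """Estimate replicas for peak_rps, keeping `headroom` spare capacity per pod."""
    # Only part of each pod's measured throughput is treated as usable.
    usable_rps = rps_per_pod * (1 - headroom)
    return max(1, math.ceil(peak_rps / usable_rps))


# Illustrative numbers: 900 requests/s at peak, 200 requests/s per pod.
print(replicas_needed(peak_rps=900, rps_per_pod=200))  # 7
```

Treat the result as a starting point and adjust it against the pod metrics you collect in production.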

There is a rather specific nuance regarding CPU time that Kubernetes application developers should keep in mind to avoid deployment issues and having to rewrite code to meet SRE requirements. Let's say a container is allocated 500 milli-CPUs, which is roughly 50 ms of CPU time on a single core for every 100 ms of real time. Simplified, if the application uses CPU time in several continuously busy threads (let's say four) and "eats up" the whole 50 ms budget in 12.5 ms, it will be frozen by the system for the remaining 87.5 ms until the next quota period. Staging databases run in Kubernetes with small limits exemplify this behavior: under load, queries that would normally take 5 ms jump to 100 ms. If the response graphs show load continuously increasing and then latency spiking, you've likely encountered this nuance. Address resource management: give more resources to replicas or increase the number of replicas to reduce load on each one.
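The quota arithmetic can be checked with a few lines of code. This is a simplified model, assuming the default 100 ms quota period and threads that stay fully busy on separate cores; the function name is made up for the example.

```python
def throttle_window(limit_millicores: int, busy_threads: int, period_ms: float = 100.0):
    """Return (running_ms, frozen_ms) per quota period for fully busy threads."""
    # CPU time the container may consume in one period.
    quota_ms = period_ms * limit_millicores / 1000
    # N busy threads burn N ms of CPU time per 1 ms of wall-clock time.
    running_ms = min(period_ms, quota_ms / busy_threads)
    return running_ms, period_ms - running_ms


print(throttle_window(500, busy_threads=4))  # (12.5, 87.5)
print(throttle_window(500, busy_threads=2))  # (25.0, 75.0)
print(throttle_window(500, busy_threads=1))  # (50.0, 50.0)
```

The more threads compete for the same quota, the sooner the budget runs out and the longer the container sits frozen in each period.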

Leverage Kubernetes ConfigMaps, Secrets, and Environment Variables

Kubernetes has several objects that can greatly simplify life for developers. Study them to save yourself time in the future.

ConfigMaps are Kubernetes objects used to store non-confidential data as key-value pairs. Pods can use them as environment variables or as configuration files in volumes. For example, you're developing an application that can run locally (for direct development) and, say, in the cloud. You create an environment variable for your app—e.g., DATABASE_HOST—which the app will use to connect to the database. You set this variable locally to localhost. But when running the app in the cloud, you need to specify a different value—say, the hostname of an external database.

Environment variables allow using the same Docker image for both local use and cloud deployment. No need to rebuild the image for each individual parameter. Since the parameter is dynamic and can change, you can specify it via an environment variable.
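In application code, this can be as small as one lookup with a local default. The sketch below reuses the DATABASE_HOST variable from the example above; the function name and the sample hostname are hypothetical.

```python
import os
from typing import Mapping


def database_host(env: Mapping[str, str] = os.environ) -> str:
    """Return the database host, falling back to localhost for local development."""
    return env.get("DATABASE_HOST", "localhost")


# Locally nothing is set, so the app connects to localhost.
print(database_host({}))
# In the cluster, the value would come from a ConfigMap or the pod spec.
print(database_host({"DATABASE_HOST": "db.example.internal"}))
```

The same image runs unchanged in both places; only the environment differs.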

The same applies to application config files. These files store settings the app needs to function correctly. Usually, when building a Docker image, you specify a default config or a config file to bake into the image. Different environments (dev, prod, test, etc.) require different settings, which sometimes need to be changed, for example for testing.

Instead of building separate Docker images for each environment and config, you can mount config files into the container when starting the pod. The app will then pick up the specified configuration files, and you'll use one Docker image for all the pods.

Typically, if the config files are large, you use a Volume to mount them into Docker as files. If the config files contain short values, environment variables are more convenient. It all depends on your application's requirements.
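A minimal sketch of the file-based approach, assuming a JSON config: the application ships with defaults and overlays whatever file is mounted at a known path. The APP_CONFIG_PATH variable, the /etc/app/config.json path, and the setting names are hypothetical, picked only for illustration.

```python
import json
import os
from pathlib import Path
from typing import Optional

# Defaults baked into the image; a mounted file overrides them.
DEFAULTS = {"log_level": "info", "page_size": 20}


def load_config(path: Optional[str] = None) -> dict:
    """Merge built-in defaults with a mounted JSON config file, if present."""
    path = path or os.environ.get("APP_CONFIG_PATH", "/etc/app/config.json")
    config = dict(DEFAULTS)
    config_file = Path(path)
    if config_file.is_file():
        config.update(json.loads(config_file.read_text(encoding="utf-8")))
    return config
```

With this shape, the image works without any mounted file, and each environment only needs to supply the keys that differ.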

Another useful Kubernetes abstraction is Secrets. Secrets are similar to ConfigMaps but are meant for storing confidential data—passwords, tokens, keys, etc. Using Secrets means you don't need to include secret data in your application code. They can be created independently of the pods that use them, reducing the risk of data exposure. Secrets can be used as files in volumes mounted in one or multiple pod containers. They can also serve as environment variables for containers.
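When a Secret is mounted as a volume, each key appears as a file in the mount directory. The sketch below shows one way an application might read such a value, with an environment variable as a fallback. The mount path /etc/app/secrets, the key names, and the name-to-variable convention are assumptions made for the example.

```python
import os
from pathlib import Path
from typing import Optional


def read_secret(name: str, mount_dir: str = "/etc/app/secrets") -> Optional[str]:
    """Read a secret from a mounted file, else from an environment variable."""
    secret_file = Path(mount_dir) / name
    if secret_file.is_file():
        # Strip the trailing newline that often ends up in secret files.
        return secret_file.read_text(encoding="utf-8").strip()
    # Hypothetical convention: "db-password" maps to DB_PASSWORD.
    return os.environ.get(name.upper().replace("-", "_"))
```

Reading the value at the moment it is needed, rather than hard-coding it, is what keeps secret data out of the application code.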

Disclaimer: In this point, we're only describing out-of-the-box Kubernetes functionality. We're not covering more specialized solutions for working with secrets, such as Vault.

Knowing about these Kubernetes features, a developer won't need to rebuild the entire container if something changes in the prepared configuration, for example, a password change.

Ensure Graceful Container Shutdown with SIGTERM

Why?

There are situations where Kubernetes "kills" an application before it has a chance to release resources. This is not an ideal scenario. It's better if, before shutting down, the application can finish responding to in-flight requests without accepting new ones, complete a transaction, or save data to a database.

What to do?

A good practice here is for the application to handle the SIGTERM signal. When a container is being terminated, the PID 1 process first receives SIGTERM, and the application is then given some time for graceful termination (the default is 30 seconds). If the container has not exited by then, it receives SIGKILL, the forced termination signal. The application should not continue accepting connections after receiving SIGTERM.

Many frameworks (e.g., Django) can handle this out of the box. Your application may already handle SIGTERM appropriately. Ensure that this is the case.
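If your framework does not do this for you, the handling can be sketched with the standard library alone. This is a simplified illustration, assuming the application runs as PID 1 in the container and has a main loop it can exit; the function names and the placeholder work loop are hypothetical.

```python
import signal
import threading

# Set when SIGTERM arrives; the main loop checks it between units of work.
shutting_down = threading.Event()


def handle_sigterm(signum, frame) -> None:
    """Signal handler: only flip a flag, do the real cleanup in the main loop."""
    shutting_down.set()


def serve(poll_seconds: float = 1.0) -> str:
    """Run until SIGTERM, then clean up well inside the grace period."""
    while not shutting_down.is_set():
        # Placeholder for real work: accept and handle one request or job.
        shutting_down.wait(timeout=poll_seconds)
    # Stop accepting new work here, finish in-flight requests,
    # commit or roll back transactions, close connections.
    return "shutdown complete"


if __name__ == "__main__":
    # Handlers must be registered from the main thread.
    signal.signal(signal.SIGTERM, handle_sigterm)
    print(serve())
```

Keeping the handler itself trivial and doing the cleanup in the main loop avoids running complex logic inside a signal handler.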

Here are a few more important points:

The Application Should Not Depend on Which Pod a Request Goes To

When moving an application to Kubernetes, we can expect to encounter auto-scaling (you may have migrated for this very reason). Depending on the load, the orchestrator adds or removes application replicas. The application mustn't depend on which pod a client request goes to, for example, static pods. Either the state needs to be synchronized to provide an identical response from any pod, or your backend should be able to work across multiple replicas without corrupting data.

Your Application Should Be Behind a Reverse Proxy and Serve Links Over HTTPS

Kubernetes has the Ingress entity, which essentially provides a reverse proxy for the application, typically an nginx with cluster automation. For the application, it's enough to work over HTTP and understand that the external link will be over HTTPS. Remember that in Kubernetes, the application is behind a reverse proxy, not directly exposed to the internet, and links should be served over HTTPS. When the application returns an HTTP link, Ingress rewrites it to HTTPS, which can lead to looping and a redirect error. Usually, you can avoid such a conflict by simply toggling a parameter in the library you're using, checking that the application is behind a reverse proxy. But if you're writing an application from scratch, it's important to remember how Ingress works as a reverse proxy.
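For an application written from scratch, being aware of the reverse proxy usually comes down to honoring the forwarded scheme when building absolute links. The sketch below assumes the proxy passes the original scheme in the X-Forwarded-Proto header, which is a common convention but should be confirmed for your Ingress setup; the function name and the trust_proxy flag are hypothetical.

```python
from typing import Mapping


def external_url(headers: Mapping[str, str], path: str, trust_proxy: bool = True) -> str:
    """Build an absolute link using the scheme the client actually used."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    lowered = {key.lower(): value for key, value in headers.items()}
    scheme = "http"  # what the app itself sees behind the proxy
    if trust_proxy:
        # Assumption: the reverse proxy sets X-Forwarded-Proto.
        scheme = lowered.get("x-forwarded-proto", scheme)
    host = lowered.get("host", "localhost")
    return f"{scheme}://{host}{path}"


print(external_url({"Host": "shop.example.com", "X-Forwarded-Proto": "https"}, "/cart"))
# https://shop.example.com/cart
```

Only enable this kind of trust when the application is reachable solely through the proxy, since a client talking to the app directly could set the header itself.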

Leave SSL Certificate Management to Kubernetes

Developers don't need to think about how to "hook up" certificate addition in Kubernetes. Reinventing the wheel here is unnecessary and even harmful. For this, a separate service is typically used in the orchestrator — cert-manager, which can be additionally installed. The Ingress Controller in Kubernetes allows using SSL certificates for TLS traffic termination. You can use both Let's Encrypt and pre-issued certificates. If needed, you can create a special secret for storing issued SSL certificates.