- What Does Cloud Computing Mean and Why Most Enterprises Use It?

- Why Choose On‑Premises Infrastructure Even Today?

- On-Premises Infrastructure

- Cost Considerations: OpEx vs CapEx and Hidden Costs

- Performance and Scalability: Cloud vs. Bare Metal

- Compliance, Data Sovereignty & Security: Cloud vs. On‑Premises

- Cloud Provider Security Measures vs. In-House Security

- Use Cases and Considerations: Cloud vs. On-premises

- The Future is Hybrid

- Additional Factors to Consider When Choosing Cloud or On-Premises

- In Conclusion

The way businesses store and manage data is undergoing a significant shift. Traditionally, companies relied on on-premises infrastructure – physical servers and hardware located within their own facilities. However, the rise of cloud computing offers a compelling alternative. Let’s delve into both options with facts and figures to help you decide which is best for your needs.

This article helps you decide when to use cloud computing, on‑premises hardware, or a hybrid strategy. Learn the advantages, costs, performance considerations, and compliance implications for each.

What Does Cloud Computing Mean and Why Most Enterprises Use It?

Cloud computing delivers IT services, including servers, databases, storage, networking, and analytics, over the internet. It supports scalability, rapid innovation, and cost flexibility.

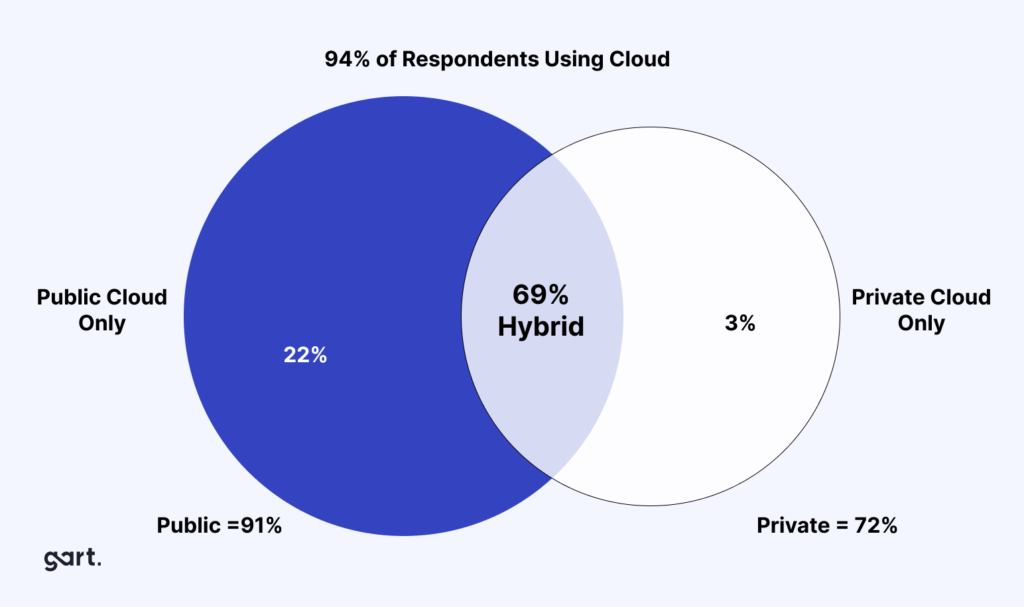

As of 2019, 94% of enterprises used cloud services (Source: Flexera), and 85% of IT strategies were projected to be cloud-first by 2025 (Source: Gartner). Why?

Cloud eliminates the upfront costs of buying and maintaining hardware. You only pay for the resources you use, leading to significant potential savings.

Cloud providers handle software updates and security patches, freeing up your IT staff for other tasks. Access your data and applications from anywhere with an internet connection, promoting remote work and collaboration.

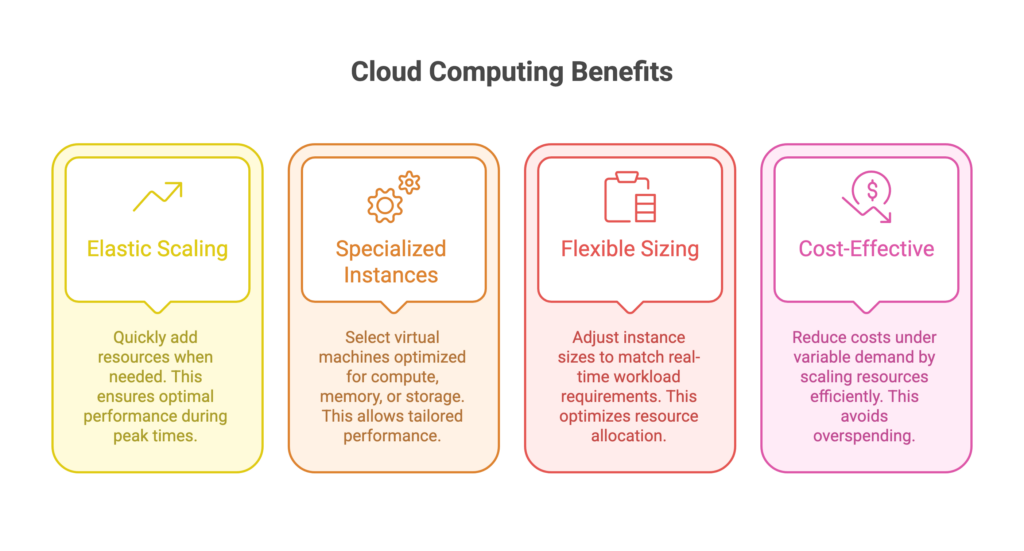

Key Cloud Benefits:

- Elastic Resources: Scale up or down instantly.

- Reduced Maintenance: Providers handle updates, patches, and uptime.

- Cost Efficiency: Pay only for what you use (OpEx model).

- Remote Access: Support distributed teams and collaboration.

- Innovation Ready: Experiment faster with new tools and services.

A 2023 Flexera report found that cloud migration can cut infrastructure costs by up to 30%.

Why Choose On‑Premises Infrastructure Even Today?

Summary:

On‑premises infrastructure gives you full control, high customization, and total ownership of your environment—but also demands greater capital investment and ongoing maintenance.

What is On-Premises Infrastructure?

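On-premises infrastructure, referred to in this article as bare metal, means computing resources physically located and managed within your organization’s facilities. Strictly speaking, bare metal means physical servers without a virtualization layer, which can also be rented from a hosting provider.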

While cloud is trending, on-premises still holds relevance for:

- Customization: Full control over hardware/software.

- Data Security Preference: Some industries view on-prem as more secure.

- Regulatory Pressure: Industries like finance or defense may require data to stay in-house.

On-Premises Infrastructure

On-premises, or bare metal, infrastructure is installed and run on hardware at the organization's own premises rather than at a remote facility or in the cloud. The global bare metal cloud market was valued at $5.6 billion in 2021 and is projected to reach $56.6 billion by 2031, growing at a CAGR of 26.1% from 2022 to 2031 (Source: Verified Market Research).

On average, organizations using on-premises infrastructure spend 55% of their IT budgets on maintenance, compared to 45% for cloud users (Source: Deloitte).

While cloud computing is gaining traction, on-premises solutions still hold value for some businesses:

- You have complete control over your hardware and software, allowing for high levels of customization.

- Some businesses prefer to keep sensitive data in-house because they perceive it as more secure. However, reputable cloud providers also offer robust data protection backed by advanced security measures.

- Certain industries may have strict data residency regulations that favor on-premises storage.

Cost Considerations: OpEx vs CapEx and Hidden Costs

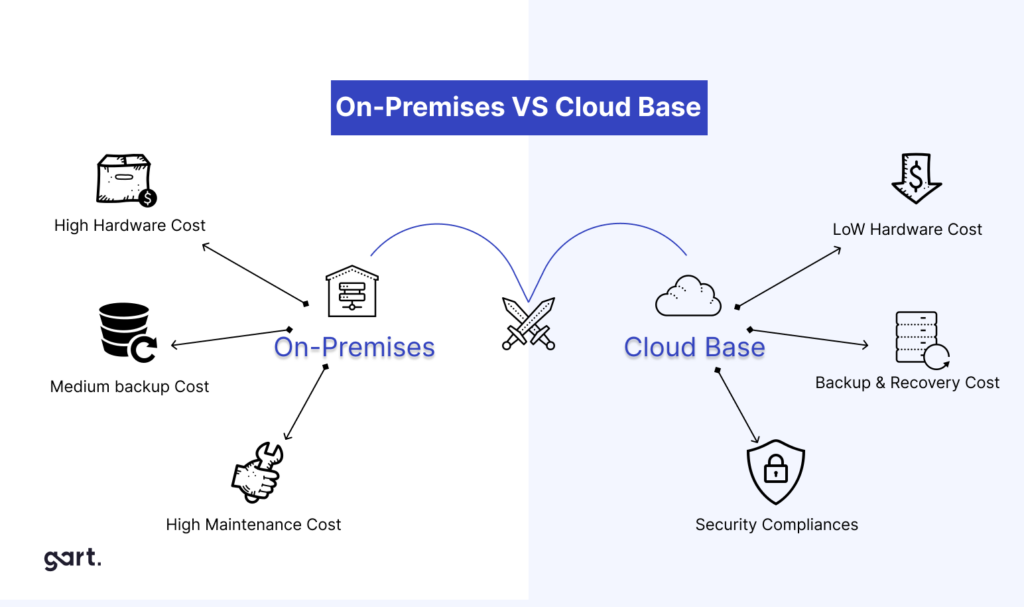

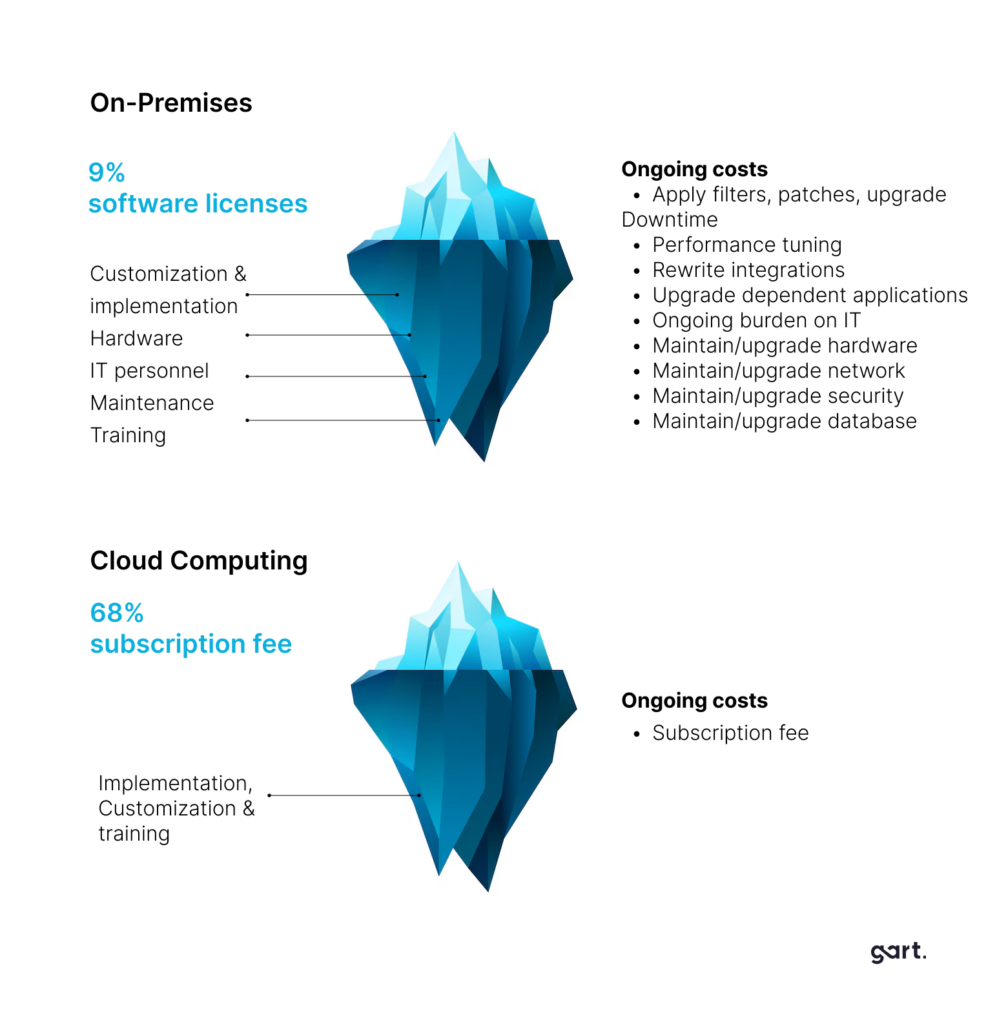

Cloud services operate on an operational expenditure (OpEx) model, where you pay for resources and services as you use them. This eliminates the need for large upfront investments.

On-premises solutions require substantial capital expenditure (CapEx) for purchasing and setting up hardware, data centers, and related infrastructure. This includes costs for servers, storage, networking equipment, and facilities.

Starting with cloud services often requires minimal initial financial commitment. You can quickly provision resources without significant capital expenditure, making it accessible for startups and projects with limited budgets.

The hardware you buy will depreciate over time, which can affect long-term financial planning and capital allocation.

Cloud uses an OpEx model (pay-as-you-go), while on-premises requires CapEx (hardware + setup). However, the total cost includes hidden factors, such as maintenance, refresh cycles, and staff, which can make on-prem more expensive over time.

| Feature | Cloud Computing | On-Premises (Bare Metal) |
|---|---|---|
| Initial Investment | Low (OpEx) | High (CapEx) |
| Hidden Costs | Fewer (no cooling, staffing) | Higher (power, cooling, facilities, staff) |
| Hardware Refresh | Handled by provider | Requires internal planning and expense |
| Resource Utilization | Pay only for what you use | Risk of overprovisioning and idle hardware |
| Scalability | Instant, elastic, cost-efficient | Requires physical scaling and long lead times |

Key Insights:

- On-prem may appear cheaper once the hardware is paid for, but over time, TCO (Total Cost of Ownership) can be significantly higher.

- Many organizations overspend due to underused hardware and frequent refresh cycles.
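
To make the TCO comparison concrete, here is a minimal Python sketch. Every figure in it is a hypothetical placeholder rather than a benchmark, and the model is deliberately simple: a flat monthly cloud bill on one side, and upfront CapEx plus yearly running costs plus periodic hardware refreshes on the other.

```python
def on_prem_tco(years, capex, annual_opex, refresh_every, refresh_cost):
    """Cumulative on-prem cost over `years`.

    Assumes a hardware refresh is paid at the start of year
    refresh_every + 1, 2 * refresh_every + 1, and so on.
    """
    refreshes = (years - 1) // refresh_every
    return capex + annual_opex * years + refreshes * refresh_cost


def cloud_tco(years, monthly_bill):
    """Cumulative cloud cost under a flat pay-as-you-go bill."""
    return monthly_bill * 12 * years


if __name__ == "__main__":
    # Hypothetical inputs, for illustration only.
    for years in (1, 3, 5, 7):
        on_prem = on_prem_tco(years, capex=200_000, annual_opex=60_000,
                              refresh_every=4, refresh_cost=150_000)
        cloud = cloud_tco(years, monthly_bill=9_000)
        print(f"{years} yr: on-prem ${on_prem:,} vs cloud ${cloud:,}")
```

Swap in your own hardware quotes, staffing costs, and cloud bills. The outcome depends entirely on those inputs, and a steady workload with long refresh cycles can tip the result the other way.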

Performance and Scalability: Cloud vs. Bare Metal

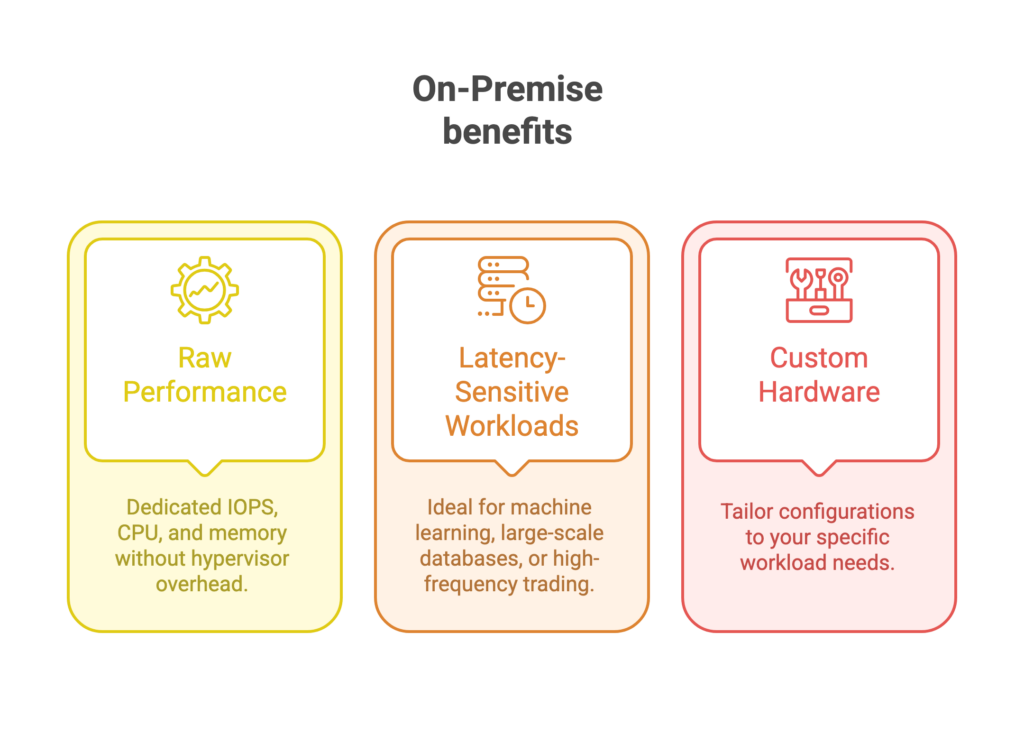

Cloud offers elastic scalability, ideal for dynamic workloads. Bare metal provides raw power and consistency, ideal for latency-sensitive, compute-heavy tasks.

Cloud computing offers elasticity, allowing you to rapidly scale resources (processing power, storage) up or down based on real-time demand. This maintains performance during peak loads without paying for idle capacity during quiet periods. A 2023 study by Flexera found that 73% of businesses reported improved application performance after migrating to the cloud.

Examples:

▪️ AWS lets you choose from a range of EC2 instance types optimized for different workloads, such as compute-optimized, memory-optimized, and storage-optimized instances. For example, the general-purpose m5.2xlarge instance provides 8 vCPUs and 32 GiB of memory, a balance of compute and memory for everyday workloads.

▪️ Azure offers virtual machine sizes tailored for specific scenarios, such as the D-series for general-purpose workloads and the H-series for high-performance computing.
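
The elasticity described above usually comes down to a scaling rule. The sketch below shows one simple proportional rule in Python: resize the fleet so that average utilization lands near a target. The function name, the limits, and the sample numbers are assumptions for illustration. Real autoscalers from AWS, Azure, and others add cooldown periods, smoothing, and their own policy options.

```python
import math


def desired_instances(current, observed_util, target_util, min_n=1, max_n=20):
    """Proportional scaling rule (illustrative only).

    Sizes the fleet so that average utilization lands near the target,
    then clamps the result to the range [min_n, max_n].
    """
    if target_util <= 0:
        raise ValueError("target_util must be positive")
    wanted = math.ceil(current * observed_util / target_util)
    return max(min_n, min(max_n, wanted))


if __name__ == "__main__":
    # Hypothetical readings: utilization in percent.
    print(desired_instances(current=4, observed_util=90, target_util=60))
    print(desired_instances(current=4, observed_util=30, target_util=60))
```

With four instances running at 90% against a 60% target, the rule asks for six. At 30% it asks for two, which is where the pay-for-what-you-use savings come from.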

Bare metal servers often provide superior performance for certain high-demand workloads due to their dedicated hardware. This can be critical for applications requiring high I/O throughput, low latency, or substantial computational power. With bare metal, you have the flexibility to configure hardware to meet specific performance requirements. This is particularly beneficial for specialized applications, such as machine learning models or high-frequency trading platforms.

Examples:

▪️ A bare metal server with Intel Xeon Platinum CPUs and NVMe SSDs can handle large-scale databases or data-intensive applications with minimal latency. For instance, benchmarks show that a single bare metal server can achieve up to 1 million IOPS (input/output operations per second) compared to 100,000 IOPS for a typical cloud SSD instance.

▪️ IBM offers customizable bare metal servers with up to 192 GB of RAM and 16 vCPUs, providing the raw performance needed for demanding workloads. These servers are often used for tasks that require consistent, high-speed performance without the overhead of virtualization.

Scaling on-premises infrastructure typically requires purchasing and installing additional hardware. This process involves significant planning, procurement, and installation time. For example, scaling from a small data center to a larger one may involve several months of lead time for new hardware and infrastructure.
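
Because on-premises capacity cannot follow demand hour by hour, it is usually sized for the peak. The Python sketch below compares the server-hours needed when you provision for the peak around the clock with an idealized elastic setup that matches each hour's demand. The demand curve is hypothetical, and real cloud scaling is never perfectly granular.

```python
def capacity_hours(hourly_demand, headroom=0.0):
    """Return (fixed, elastic) server-hours for a demand curve.

    fixed:   provision for the peak in every hour (typical on-prem sizing)
    elastic: provision each hour for that hour's demand (idealized cloud)
    headroom is an optional safety margin, e.g. 0.2 for 20%.
    """
    peak = max(hourly_demand)
    fixed = peak * (1 + headroom) * len(hourly_demand)
    elastic = sum(d * (1 + headroom) for d in hourly_demand)
    return fixed, elastic


# Hypothetical day: 20 quiet hours needing 2 servers, 4 peak hours needing 10.
demand = [2] * 20 + [10] * 4
fixed, elastic = capacity_hours(demand)
utilization = elastic / fixed
print(f"fixed: {fixed:.0f} h, elastic: {elastic:.0f} h, "
      f"utilization of fixed fleet: {utilization:.0%}")
```

In this made-up example the fixed fleet is busy only about a third of the time. The flatter your demand curve, the smaller that gap becomes.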

Compliance, Data Sovereignty & Security: Cloud vs. On‑Premises

Cloud providers offer robust security and global compliance, but you must manage shared responsibilities. On-premises gives full control, but also full accountability.

Major cloud providers comply with a range of international and industry-specific standards. For example:

- AWS Compliance: AWS holds ISO 27001 certification and SOC 1/2/3 reports, and supports GDPR and HIPAA compliance.

- Azure Compliance: Microsoft Azure holds ISO 27001 certification and SOC 1/2/3 reports, and supports GDPR and HIPAA compliance.

- Google Cloud Compliance: Google Cloud holds ISO 27001 certification and SOC 1/2/3 reports, and supports GDPR and HIPAA compliance.

Cloud providers offer data residency options, allowing organizations to choose the geographical location where their data is stored. For instance, AWS provides data centers across various regions globally, and users can select the region that aligns with their data sovereignty requirements.

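Cloud providers also offer tools and contractual terms that help customers comply with local data protection laws, such as the EU’s General Data Protection Regulation (GDPR). GDPR does not strictly require personal data to stay inside the EU, but it restricts transfers to countries outside the European Economic Area unless they offer an adequate level of protection or other approved safeguards are in place.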
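
Region selection only helps if it is enforced. As a minimal sketch, the Python check below flags resources deployed outside an allowed set of regions. The policy, the inventory, and the resource names are hypothetical. The region codes follow AWS naming, and a real check would pull the inventory from your provider's API or infrastructure-as-code state instead of a hard-coded list.

```python
# Hypothetical policy: personal data may only live in these EU regions.
ALLOWED_REGIONS = {"eu-west-1", "eu-central-1"}


def residency_violations(resources, allowed=ALLOWED_REGIONS):
    """Return the names of resources deployed outside the allowed regions."""
    return [r["name"] for r in resources if r["region"] not in allowed]


# Hypothetical inventory, for illustration only.
inventory = [
    {"name": "customer-db", "region": "eu-central-1"},
    {"name": "analytics-bucket", "region": "us-east-1"},
    {"name": "web-frontend", "region": "eu-west-1"},
]

print(residency_violations(inventory))  # ['analytics-bucket']
```

Running a check like this in a deployment pipeline catches a misplaced resource before it holds any regulated data.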

On‑Prem Compliance Pros and Cons:

- Full control over data and infrastructure.

- Ideal for strict regulations in finance, defense, or healthcare.

- But: You’re fully responsible for audits, reporting, and security hardening.

A study by IAPP found that GDPR compliance costs average $1.5M per organization — cloud providers often absorb parts of this burden via shared responsibility.

On-premises environments require organizations to ensure compliance with local and industry regulations. This often involves implementing complex data protection measures and ensuring that all aspects of the infrastructure adhere to regulatory standards.

With on-premises infrastructure, organizations have complete control over their data and its location, which can be advantageous for meeting specific data sovereignty requirements. However, this also means that the organization is fully responsible for implementing and maintaining compliance measures.

Cloud Provider Security Measures vs. In-House Security

In cloud environments, security is a shared responsibility between the cloud provider and the customer. Providers like AWS, Azure, and Google Cloud are responsible for the security of the cloud infrastructure, including physical security, network security, and virtualization layers. Customers are responsible for securing their data, applications, and configurations within the cloud.

On-premises security involves dedicated resources for managing physical security, network security, and data protection. This includes physical access controls, firewalls, intrusion detection systems, and regular security audits.

According to a Ponemon Institute study, organizations with in-house security teams spend an average of $3.6 million annually on security, compared to $2.6 million for organizations using managed security services. Managed security services are not identical to cloud hosting, but the gap points to a potential cost advantage for cloud security solutions, where many security services are included as part of the subscription.

Use Cases and Considerations: Cloud vs. On-premises

| Use Case | Cloud Computing | On-Premises Infrastructure |
|---|---|---|
| Startups & Rapid Dev | Ideal – fast deployment, low CapEx, scalability | Less suitable – high upfront costs, longer setup time |
| High-Traffic Applications | Elastic scaling handles spikes effortlessly | Requires costly overprovisioning to match peak demand |
| Stable Workloads | Good with cost-optimized reserved instances | Suitable, though scaling may be more complex |
| Variable Demand | Best – resources scale dynamically | Scaling up/down is time-consuming and hardware-bound |
| Sensitive Data Compliance | Supports with region selection + certifications | Offers full control for strict regulatory environments |

The Future is Hybrid

Many businesses are adopting a hybrid approach, combining cloud and on-premises infrastructure. This allows them to leverage the benefits of both: cost-effectiveness, scalability, and control over sensitive data.

| Feature | Cloud Computing | On-premises/Bare Metal |
|---|---|---|
| Deployment Model | Off-site, delivered over the internet | On-site, within your data center |
| Scalability | Easy to scale up or down resources | Scaling can be slow and expensive |
| Cost | Pay-as-you-go model | High upfront costs for hardware, software, and IT staff |
| Accessibility | Accessible from anywhere with an internet connection | Access might be restricted to the local network |
| Security | Robust security features offered by cloud providers | Requires strong internal security measures |
| Maintenance | Managed by the cloud provider | Requires in-house IT staff for maintenance |
| Control | Less control over hardware and software | Full control over hardware and software |
| Customization | Limited customization options | Highly customizable |

Why Hybrid Works:

- Critical apps or sensitive data stay on-premises.

- Web apps, backups, and analytics move to the cloud.

- You gain cost-efficiency, resilience, and agility.
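
The split above can be written down as a simple placement rule. The Python sketch below is only an illustration of that rule of thumb. The `Workload` fields and the example workloads are hypothetical, and a real placement decision would also weigh latency, cost, and existing contracts.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    sensitive_data: bool = False     # subject to strict data-control rules
    business_critical: bool = False  # must stay under direct control


def place(workload: Workload) -> str:
    """Rule of thumb: critical or sensitive workloads stay on-premises,
    everything else goes to the cloud."""
    if workload.sensitive_data or workload.business_critical:
        return "on-premises"
    return "cloud"


if __name__ == "__main__":
    # Hypothetical workloads, for illustration only.
    for w in (Workload("payments-ledger", sensitive_data=True),
              Workload("marketing-site"),
              Workload("nightly-backups")):
        print(f"{w.name}: {place(w)}")
```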

Additional Factors to Consider When Choosing Cloud or On-Premises

IT Expertise

- Cloud simplifies infrastructure and reduces the need for deep in-house hardware skills, though you remain responsible for securing your own configurations.

- On-premises requires hands-on experience for setup, maintenance, and compliance.

Industry Regulations

- Healthcare, finance, and government may mandate data residency or on-premises data control.

- Cloud providers increasingly meet these standards, but internal audits may still favor on-prem.

Business Stage

- Startups or growing businesses benefit most from cloud flexibility.

- Mature organizations with legacy systems may prefer to extend on-prem investments.

In Conclusion

Cloud computing has revolutionized how businesses manage IT. With elastic scalability, global reach, and reduced CapEx, it fits most modern businesses.

However, on-premises remains valuable for highly regulated, security-conscious, or performance-driven environments.

For many, a hybrid approach offers the best balance — agility, control, and cost-efficiency combined.

Still unsure?

Let’s discuss your infrastructure needs and tailor a solution that fits both your tech and your compliance goals.
