- What are the four golden signals in SRE?

- Why Golden Signals Matter

- What are the key benefits of using “golden signals” in a microservices environment?

- How to Monitor Microservices Using Golden Signals

- How can the “one-hop dependency view” assist in troubleshooting?

- Practical Application: Using APM Dashboards

- What is the significance of distinguishing 500 vs. 400 errors in SRE monitoring?

- SRE Monitoring Dashboard Best Practices

- Conclusion

Site Reliability Engineering (SRE) focuses on keeping services reliable and scalable. A crucial part of this discipline is monitoring, which is where the concept of Golden Signals comes into play.

By focusing on just four “Golden Signals,” organizations can cut their incident response time in half. Golden Signals help teams quickly identify and diagnose issues within a system.

This post explores how SRE teams use these metrics (latency, errors, traffic, and saturation) to drive reliability and streamline troubleshooting in complex microservices environments.

What are the four golden signals in SRE?

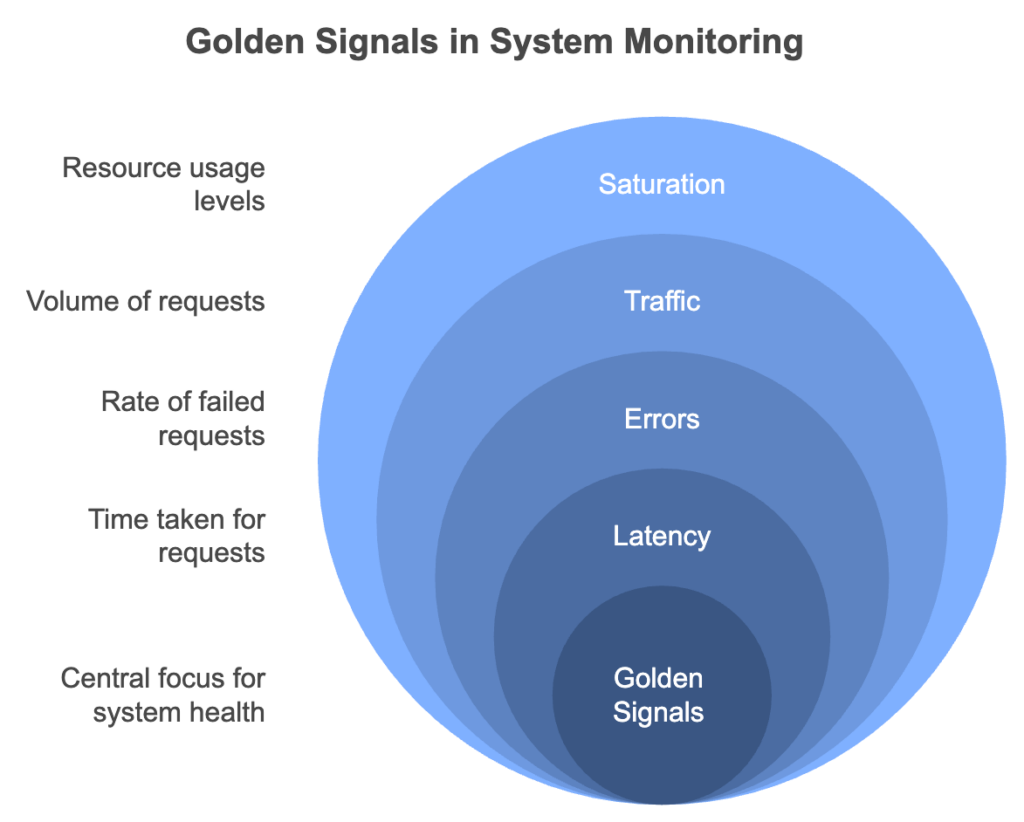

SRE principles streamline monitoring by focusing on four key metrics, collectively known as Golden Signals: latency, errors, traffic, and saturation. Rather than tracking numerous metrics across different technologies, teams watch these four to identify and resolve issues quickly.

Latency:

Latency is the time it takes to serve a request, measured from the moment the client sends it until the response arrives. High latency degrades the user experience, so it is critical to keep this metric in check. For example, in web applications, latency might typically range from 200 to 400 milliseconds. As a rule of thumb, latency under 300 ms generally supports a good user experience, while (on the errors side) an error rate above 1% warrants investigation. Latency monitoring helps detect slowdowns early, allowing for quick corrective action.
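As a rough illustration of how a latency target can be checked, the following Python sketch computes nearest-rank percentiles over a handful of made-up samples and compares them with the 300 ms figure mentioned above. The function names, the sample values, and the choice of P95 are assumptions for illustration, not the API of any particular monitoring tool.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile (pct in 1..100) of a non-empty list of numbers."""
    if not samples:
        raise ValueError("samples must be non-empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based nearest rank
    return ordered[max(rank, 1) - 1]

def latency_ok(samples_ms, pct=95, threshold_ms=300):
    """True when the chosen percentile is at or below the threshold."""
    return percentile(samples_ms, pct) <= threshold_ms

# Hypothetical request latencies in milliseconds.
latencies_ms = [120, 180, 210, 250, 260, 270, 280, 290, 310, 900]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
print(p50, p95, latency_ok(latencies_ms))
```

In this sample the median sits comfortably under the target while the P95 is dominated by one slow request, which is the kind of tail behaviour a simple average tends to hide.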

Errors:

Errors refer to the rate of failed requests. Monitoring errors is essential because not all errors have the same impact. For instance, a 500 error (server error) is more severe than a 400 error (client error) because the former often requires immediate intervention. Identifying error spikes can alert teams to underlying issues before they escalate into major problems.
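One way to make the error signal concrete is to compute separate rates for client and server errors from a stream of HTTP status codes. The sketch below assumes the status codes are already available as a list of integers; the function name and the sample data are hypothetical.

```python
from collections import Counter

def error_rates(status_codes):
    """Return the fraction of 4xx and 5xx responses among all requests."""
    total = len(status_codes)
    if total == 0:
        return {"4xx": 0.0, "5xx": 0.0}
    # Group by the leading digit of the status code (4 -> 4xx, 5 -> 5xx).
    classes = Counter(code // 100 for code in status_codes)
    return {"4xx": classes[4] / total, "5xx": classes[5] / total}

# Hypothetical sample: 95 successes, 3 client errors, 2 server errors.
codes = [200] * 95 + [404] * 3 + [500, 503]
rates = error_rates(codes)
print(rates)
```

Keeping the two rates apart lets a team alert on the server-side rate while only trending the client-side one.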

Traffic:

Traffic measures the volume of requests coming into the system. Understanding traffic patterns helps teams prepare for expected loads and identify anomalies that might indicate issues such as DDoS attacks or unplanned spikes in user activity. For example, if your system is built to handle 1,000 requests per second and suddenly receives 10,000, this surge might overwhelm your infrastructure if not properly managed.
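A minimal sketch of the traffic signal, assuming request arrival times are available as epoch timestamps in seconds: it buckets requests per second and flags the seconds that exceed a stated capacity. The names and the tiny capacity value are illustrative assumptions only.

```python
from collections import Counter

def requests_per_second(timestamps):
    """Count requests in each whole-second bucket of the given timestamps."""
    return Counter(int(ts) for ts in timestamps)

def over_capacity(rps_by_second, capacity_rps):
    """Return the seconds whose request count exceeds the stated capacity."""
    return sorted(sec for sec, n in rps_by_second.items() if n > capacity_rps)

# Hypothetical arrival times (seconds); the capacity of 4 RPS is made up.
timestamps = [0.1, 0.4, 0.9, 1.2, 1.3, 1.5, 1.7, 1.8, 2.5]
rps = requests_per_second(timestamps)
print(dict(rps), over_capacity(rps, capacity_rps=4))
```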

Saturation:

Saturation is about resource utilization; it shows how close your system is to reaching its full capacity. Monitoring saturation helps avoid performance bottlenecks caused by overuse of resources like CPU, memory, or network bandwidth. Think of it like a car’s tachometer: once it redlines, you’re pushing the engine too hard, risking a breakdown.

Challenges associated with monitoring saturation in microservices:

- Complexity of Microservice Architectures:

In microservice environments, various services are often built on different technologies (e.g., Node.js, databases, Swift). Each service may handle resource usage differently, making it challenging to monitor and understand overall system saturation accurately. Saturation occurs when resources such as CPU, memory, or network bandwidth are fully utilized, leading to degraded performance.

- Resource Utilization Visibility:

Since each microservice can have its own metrics, gaining a clear view of overall saturation is difficult. Teams need to aggregate and standardize data from multiple services to accurately assess saturation levels. This can be time-consuming and requires expertise across different technology stacks.

- Identification of Bottlenecks:

Saturation often results in bottlenecks where some services are overloaded while others are underutilized. Pinpointing which service is causing the bottleneck in a complex system can be difficult without a cohesive monitoring approach like the one provided by SRE Golden Signals.

- Dynamic and Variable Loads:

In microservice architectures, traffic and resource demands can fluctuate rapidly, making it essential to monitor saturation in real time. Services must adapt to changes in load, but without proper monitoring, it’s easy to miss critical saturation points that can impact overall system performance.
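The aggregation problem described above can be sketched by normalizing every resource to a used/capacity ratio and reporting the most constrained resource per service. This is only one possible convention; the service names, resource names, and numbers below are hypothetical.

```python
def saturation(usage):
    """Return (most constrained resource, utilization ratio) for one service.

    `usage` maps a resource name to a (used, capacity) pair in any unit,
    so services that report different units become comparable.
    """
    ratios = {name: used / capacity for name, (used, capacity) in usage.items()}
    worst = max(ratios, key=ratios.get)
    return worst, ratios[worst]

# Hypothetical per-service resource readings.
services = {
    "auth": {"cpu_cores": (3.1, 4.0), "memory_gb": (2.0, 8.0)},
    "payments": {"cpu_cores": (1.0, 4.0), "memory_gb": (7.1, 8.0)},
}
report = {name: saturation(usage) for name, usage in services.items()}
for name, (resource, ratio) in report.items():
    print(f"{name}: {resource} at {ratio:.0%}")
```

Reporting a single ratio per service makes it easier to compare services built on different stacks, at the cost of hiding which other resources are close behind.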

Why Golden Signals Matter

Golden Signals provide a comprehensive overview of a system’s health, enabling SREs and DevOps teams to be proactive rather than reactive. By continuously monitoring these metrics, teams can spot trends and anomalies, address potential issues before they affect end-users, and maintain a high level of service reliability.

SRE Golden Signals help in proactive system monitoring

SRE Golden Signals are crucial for proactive system monitoring because they simplify the identification of root causes in complex applications. Instead of getting overwhelmed by numerous metrics from various technologies, SRE Golden Signals focus on four key indicators: latency, errors, traffic, and saturation.

By continuously monitoring these signals, teams can detect anomalies early and address potential issues before they affect the end-user. For instance, if there is an increase in latency or a spike in error rates, it signals that something is wrong, prompting immediate investigation.

What are the key benefits of using “golden signals” in a microservices environment?

The “golden signals” approach is especially beneficial in a microservices environment because it provides a simplified yet powerful framework to monitor essential metrics across complex service architectures.

Here’s why this approach is effective:

▪️Focuses on Key Performance Indicators (KPIs)

By concentrating on latency, errors, traffic, and saturation, the golden signals let teams avoid the overwhelming and often unmanageable task of tracking every metric across diverse microservices. This strategic focus means that only the most crucial metrics impacting user experience are monitored.

▪️Enhances Cross-Technology Clarity

In a microservices ecosystem where services might be built on different technologies (e.g., Node.js, DB2, Swift), using universal metrics minimizes the need for specific expertise. Teams can identify issues without having to fully understand the intricacies of every service’s technology stack.

▪️Speeds Up Troubleshooting

Golden signals quickly highlight root causes by filtering out non-essential metrics, allowing the team to narrow down potential problem areas in a large web of interdependent services. This is crucial for maintaining service uptime and a seamless user experience.

By applying these golden signals, SRE teams can efficiently diagnose and address issues, keeping complex applications stable and responsive.

How to Monitor Microservices Using Golden Signals

Monitoring microservices requires a streamlined approach, especially in environments where dozens (or hundreds) of services interact across various technology stacks. Golden Signals provide a clear, focused framework for tracking system health across these distributed systems.

1. Start by Defining What You’ll Monitor

Each microservice should have its own observability pipeline for:

- Latency – Measure the time it takes for a request to be processed from start to finish.

- Errors – Capture both 4xx and 5xx HTTP codes or application-level exceptions.

- Traffic – Monitor request rates (RPS/QPS) and message throughput.

- Saturation – Track CPU, memory, thread usage, and queue lengths.

Tip: Integrate these signals into SLIs (Service Level Indicators) and SLOs (Service Level Objectives) to measure system reliability over time.
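To show how a signal can feed an SLI and an SLO, here is a small sketch that derives an availability SLI from request counts and reports how much of the error budget remains. The 99.9% objective, the request counts, and the function names are assumptions chosen for the example.

```python
def availability_sli(good_requests, total_requests):
    """Fraction of requests that succeeded (1.0 when there was no traffic)."""
    if total_requests == 0:
        return 1.0
    return good_requests / total_requests

def error_budget_remaining(sli, slo):
    """Fraction of the error budget left: 1.0 is untouched, <= 0.0 is exhausted."""
    if not 0.0 < slo < 1.0:
        raise ValueError("slo must be strictly between 0 and 1")
    allowed_failure = 1.0 - slo   # failure rate the SLO tolerates
    actual_failure = 1.0 - sli    # failure rate actually observed
    return 1.0 - actual_failure / allowed_failure

# Hypothetical month: 500 failed requests out of one million, SLO of 99.9%.
sli = availability_sli(good_requests=999_500, total_requests=1_000_000)
remaining = error_budget_remaining(sli, slo=0.999)
print(f"SLI={sli:.4%}, error budget remaining={remaining:.0%}")
```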

2. Use Unified Observability Tools

Deploy tools that allow you to collect metrics, logs, and traces across all services. Popular platforms include:

- Datadog and New Relic: Full-stack observability with built-in Golden Signals support.

- Prometheus + Grafana: Open-source, highly customizable metrics + dashboards.

- OpenTelemetry: Instrument code once to collect traces, metrics, and logs.

3. Isolate Service Boundaries

Microservices should expose telemetry endpoints (e.g., /metrics for Prometheus or OpenTelemetry exporters). Group Golden Signals by service for clarity:

| Microservice | Latency | Error Rate | Traffic | Saturation |
|---|---|---|---|---|
| Auth | 220 ms | 1.2% | 5k RPS | 78% CPU |
| Payments | 310 ms | 3.1% | 3k RPS | 89% Memory |

4. Correlate Signals with Tracing

Use distributed tracing to map requests across services. Tools like Jaeger or Zipkin help you:

- Trace latency across hops

- Find the exact service causing spikes in error rates

- Visualize traffic flows and bottlenecks
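The idea of tracing latency across hops can be illustrated without any tracing backend. The sketch below takes a simplified list of spans and attributes time to each service by subtracting the time spent in child spans. The span format is a hypothetical simplification (not the Jaeger or Zipkin data model), and it assumes child calls run one after another rather than in parallel.

```python
from collections import defaultdict

def self_times(spans):
    """Time spent in each span itself, excluding its direct children."""
    child_total = defaultdict(float)
    for span in spans:
        if span["parent"] is not None:
            child_total[span["parent"]] += span["duration_ms"]
    return {s["id"]: s["duration_ms"] - child_total[s["id"]] for s in spans}

def slowest_service(spans):
    """Return (service, self time in ms) for the span with the most self time."""
    times = self_times(spans)
    worst = max(spans, key=lambda s: times[s["id"]])
    return worst["service"], times[worst["id"]]

# Hypothetical trace of one request crossing four services.
trace = [
    {"id": "a", "parent": None, "service": "gateway", "duration_ms": 480},
    {"id": "b", "parent": "a", "service": "auth", "duration_ms": 60},
    {"id": "c", "parent": "a", "service": "payments", "duration_ms": 390},
    {"id": "d", "parent": "c", "service": "ledger-db", "duration_ms": 350},
]
print(slowest_service(trace))
```

Although the gateway span has the longest total duration, most of that time is spent waiting on downstream calls, so the self-time view points at the deepest slow hop instead.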

5. Automate Alerting with Context

Set thresholds and anomaly detection for each signal:

- Latency > 500ms? Alert DevOps

- Saturation > 90%? Trigger autoscaling

- Error Rate > 2% over 5 mins? Notify engineering and create an incident ticket
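The three example thresholds above can be expressed as data and evaluated against a snapshot of metrics. This is a minimal sketch: the metric keys are hypothetical, the error rate is assumed to be pre-aggregated over a 5-minute window, and real alerting systems usually add deduplication and routing on top.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    signal: str       # key in the metrics snapshot
    threshold: float  # rule fires when the value is strictly above this
    action: str       # what to do when the rule fires

# Thresholds taken from the list above; the metric key names are assumptions.
RULES = [
    Rule("latency_ms", 500, "alert DevOps"),
    Rule("saturation", 0.90, "trigger autoscaling"),
    Rule("error_rate_5m", 0.02, "notify engineering and create an incident ticket"),
]

def evaluate(metrics, rules=RULES):
    """Return the actions for every rule whose threshold is exceeded."""
    return [r.action for r in rules if metrics.get(r.signal, 0) > r.threshold]

# Hypothetical snapshot for one service.
snapshot = {"latency_ms": 620, "saturation": 0.78, "error_rate_5m": 0.031}
actions = evaluate(snapshot)
print(actions)
```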

How can the “one-hop dependency view” assist in troubleshooting?

The “one-hop dependency view” in application performance monitoring (APM) simplifies troubleshooting by focusing only on the services that directly impact the affected service.

Here’s how it helps:

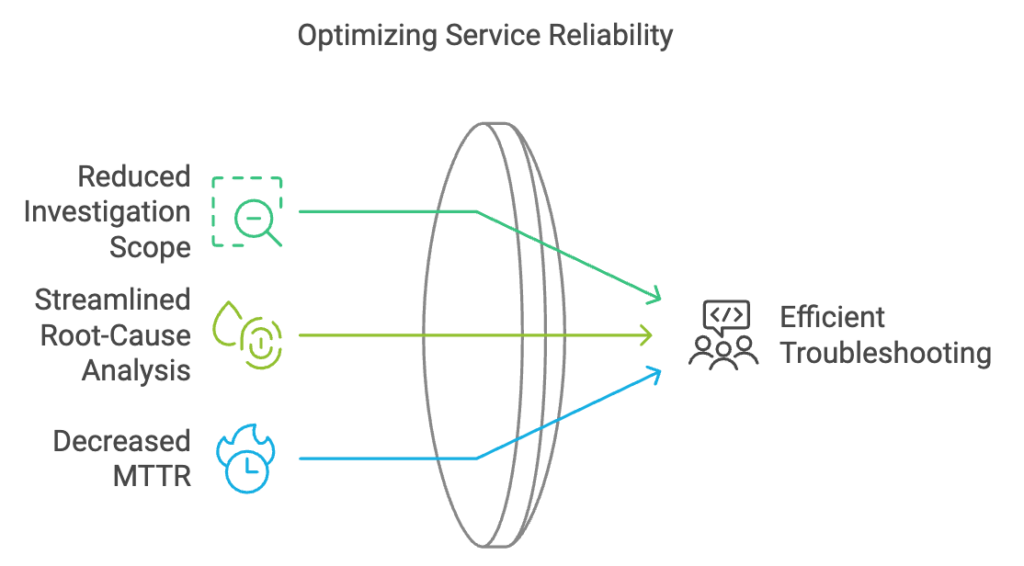

▪️Reduces Investigation Scope

Rather than analyzing the entire microservices topology, the one-hop view narrows the scope to immediate dependencies. This selective approach allows engineers to focus on the most likely sources of issues, saving time in identifying the root cause.

▪️Streamlines Root-Cause Analysis

By examining only the services one level away, the team can apply the golden signals (latency, errors, traffic, saturation) to detect any anomalies quickly. If a direct dependency is experiencing problems, it becomes immediately apparent without unnecessary complexity.

▪️Decreases Mean-Time-to-Recovery (MTTR)

With fewer services to investigate, the MTTR is significantly reduced. Engineers can identify and address the root issue faster, minimizing downtime and maintaining the application’s reliability.

Using the one-hop dependency view helps SRE teams keep the troubleshooting process efficient, especially in complex, interdependent service ecosystems.
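A one-hop view can be sketched as a lookup in a dependency graph that returns only the services a given service calls directly. The graph, the health flags, and the function names below are hypothetical; in practice an APM tool would derive them from traces.

```python
def one_hop(dependencies, service):
    """Services that `service` calls directly, in sorted order."""
    return sorted(dependencies.get(service, ()))

def unhealthy_neighbors(dependencies, health, service):
    """Direct dependencies of `service` currently marked unhealthy."""
    return [dep for dep in one_hop(dependencies, service)
            if not health.get(dep, True)]

# Hypothetical call graph: service -> set of services it calls.
dependencies = {
    "checkout": {"payments", "cart", "auth"},
    "payments": {"ledger-db"},
    "cart": {"redis"},
}
# Hypothetical health derived from golden-signal thresholds.
health = {"auth": True, "cart": True, "payments": False, "ledger-db": False}

print(one_hop(dependencies, "checkout"))
print(unhealthy_neighbors(dependencies, health, "checkout"))
```

Starting from `checkout`, only `payments` is reported; `ledger-db` is two hops away and comes into view only when the investigation moves on to `payments`.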

Practical Application: Using APM Dashboards

APM dashboards integrate Golden Signals into a single view, allowing teams to monitor all critical metrics at once. For example, the operations team can use an APM dashboard to see latency, errors, traffic, and saturation side by side. This holistic view simplifies troubleshooting and reduces MTTR.

Here’s how they work together:

▪️Centralized Monitoring with APM Dashboards:

APM tools provide dashboards that centralize the key Golden Signals—latency, errors, traffic, and saturation. This centralized view allows operations and development teams to monitor the health of their applications in real-time. By displaying these critical metrics in one place, APM tools simplify the identification of performance issues, making it easier to spot trends and anomalies that need attention.

▪️“One Hop” Dependency Views:

APM tools often support a “one hop” dependency view, which shows only the immediate downstream services connected to a problematic service. This feature is particularly useful in complex microservice environments where pinpointing the root cause of an issue can be daunting. By focusing on immediate dependencies, teams can quickly assess which services are functioning within normal parameters and which are experiencing issues, thereby speeding up the troubleshooting process.

▪️Proactive Issue Detection and Resolution:

Integrating Golden Signals into APM tools allows for proactive monitoring, where issues can be identified before they escalate into more serious problems. For example, if a service’s saturation levels begin trending upwards, the APM tool can alert the team before users experience degraded performance. This proactive approach helps reduce MTTR and improves overall service reliability.

▪️ Customization for Different Teams:

APM tools can be customized for different stakeholders within the organization. While the operations team may focus on all four Golden Signals, development teams might create specialized dashboards that prioritize the signals most relevant to their services. This tailored approach ensures that both dev and ops teams are aligned and can address issues quickly, often even before they impact the end-users.

In essence, the integration of SRE Golden Signals with APM tools empowers teams to maintain high levels of service performance and reliability by providing clear, actionable insights into the most critical aspects of their systems.

What is the significance of distinguishing 500 vs. 400 errors in SRE monitoring?

The distinction between 500 and 400 errors in SRE monitoring is crucial because it impacts how issues are prioritized and addressed.

Here’s a breakdown:

| Error Type | Cause | Severity | Response |
|---|---|---|---|
| 500 (server-side issue) | System/app failure | High | Immediate investigation |
| 400 (client-side request issue) | Bad input/auth | Lower | Monitor trends only |

500 Errors (Server Errors)

These indicate problems on the server side, such as crashes, unhandled exceptions, or failing dependencies. They require prompt attention because the server could not fulfill an otherwise valid request, and a sustained rate of them often results in significant disruption. For instance, a 500 error signals that something failed while the server was processing the request, meaning end-users receive an error instead of the result they asked for. Therefore, these errors are more critical in incident response and may trigger alerts for the SRE team.

400 Errors (Client Errors)

These typically indicate client-side issues, where a request is invalid or needs adjustment, like when the requested resource doesn’t exist or is restricted. Such errors are usually resolved by the client correcting the request (or, for a few codes such as 429, by retrying later), so they’re typically less urgent. Monitoring 400 errors can still reveal trends or user behavior that may require attention, but on their own they rarely indicate systemic issues.

In summary, recognizing the difference allows SREs to prioritize resources on issues that directly affect the system’s reliability and availability (like 500 errors) versus issues that may just need minor adjustments or retries.
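The prioritization in the table above can be sketched as a small triage function that maps a status code to its origin, severity, and suggested response. The labels mirror the table; the function name and return shape are assumptions for illustration.

```python
def triage(status_code):
    """Map an HTTP status code to (origin, severity, suggested response)."""
    if 500 <= status_code <= 599:
        return ("server", "high", "investigate immediately")
    if 400 <= status_code <= 499:
        return ("client", "lower", "monitor trends")
    return ("none", "none", "no action")

for code in (200, 404, 503):
    print(code, triage(code))
```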

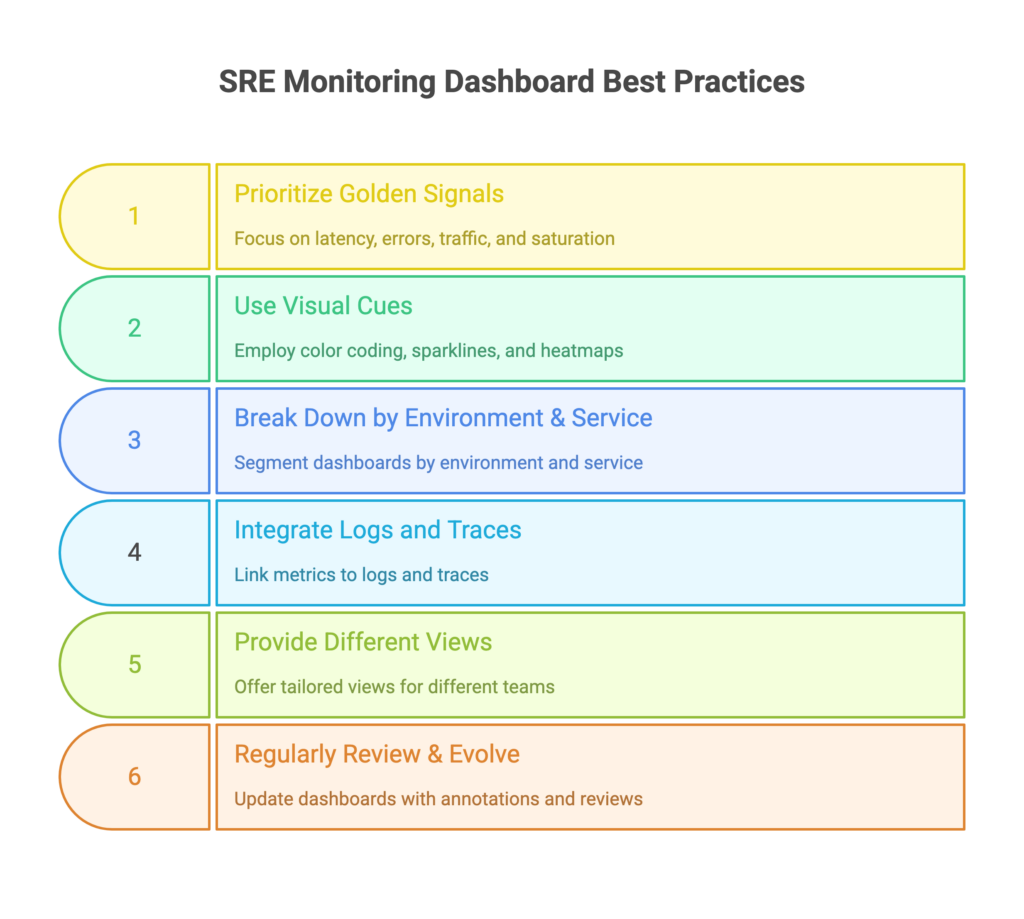

SRE Monitoring Dashboard Best Practices

A well-structured SRE dashboard makes or breaks your incident response. It’s not just about displaying data — it’s about surfacing the right insights at the right time. Here’s how to do it:

1. Prioritize Golden Signals Above All

Place latency, errors, traffic, and saturation front and center. Avoid clutter—these four are your frontline defense against performance issues.

Example Layout:

- Top row: Latency (P50/P95), Error Rate (%), Traffic (RPS), Saturation (CPU, Memory)

- Second row: SLIs, SLO burn rates, alerts over time

2. Use Visual Cues Effectively

- Color code thresholds: green (healthy), yellow (warning), red (critical)

- Sparklines for trend visualization

- Heatmaps to spot saturation across clusters or zones
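Color-coded thresholds reduce to a simple mapping from a value to a status. The sketch below assumes that higher values are worse and uses made-up warning and critical levels; dashboard tools typically offer this as built-in configuration rather than code.

```python
def status_color(value, warning, critical):
    """Return 'green', 'yellow', or 'red' for a higher-is-worse metric."""
    if value >= critical:
        return "red"
    if value >= warning:
        return "yellow"
    return "green"

# Hypothetical CPU saturation readings with 70% warning and 90% critical levels.
for cpu in (0.45, 0.78, 0.93):
    print(f"{cpu:.0%} -> {status_color(cpu, warning=0.70, critical=0.90)}")
```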

3. Break Down by Environment & Service

Segment dashboards by:

- Environment (prod, staging, dev)

- Service or team ownership

- Availability zone or region

This helps you quickly isolate issues when incidents arise.

4. Integrate Logs and Traces

Link metrics to logs or traces:

- Click on a spike in latency → see related trace in Jaeger or logs in Kibana

- Integrate dashboards with alert management (PagerDuty, Opsgenie)

5. Provide Different Views for Different Teams

- SRE/DevOps view: Full stack overview + real-time alerts

- Engineering view: Deep dive into a specific service’s metrics

- Management view: SLO dashboards and service health summaries

Use templating (in Grafana or Datadog) so one dashboard serves multiple roles.

6. Regularly Review & Evolve Dashboards

- Prune unused panels or metrics

- Reassess thresholds quarterly

- Add annotations for incidents or deployments

Dashboards should be living documents, not static reports. Learn from the official Google documentation.

Conclusion

Ready to take your system’s reliability and performance to the next level? Gart Solutions offers top-tier SRE Monitoring services to ensure your systems are always running smoothly and efficiently. Our experts can help you identify and address potential issues before they impact your business, ensuring minimal downtime and optimal performance.

Discover how Gart Solutions can enhance your system’s reliability today!

Read our IT Monitoring case studies (Monitoring Solution for a B2C SaaS Music Platform and Advanced Monitoring for Digital Landfill Management) to learn more about our SRE Monitoring expertise.

After implementing Golden Signals, our customer reduced MTTR by 60% in under two months.
