In this guide, we will explore various scenarios for working with GitLab CI/CD pipelines and provide examples of the most commonly used options. The template library will be expanded as needed.


A quick internet search will tell you what CI/CD is and why it's needed, and the full documentation on configuring pipelines in GitLab is readily available. Here, I'll briefly describe how the system works from a bird's-eye view:

A developer submits a commit to the repository, creates a merge request through the website, or initiates a pipeline explicitly or implicitly in some other way.

The pipeline configuration selects all tasks whose conditions allow them to run in the current context.

Tasks are organized according to their respective stages.

Stages are executed sequentially, while all tasks within a stage run in parallel (as long as enough runners are available).

If a stage fails (that is, at least one of its tasks fails and is not allowed to fail), the pipeline usually stops and later stages do not run.

If all stages are completed successfully, the pipeline is considered to have passed successfully.
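To make this ordering concrete, here is a minimal sketch; the job names and echo commands are illustrative placeholders. build-job runs first, and the two jobs in the test stage start in parallel once it succeeds:

```yaml
stages:
  - build
  - test

build-job:
  stage: build
  script:
    - echo "Building"

# Both jobs below belong to the test stage: they start in parallel
# after every job in the build stage has succeeded (runner capacity permitting).
unit-tests:
  stage: test
  script:
    - echo "Running unit tests"

lint:
  stage: test
  script:
    - echo "Running the linter"
```

If build-job fails, neither job in the test stage runs.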

In summary, we have:

A pipeline: a set of tasks organized into stages, where you can build, test, package code, deploy a ready build to a cloud service, and more.

A stage: a unit of organizing a pipeline, containing one or more tasks.

A task (job): a unit of work in the pipeline, consisting of a script (mandatory), launch conditions, settings for publishing/caching artifacts, and much more.

Setting up CI/CD therefore comes down to creating a set of tasks that implement every action needed to build, test, and publish code and artifacts.


Templates

In this section, we will provide several ready-made templates that you can use as a foundation for writing your own pipeline.

Minimal Scenario

For small projects that need only one or two jobs:

stages:
  - build

TASK_NAME:
  stage: build
  script:
    - ./build_script.sh

stages: Describes the stages of our pipeline. In this example, there is only one stage.

TASK_NAME: The name of our job.

stage: The stage to which our job belongs.

script: The list of shell commands to execute.

Standard Build Cycle

Typically, the CI/CD process includes the following steps:

Building the package.

Testing.

Delivery.

Deployment.

You can use the following template as a basis for such a scenario:

stages:
  - build
  - test
  - delivery
  - deploy

build-job:
  stage: build
  script:
    - echo "Start build job"
    - ./build-script.sh

test-job:
  stage: test
  script:
    - echo "Start test job"
    - ./test-script.sh

delivery-job:
  stage: delivery
  script:
    - echo "Start delivery job"
    - ./delivery-script.sh

deploy-job:
  stage: deploy
  script:
    - echo "Start deploy job"
    - ./deploy-script.sh

Jobs

In this section, we will explore options that can be applied when defining a job. The general syntax is as follows:

<TASK_NAME>:
  <OPTION1>: ...
  <OPTION2>: ...

We will list commonly used options, and you can find the complete list in the official documentation.

Stage

Documentation

This option specifies to which stage the job belongs. For example:

stages:
  - build
  - test

TASK_NAME_1:
  ...
  stage: build

TASK_NAME_2:
  ...
  stage: test

Stages are defined in the stages directive.

There are two special stages that do not need to be defined in stages:

.pre: Runs before executing the main pipeline jobs.

.post: Executes at the end, after the main pipeline jobs have completed.

For example:

stages:
  - build
  - test

getVersion:
  stage: .pre
  script:
    - VERSION=$(cat VERSION_FILE)
    - echo "VERSION=${VERSION}" > variables.env
  artifacts:
    reports:
      dotenv: variables.env

In this example, before any other job starts, we read the VERSION value from a file and pass it to later jobs as an environment variable through a dotenv report artifact.
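A later job can then read the value like any other variable. A minimal sketch, assuming a hypothetical build-job in the build stage of the same pipeline:

```yaml
build-job:
  stage: build
  script:
    # VERSION is injected from the dotenv report produced by getVersion
    - echo "Building version ${VERSION}"
```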

Image

Documentation

Specifies the name of the Docker image to use if the job runs in a Docker container:

TASK_NAME:
  ...
  image: debian:11

Before_script

Documentation

Defines a list of commands that run before the script commands, after artifacts from earlier jobs have been downloaded:

TASK_NAME:
  ...
  before_script:
    - echo "Run before_script"

Script

Documentation

The script option holds the main commands that the job executes. Let's explore it further.

Describing an array of commands: Simply list the commands that need to be executed sequentially in your job:

TASK_NAME:
  ...
  script:
    - command1
    - command2

Long commands split into multiple lines: Sometimes a command is long or contains shell logic (comparison operators in an if statement, for example). In this case, a multiline format is more convenient. YAML offers two block indicators:

Using | (literal): line breaks are kept, so each line runs as a separate command:

TASK_NAME:
  ...
  script:
    - |
      command_line1
      command_line2

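Using > (folded): consecutive lines are joined with spaces into a single command, and an empty line starts a new command:

TASK_NAME:
  ...
  script:
    - >
      command_line1
      command_line1_continue

      command_line2
      command_line2_continue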
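As an illustration of the | indicator with shell logic, here is a sketch of a hypothetical check-branch job that branches on the predefined variables CI_COMMIT_BRANCH and CI_DEFAULT_BRANCH:

```yaml
check-branch:
  script:
    # With |, the shell receives the lines below as one multiline script
    - |
      if [ "$CI_COMMIT_BRANCH" = "$CI_DEFAULT_BRANCH" ]; then
        echo "Running on the default branch"
      else
        echo "Running on branch $CI_COMMIT_BRANCH"
      fi
```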

After_script

Documentation

A set of commands that are run after the script, even if the script fails:

TASK_NAME:
  ...
  script:
    ...
  after_script:
    - command1
    - command2
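One detail worth knowing: after_script runs in a new shell, separate from before_script and script, so shell variables set in script are not visible there. A minimal sketch with a hypothetical job name:

```yaml
show-shells:
  script:
    - MY_VAR="set in script"
    - echo "$MY_VAR"   # prints: set in script
  after_script:
    # New shell: the MY_VAR assigned in script is not set here
    - echo "$MY_VAR"
```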

Artifacts

Documentation

Artifacts are intermediate builds or files that can be passed from one stage to another.

You can specify which files or directories will be artifacts:

TASK_NAME:
  ...
  artifacts:
    paths:
      - ${PKG_NAME}.deb
      - ${PKG_NAME}.rpm
      - "*.txt"
      - configs/

In this example, artifacts will include all files with names ending in .txt, ${PKG_NAME}.deb, ${PKG_NAME}.rpm, and the configs directory. ${PKG_NAME} is a variable (more on variables below). The *.txt pattern is quoted because, in YAML, an unquoted value starting with * is read as an alias.

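By default, jobs in later stages download the artifacts of jobs from earlier stages, so the files appear in their working directory and can be used by name, for example:

TASK_NAME_2:
  ...
  script:
    - cat *.txt
    # On an RPM-based image:
    - yum -y localinstall ${PKG_NAME}.rpm
    # On a Debian-based image:
    - apt -y install ./${PKG_NAME}.deb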

You can also pass environment variables that you define in a file:

TASK_NAME:
  ...
  script:
    - echo "VERSION=1.1.1" > variables.env
    ...
  artifacts:
    reports:
      dotenv: variables.env

In this example, we pass the environment variable VERSION with the value 1.1.1 to later jobs through the variables.env file.

If necessary, you can exclude specific files by name or pattern:

TASK_NAME:
  ...
  artifacts:
    paths:
      ...
    exclude:
      - .git/**/*

In this example, we exclude the contents of the .git directory, which holds repository metadata. Note that unlike paths, exclude is not recursive: to exclude everything in a directory, match its contents explicitly (for example, with dir/**/*) rather than the directory itself.
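For a concrete picture of exclude, here is a sketch of a hypothetical job that keeps the build/ directory as an artifact but drops compiled object files from it:

```yaml
build-job:
  stage: build
  script:
    - mkdir -p build
    - echo "app" > build/app
    - echo "object file" > build/app.o
  artifacts:
    paths:
      - build/
    exclude:
      # Matches .o files at any depth inside build/
      - build/**/*.o
```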

Extends

Documentation

Lets you move part of a job's configuration into a separate block and reuse it in other jobs. To better understand this, let's look at a specific example:

.my_extend:
  stage: build
  variables:
    USERNAME: my_user
  script:
    - extend script

TASK_NAME:
  extends: .my_extend
  variables:
    VERSION: 123
    PASSWORD: my_pwd
  script:
    - task script

In this case, in our TASK_NAME job, we use extends. As a result, the job will look like this:

TASK_NAME:
  stage: build
  variables:
    VERSION: 123
    PASSWORD: my_pwd
    USERNAME: my_user
  script:
    - task script

What happened:

stage: build came from .my_extend.

Variables were merged, so the job includes VERSION, PASSWORD, and USERNAME.

The script is taken from the job: extends merges hashes such as variables, but other values, including arrays like script, are overwritten by the job's own value.
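The main benefit of extends shows when several jobs share one template. A sketch reusing the .my_extend block from the example above (the two job names are hypothetical): both jobs inherit stage: build and USERNAME, and each keeps its own script:

```yaml
.my_extend:
  stage: build
  variables:
    USERNAME: my_user
  script:
    - extend script

build-linux:
  extends: .my_extend
  script:
    - echo "Building for Linux as ${USERNAME}"

build-windows:
  extends: .my_extend
  script:
    - echo "Building for Windows as ${USERNAME}"
```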

Environment

Documentation

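Specifies the environment that the job deploys to, such as staging or production. GitLab tracks the deployments made to each environment:

TASK_NAME:
  ...
  environment:
    name: production

Note that environment does not create environment variables. To set variables such as RSYNC_PASSWORD or EDITOR for a job, use the variables directive described in the Variables section:

TASK_NAME:
  ...
  variables:
    RSYNC_PASSWORD: rpass
    EDITOR: vi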

Release

Documentation

Creates a release of your project in GitLab:

TASK_NAME:
  ...
  release:
    name: 'Release $CI_COMMIT_TAG'
    tag_name: '$CI_COMMIT_TAG'
    description: 'Created using the release-cli'
    assets:
      links:
        - name: "myprogram-${VERSION}"
          url: "https://gitlab.com/master.dmosk/project/-/jobs/${CI_JOB_ID}/artifacts/raw/myprogram-${VERSION}.tar.gz"
  rules:
    - if: $CI_COMMIT_TAG

Note the if rule (explained below): the job runs only in pipelines triggered by a tag.
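Because script is mandatory in every job and the release keyword relies on the release-cli tool, a complete release job usually also declares an image that contains it. A sketch, assuming the registry.gitlab.com/gitlab-org/release-cli:latest image that GitLab publishes and a hypothetical release stage:

```yaml
stages:
  - release

release-job:
  stage: release
  image: registry.gitlab.com/gitlab-org/release-cli:latest
  rules:
    # Run only in pipelines triggered by a tag
    - if: $CI_COMMIT_TAG
  script:
    - echo "Creating release for $CI_COMMIT_TAG"
  release:
    name: 'Release $CI_COMMIT_TAG'
    tag_name: '$CI_COMMIT_TAG'
    description: 'Created using the release-cli'
```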


Rules and Constraints Directives

You can control job execution with directives that define rules and conditions, running or skipping jobs depending on the context. This section covers several useful ones.

Rules

Documentation

Rules define the conditions under which a job is added to the pipeline. A rule can use the following clauses:

if

changes

exists

allow_failure

variables

The if Operator: Allows you to check a condition, such as whether a variable is equal to a specific value:

TASK_NAME:
  ...
  rules:
    - if: '$CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH'

In this example, the commit must be made to the default branch.

Changes: Checks whether a commit changed specific files, using the changes option. In this example, the job runs if the script.sql file has changed:

TASK_NAME:
  ...
  rules:
    - changes:
        - script.sql

Multiple Conditions: You can have multiple conditions for starting a job. Let's explore some examples.

a) If the commit is made to the default branch AND changes affect the script.sql file:

TASK_NAME:
  ...
  rules:
    - if: '$CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH'
      changes:
        - script.sql

b) If the commit is made to the default branch OR changes affect the script.sql file:

TASK_NAME:
  ...
  rules:
    - if: '$CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH'
    - changes:
        - script.sql
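Rules are evaluated in order, and the first matching rule decides whether the job is added to the pipeline; if no rule matches, the job is skipped. This makes when: never (covered below) useful for excluding cases up front. A sketch with a hypothetical deploy-job that never runs in scheduled pipelines but does run for commits to the default branch:

```yaml
deploy-job:
  script:
    - echo "Deploying"
  rules:
    # Checked first: scheduled pipelines never get this job
    - if: '$CI_PIPELINE_SOURCE == "schedule"'
      when: never
    # Otherwise, add the job for commits to the default branch
    - if: '$CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH'
```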

Checking File Existence: Determined using exists:

TASK_NAME:
  ...
  rules:
    - exists:
        - script.sql

The job will only execute if the script.sql file exists.

Allowing Job Failure: Defined with allow_failure:

TASK_NAME:
  ...
  rules:
    - allow_failure: true

In this example, the pipeline continues even if the TASK_NAME job fails.

Conditional Variable Assignment: You can conditionally assign variables using a combination of if and variables:

TASK_NAME:
  ...
  rules:
    - if: '$CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH'
      variables:
        DEPLOY_VARIABLE: "production"
    - if: '$CI_COMMIT_BRANCH =~ /demo/'
      variables:
        DEPLOY_VARIABLE: "demo"

When

Documentation

The when directive determines when a job runs: for example, only after a manual start or after a delay. Possible values include:

on_success (default): Runs if all previous jobs have succeeded or have allow_failure: true.

manual: Requires a manual start (a run button appears next to the job in the pipeline view in GitLab).

always: Runs always, regardless of previous results.

on_failure: Runs only if at least one previous job has failed.

delayed: Delays job execution. You can control the delay using the start_in directive.

never: Never runs.

Let's explore some examples:

Manual:

TASK_NAME:
  ...
  when: manual

The job won't start until you manually trigger it in the GitLab CI/CD panel.

Always:

TASK_NAME:
  ...
  when: always

The job will always run. Useful, for instance, when generating a report regardless of build results.

On_failure:

TASK_NAME:
  ...
  when: on_failure

The job will run only if a job in an earlier stage fails. You can use this for sending notifications.
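A common use of on_failure is a notification job in a final stage. A minimal sketch; the job and stage names are hypothetical, and the notification is reduced to an echo (a real job would call whatever chat or mail tool you use):

```yaml
stages:
  - build
  - notify

build-job:
  stage: build
  script:
    - ./build_script.sh

notify-failure:
  stage: notify
  # Runs only if a job in an earlier stage has failed
  when: on_failure
  script:
    - echo "Pipeline $CI_PIPELINE_ID failed"
```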

Delayed:

TASK_NAME:
  ...
  when: delayed
  start_in: 30 minutes

The job will be delayed by 30 minutes.

Never:

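TASK_NAME:
  ...
  rules:
    - when: never

The job will never run. Note that when: never is valid only inside rules or workflow:rules, not directly at the job level.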

Using with if:

TASK_NAME:
  ...
  rules:
    - if: '$CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH'
      when: manual

In this example, the job is added to the pipeline only for commits to the default branch, and it runs only after a user with permission to run it starts it manually.

Needs

Documentation

Specifies which jobs a job depends on: the job starts as soon as those jobs finish, instead of waiting for the entire previous stage, and you can control whether it downloads their artifacts. This gives you finer control over the order in which jobs run.

Let's look at some examples.

Artifacts: Each entry in needs accepts an artifacts keyword with the value true (default) or false, which controls whether the job downloads artifacts from the job it needs. With this configuration:

TASK_NAME:
  ...
  needs:
    - job: createBuild
      artifacts: false

...the job waits for createBuild but starts without downloading its artifacts.

Job: You can start a job only after another job has completed:

TASK_NAME:
  ...
  needs:
    - job: createBuild

In this example, the task starts as soon as the job named createBuild has completed successfully, without waiting for the other jobs in earlier stages.
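Together, these options let you build a dependency graph instead of strict stage-by-stage ordering. In the sketch below (job names are hypothetical), test-linux starts as soon as build-linux finishes, even if build-windows is still running:

```yaml
stages:
  - build
  - test

build-linux:
  stage: build
  script:
    - echo "Building for Linux"

build-windows:
  stage: build
  script:
    - echo "Building for Windows"

test-linux:
  stage: test
  # Wait only for build-linux and download its artifacts (the default)
  needs:
    - job: build-linux
  script:
    - echo "Testing the Linux build"
```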


Variables

In this section, we will discuss user-defined variables that you can use in your pipeline scripts as well as some built-in variables that can modify the pipeline's behavior.

User-Defined Variables

User-defined variables are set using the variables directive. You can define them globally for all jobs:

variables:
  PKG_VER: "1.1.1"

Or for a specific job:

TASK_NAME:
  ...
  variables:
    PKG_VER: "1.1.1"

You can then use your custom variable in your script by prefixing its name with a dollar sign. Enclosing the name in curly braces is optional but keeps it unambiguous next to other text, for example:

script:
  - echo ${PKG_VER}
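When the same variable is defined both globally and in a job, the job-level value takes precedence for that job. A small sketch with hypothetical job names:

```yaml
variables:
  PKG_VER: "1.1.1"

default-version:
  script:
    - echo "${PKG_VER}"   # uses the global value, 1.1.1

custom-version:
  variables:
    PKG_VER: "2.0.0"
  script:
    - echo "${PKG_VER}"   # the job-level value 2.0.0 overrides the global one
```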

GitLab Variables

These variables help you control the build process. Here they are with descriptions of their properties:

LOG_LEVEL: Sets the log level. Options: debug, info, warn, error, fatal, and panic. Has lower priority than the --debug and --log-level command-line arguments. Example: LOG_LEVEL: warning

CI_DEBUG_TRACE: Enables or disables debug tracing. Takes the values true or false. Example: CI_DEBUG_TRACE: true

CONCURRENT: Limits the number of jobs that can run simultaneously. Example: CONCURRENT: 5

GIT_STRATEGY: Controls how files are fetched from the repository. Options: clone, fetch, and none (don't fetch). Example: GIT_STRATEGY: none
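For example, a job that only sends a notification does not need the repository at all. A sketch of a hypothetical notify job that skips fetching it with GIT_STRATEGY:

```yaml
notify:
  variables:
    # Do not clone or fetch the repository for this job
    GIT_STRATEGY: none
  script:
    - echo "No source checkout needed for job $CI_JOB_NAME"
```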

Additional Options

In this section, we will cover various options not covered in the other sections.

Workflow: Allows you to define common rules for the entire pipeline. Let's look at an example from the official GitLab documentation:

workflow:
  rules:
    - if: $CI_COMMIT_TITLE =~ /-draft$/
      when: never
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH

In this example, the pipeline:

Won't be triggered if the commit title ends with "-draft".

Will be triggered if the pipeline source is a merge request event.

Will be triggered if changes are made to the default branch of the repository.

Default Values: Defined in the default directive. Options with these values will be used in all jobs but can be overridden at the job level.

default:
  image: centos:7
  tags:
    - ci-test

In this example, we've defined an image (e.g., a Docker image) and tags (which may be required for some runners).
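A job can still override any default. In this sketch (job names are hypothetical), the first job inherits both defaults, while the second replaces the image but keeps the tag:

```yaml
default:
  image: centos:7
  tags:
    - ci-test

uses-defaults:
  # Inherits image centos:7 and the ci-test tag
  script:
    - cat /etc/centos-release

custom-image:
  # Overrides the image but still inherits the ci-test tag
  image: debian:11
  script:
    - cat /etc/debian_version
```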

Import Configuration from Another YAML File: This can be useful for creating a shared part of a script that you want to apply to all pipelines or for breaking down a complex script into manageable parts. It is done using the include option and has different ways to load files. Let's explore them in more detail.

a) Local File Inclusion (local):

include:
  - local: .gitlab/ci/build.yml

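b) Templates from the GitLab template library (template):

include:
  - template: Custom/.gitlab-ci.yml

In this example, we include the contents of the Custom/.gitlab-ci.yml template. The template keyword loads files from the collection of CI/CD templates that ships with GitLab.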

c) External File Available via HTTP (remote):

include:
  - remote: 'https://gitlab.dmosk.ru/main/build.yml'

d) Another Project:

include:
  - project: 'public-project'
    ref: main
    file: 'build.yml'

!reference tags: Let you define commands once and reuse them in different stages and jobs of your CI/CD configuration. For example:

.apt-cache:
  script:
    - apt update
  after_script:
    - apt clean

install:
  stage: install
  script:
    - !reference [.apt-cache, script]
    - apt install -y nginx

update:
  stage: update
  script:
    - !reference [.apt-cache, script]
    - apt upgrade -y
    - !reference [.apt-cache, after_script]

Let's break down what's happening in our example:

We created a job called .apt-cache. The dot at the beginning of the name makes it a hidden job, which GitLab never runs on its own. It consists of two sections, script and after_script, but it can contain more.

In the install job, one of the script lines pulls in the commands from the script section of .apt-cache.

In the update job, we reference .apt-cache twice: the first reference inserts the commands from its script section, and the second inserts those from its after_script section.

These are the fundamental concepts and options of GitLab CI/CD pipelines. You can use these directives and templates as a starting point for configuring your CI/CD pipelines efficiently. For more advanced use cases and additional options, refer to the official GitLab CI/CD documentation.

Recently, the security of software under development has become an increasingly urgent concern, and integrating secure development practices into existing processes has become imperative.

This is where DevSecOps comes into play—a powerful methodology that seamlessly integrates security practices into the software development process. In this article, we will explore the world of DevSecOps tools and how they play a pivotal role in enhancing software security, enabling organizations to stay one step ahead of potential threats.


Understanding DevSecOps Tools

DevSecOps tools are software applications and utilities designed to integrate security practices into the DevOps (Development and Operations) workflow. These tools aim to automate security checks, improve code quality, and ensure that security measures are an integral part of the software development process.

DevSecOps tools fall into several categories:

Continuous Integration (CI) tools for security testing

Continuous Integration (CI) tools play a vital role in automating security testing throughout the development pipeline. They enable developers to regularly test code changes for vulnerabilities and weaknesses, ensuring that security is integrated into every stage of development.

Continuous Deployment (CD) tools for secure deployment

Continuous Deployment (CD) tools facilitate the secure and automated release of software into production environments. By leveraging CD tools, organizations can ensure that security measures are applied consistently during the deployment process.


Security Information and Event Management (SIEM) tools for monitoring and incident response

SIEM tools help organizations monitor and analyze security events across their infrastructure. By providing real-time insights and automated incident response capabilities, SIEM tools empower teams to swiftly detect and respond to potential security breaches.

Here is a list of SIEM (Security Information and Event Management) tools: Splunk, IBM QRadar, ArcSight (now part of Micro Focus), LogRhythm, Sumo Logic, AlienVault (now part of AT&T Cybersecurity), SolarWinds Security Event Manager, Graylog, McAfee Enterprise Security Manager (ESM), Rapid7 InsightIDR.

Static Application Security Testing (SAST) tools for code analysis

SAST tools analyze the source code of applications to identify security flaws, vulnerabilities, and compliance issues. Integrating SAST into the development workflow allows developers to proactively address security concerns during the coding phase.

Some examples of Static Application Security Testing (SAST) tools: Fortify Static Code Analyzer, Checkmarx, Veracode Static Analysis, SonarQube, WhiteSource Bolt, Synopsys Coverity, Kiuwan, AppScan Source, Codacy.

Key Features of SAST Solutions:

Code Analysis: SAST solutions perform in-depth code analysis to identify security issues, such as SQL injection, cross-site scripting (XSS), and buffer overflows.

Early Detection: By integrating SAST into the development workflow, security issues can be identified and addressed during the coding phase, reducing the cost and effort required to fix vulnerabilities later in the development cycle.

Continuous Scanning: SAST solutions can be configured for continuous scanning, allowing developers to receive real-time feedback on security issues as code changes are made.

Integration with CI/CD Pipelines: SAST tools seamlessly integrate with Continuous Integration/Continuous Deployment (CI/CD) pipelines, ensuring security testing is an integral part of the software development process.

Compliance Checks: SAST solutions can help organizations adhere to industry-specific and regulatory security standards by identifying code that may violate compliance requirements.

False Positive Reduction: Modern SAST solutions employ advanced algorithms and heuristics to reduce false positives, providing developers with more accurate and actionable results.

Language Support: SAST solutions support a wide range of programming languages, enabling organizations to secure diverse application portfolios.

Remediation Guidance: SAST tools often provide guidance on how to remediate identified vulnerabilities, helping developers address security issues effectively.
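In GitLab specifically, SAST can be added to a pipeline through a template that ships with GitLab. A minimal sketch, assuming the Security/SAST.gitlab-ci.yml template is available in your GitLab version (which analyzers and features you get depends on your tier); the jobs it adds run in the test stage:

```yaml
stages:
  - test

include:
  # GitLab-maintained template that adds SAST analyzer jobs
  - template: Security/SAST.gitlab-ci.yml
```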

Dynamic Application Security Testing (DAST) tools for web application scanning

DAST tools perform dynamic scans of web applications in real-time, simulating attacks to identify potential vulnerabilities. By testing applications from the outside, DAST tools offer a comprehensive view of security risks.

Here is a list of Dynamic Application Security Testing (DAST) tools: OWASP ZAP (Zed Attack Proxy), Burp Suite, Acunetix, Netsparker, WebInspect, Qualys Web Application Scanning (WAS), AppScan Standard, Trustwave App Scanner (formerly Cenzic Hailstorm), Rapid7 AppSpider, Tenable.io Web Application Scanning.

Key Features of DAST Solutions:

Web Application Scanning: DAST tools focus on web applications and assess their security from an external perspective by sending crafted requests and analyzing responses.

Real-World Simulation: DAST solutions mimic actual attack scenarios, including SQL injection, cross-site scripting (XSS), and other common security threats, to identify vulnerabilities.

Comprehensive Coverage: DAST solutions analyze the entire application, including dynamic content generated by the server, to detect security issues across different pages and functionalities.

Automation and Scalability: DAST tools can be automated to perform regular and extensive scans, making them suitable for large-scale web applications and continuous testing.

Out-of-Band Testing: Some DAST tools can detect vulnerabilities that produce no visible change in the response, such as blind injection flaws, by watching for the application to make callbacks (for example, DNS or HTTP requests) to a server the tool controls.

Reduced False Positives: Modern DAST tools employ advanced techniques to minimize false positives, providing developers with accurate and reliable security findings.

Integration with CI/CD Pipelines: DAST solutions can be seamlessly integrated into CI/CD pipelines, allowing security testing to be an integral part of the software development process.

Compliance Support: DAST tools help organizations meet industry standards and regulatory requirements by detecting security weaknesses that may lead to compliance violations.

Continuous Monitoring: Some DAST solutions offer continuous monitoring capabilities, enabling developers to receive real-time feedback on security issues as the application changes.

Interactive Application Security Testing (IAST) tools for real-time code analysis

IAST tools provide real-time security analysis by observing application execution. By combining dynamic and static analysis, IAST tools offer deeper insights into application security while minimizing false positives.

Here is a list of IAST (Interactive Application Security Testing) tools: Contrast Security, Hdiv Security, RIPS Technologies, Seeker by Synopsys (formerly Quotium Seeker), Waratek AppSecurity for Java, ShiftLeft, WhiteHat Security Sentinel IAST, AppSecTest by Pradeo, IriusRisk IAST.

Key Features of IAST Solutions:

Real-Time Analysis: IAST tools continuously monitor applications in real-time as they execute, capturing data on code behavior and interactions with the system to detect security flaws.

Code-Level Visibility: IAST solutions provide detailed information about vulnerabilities, including the exact line of code responsible for the issue, helping developers pinpoint and fix problems with greater accuracy.

Low False Positives: IAST reduces false positives by directly observing application behavior, resulting in more precise identification of true security vulnerabilities.

Minimal Performance Impact: IAST operates with low overhead, ensuring that security testing does not significantly impact the application's performance during runtime.

Comprehensive Security Coverage: By analyzing code execution paths, IAST solutions identify a wide range of vulnerabilities, including those arising from data flow, configuration, and authentication.

Seamless Integration: IAST tools easily integrate into existing development and testing environments, supporting various programming languages and frameworks.

Continuous Security Monitoring: IAST solutions enable continuous monitoring of applications, offering ongoing security assessment throughout the development lifecycle.

DevSecOps Collaboration: IAST fosters collaboration between development, security, and operations teams by providing real-time insights accessible to all stakeholders.

Remediation Guidance: IAST solutions not only identify vulnerabilities but also offer actionable remediation guidance, streamlining the process of fixing security issues.

Compliance Support: IAST assists organizations in meeting regulatory requirements by providing accurate and detailed security assessments.

Runtime Application Self-Protection (RASP) tools for runtime security

RASP tools operate within the application runtime environment, actively monitoring for malicious behavior and automatically blocking potential threats. These tools provide an additional layer of protection to complement other security measures.

Here is a list of RASP (Runtime Application Self-Protection) tools: Sqreen, Contrast Security, Waratek AppSecurity for Java, StackRox, Guardicore, Aqua Security, Datadog Security Monitoring, Wallarm, Arxan Application Protection.

Key Features of RASP Solutions:

Real-Time Protection: RASP tools actively monitor application behavior during runtime, detecting and blocking security threats as they occur in real-time.

Immediate Response: By residing within the application, RASP can take immediate action against threats without relying on external systems, ensuring faster response times.

Application-Centric Approach: RASP solutions focus on protecting the application itself, making them independent of external security configurations.

Automatic Policy Enforcement: RASP automatically enforces security policies based on the application's behavior, mitigating vulnerabilities and enforcing compliance.

Precise Attack Detection: RASP can identify and differentiate between legitimate application behavior and malicious activities, reducing false positives.

Low Performance Overhead: RASP operates with minimal impact on application performance, ensuring efficient security without compromising user experience.

Runtime Visibility: RASP solutions provide real-time insights into application security events, helping developers and security teams understand attack patterns and trends.

Adaptive Defense: RASP can dynamically adjust its protection strategies based on the evolving threat landscape, adapting to new attack vectors and tactics.

Application-Aware Security: RASP understands the unique context of the application, allowing it to tailor security responses to specific application vulnerabilities.

Continuous Protection: RASP continuously monitors the application, offering ongoing security coverage that extends throughout the application's lifecycle.

DevSecOps Integration: RASP seamlessly integrates into DevSecOps workflows, empowering developers with real-time security feedback and enabling collaboration between teams.

Compliance Support: RASP helps organizations meet regulatory requirements by providing active protection against security threats and potential data breaches.

DevSecOps Tools Table

CI/CD: Jenkins, GitLab CI, Travis CI, CircleCI

SAST: SonarQube, Veracode, Checkmarx, Fortify

DAST: OWASP ZAP, Burp Suite, Acunetix, Netsparker

IAST: Contrast Security, RASPberry

RASP: Sqreen, AppTrana, Guardicore

SIEM: Splunk, IBM QRadar, ArcSight, LogRhythm

Container Security: Aqua Security, Sysdig, Twistlock

Security Orchestration: Demisto, Phantom, Resilient

API Security: Postman, Swagger Inspector

Chaos Engineering: Gremlin, Chaos Monkey, Pumba

Policy as Code: Open Policy Agent (OPA), Rego, Kyverno

This table provides a selection of DevSecOps tools across different categories.


The bigger your product and the more people and companies it serves, the juicier a target it becomes for hackers itching to mess with your infrastructure. They might degrade your system's performance or even steal users' personal data.

DevSecOps, a practice within the DevOps framework, responds to these contemporary security challenges while still meeting the need for rapid software development.


In this article, I will explain why DevSecOps matters, how to assess its relevance for your organization, and what it takes to introduce this practice in your company.

What is DevSecOps?

DevSecOps is an approach to product development that integrates security from the very beginning. The main goal is to reduce the number of defects in the final product by addressing security concerns proactively throughout the software development process. Instead of dealing with the consequences of existing issues, the focus is on preventing their occurrence altogether.

It can be likened to planning for a warm house during construction rather than insulating it after realizing it's too cold. With DevSecOps, the emphasis is on avoiding vulnerabilities and weaknesses in the first place and taking the necessary measures at every stage of development to ensure a secure and robust end product. In practice, this means running checks at each stage of the pipeline:

Pre-commit Checks. Code inspection to detect the presence of sensitive information (such as passwords, secrets, tokens, etc.) that should not be included in the Git history.

Commit-time Checks. Checks performed during the commit process to ensure the correctness and security of the code in the repository.

Post-build Checks. Checks carried out after the application has been built, including artifact testing (e.g., Docker images).

Test-time Checks. Vulnerability testing of the deployed application (e.g., API scanning for common vulnerabilities).

Deploy-time Checks. Checks performed during the application deployment to assess the infrastructure for vulnerabilities.
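Several of these checks can be automated in CI. In GitLab, for instance, secret detection can run on every push as a safety net for the pre-commit check. A minimal sketch, assuming the Security/Secret-Detection.gitlab-ci.yml template that ships with GitLab (the jobs it adds and their stage are defined by the template, typically test):

```yaml
stages:
  - test

include:
  # GitLab-maintained template that scans commits for leaked secrets
  - template: Security/Secret-Detection.gitlab-ci.yml
```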

A few years ago, DevSecOps was primarily relevant for large companies with numerous products and extensive development teams. However, today, its importance is gradually extending to smaller players in the industry.

Previously, development efforts prioritized building a pilot version quickly and dealing with security later. Now, however, investors understand the importance of airtight security and ask about it, so DevSecOps is becoming relevant to a broader audience. Still, in practice, teams with fewer than 50 developers often find security less pressing and handle it with simpler, standard methods. Their main focus is business functionality, and security is addressed piecemeal after the product is built: vulnerabilities are found in the finished product with free scanners and penetration testing, and then fixed. As businesses grow and demand higher quality, security becomes paramount and deeply ingrained in the development process.

Consequently, companies reach a new level with their own requirements. The market demands faster responses, which raises the importance of the Time To Market metric and pushes teams to automate everything they can. Code is written, built, and deployed quickly: this is DevOps in full effect, automating build, delivery, and deployment. As development moves to a pipeline-driven model, security becomes a critical concern, which leads us to DevSecOps.

What are the Business Benefits?

The advantages for businesses are evident. As development speeds up with business growth, security should also keep pace, preferably taking a proactive approach. This is where DevSecOps becomes invaluable. When security practices are well-established and seamlessly integrated into the pipeline, automation becomes the norm. Detecting and rectifying bugs during the product's creation phase prevents a cascade of issues in subsequent products, saving significant resources.

Furthermore, meeting investor and client demands for prompt product delivery is crucial. Discovering errors at the final stage can lead to time-consuming fixes, delivery delays, contract breaches, and potential penalties. Prioritizing security throughout the development cycle therefore makes product development smoother, with fewer setbacks.

Ready to Strengthen Your Application Security? Learn How to Implement DevSecOps Best Practices Today! Contact Us

Security in Software Development

The industry is well acquainted with various practices that help ensure security at different stages of development. But what exactly is security? Can software ever be entirely free of vulnerabilities? Unfortunately, that is implausible: new vulnerabilities are constantly being introduced, and security researchers keep discovering them. The primary objective, therefore, is to minimize the number of "holes" and to detect and fix them quickly, before malicious actors exploit them.

To achieve this goal, the implementation of security practices becomes vital. Drawing an analogy with an automotive assembly line, we can better understand the importance of security throughout the development process.

During the blueprint phase, which is akin to the design stage in software development, we assess the software's architecture for correctness, authentication elements, database integration, and appropriate platform selection.

As we proceed to the parts stage, which corresponds to the integration of third-party libraries, we must ensure their functionality and check for any vulnerabilities or licensing issues.

The framework phase mirrors writing our own code, where we follow secure coding practices and prioritize data protection and encryption.

The parts-installation phase corresponds to assembling (building) the software. Here we can run basic dynamic tests to verify that the build is correct and that libraries are used properly.

Subsequently, in the testing stage, we perform comprehensive tests to observe how the software functions in its infrastructure and interacts with users.

Finally, the production phase marks the product's release to the world, where continuous monitoring tracks how it performs under real-world conditions.

Throughout each development stage, security should be thoroughly evaluated, much like checking for installed airbags, a functioning steering wheel, a key for the door, working seatbelts, and appropriate brakes in a car.

Conducting timely and continuous checks at each stage is essential to ensure security is ingrained within the development process, avoiding last-minute fixes and mitigating potential risks.

How Application Security Practices Are Evolving

Application Security has gained widespread acceptance as a mainstream concern in the cybersecurity landscape. The evolving market demands more innovative and efficient solutions, especially with the rise in API attacks and software supply chain vulnerabilities. As technology advances and market requirements change, new tools and modifications in the cybersecurity toolkit are emerging. To understand the current trends and the level of development in cybersecurity tools, we can refer to the Gartner Hype Cycle for Application Security, 2023 report.

The cycle comprises five distinct phases:

Innovation Trigger: Technologies in this phase have just been introduced to the cybersecurity domain and are only starting their journey.

Peak of Inflated Expectations: Technologies in this phase demonstrate some successful use cases but also experience setbacks. Companies strive to tailor these practices to their specific needs, but widespread adoption is yet to be achieved.

Trough of Disillusionment: Interest in technologies of this phase begins to decline as their implementation doesn't always yield desired results.

Slope of Enlightenment: At this stage, technologies have a solid track record of being beneficial to companies, leading to new generations of tools and an increase in demand.

Plateau of Productivity: In this final stage, technologies have well-defined tasks and applications, gaining momentum as mainstream cybersecurity solutions.

Now, let's explore DevSecOps and delve into the most impactful and compelling secure development practices, considering their business impact, technical complexity, and geopolitical implications.

DevSecOps in the Current Landscape

According to Gartner, DevSecOps has reached the "Plateau of Productivity" phase. It is now a mature mainstream approach, adopted by over 50% of the target audience. This methodology allows security teams to stay in sync with development and operations units during the creation of modern applications. The model integrates security tools seamlessly into DevOps and automates the processes involved in developing secure software. Consequently, DevSecOps helps businesses raise product security, align applications and processes with industry and regulatory standards, reduce vulnerability remediation costs, improve Time-to-Market metrics, and build developers' expertise.

While striving to establish an effective secure development process, companies face several challenges:

Poorly implemented AppSec practices and badly structured security processes can put security at odds with DevOps, leading developers to see security tools as obstacles to their work.

The wide variety of tools used in modern CI/CD pipelines complicates the smooth integration of DevSecOps.

Many developers lack security expertise and therefore do not fully understand the potential risks in their code. They may be reluctant to step outside the CI/CD pipeline to run security tests or review scan results, and they may struggle with false positives from SAST and DAST tools.

Open-source security solutions may contain malicious code, and there is a risk that such tools may become unavailable to users in Russia at any moment.

Despite these challenges, implementing DevSecOps can greatly benefit organizations by enhancing their security practices and ensuring the safety and compliance of their applications and processes.

Practices of DevSecOps in the Context of Modern Challenges

SCA (Software Composition Analysis): SCA involves analyzing the components and dependencies in software applications to identify and address vulnerabilities in third-party libraries or open-source code. With the increasing use of external libraries, SCA helps ensure that potential security risks from these components are mitigated.
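As a sketch, SCA can run as an ordinary pipeline job. The fragment below assumes Trivy's filesystem mode, which reads dependency lock files in the repository (for example `package-lock.json` or `poetry.lock`) and reports known vulnerabilities in the resolved packages; the job name and severity threshold are illustrative. On GitLab Ultimate, including the built-in `Security/Dependency-Scanning.gitlab-ci.yml` template is an alternative.

```yaml
dependency-scan:            # hypothetical job name
  stage: test
  image:
    name: aquasec/trivy:latest
    entrypoint: [""]        # override the trivy entrypoint so GitLab can run a shell
  script:
    # Scan third-party dependencies declared in the repository's lock files
    # and fail the job on HIGH or CRITICAL vulnerabilities
    - trivy fs --scanners vuln --exit-code 1 --severity HIGH,CRITICAL .
```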

MAST (Mobile Application Security Testing): MAST focuses on evaluating the security of mobile applications across various platforms. It involves conducting comprehensive security assessments to identify weaknesses and vulnerabilities specific to mobile app development.

Container Security: Containerization has become prevalent in modern application deployment. Container Security practices involve scanning container images for potential security flaws and continuously monitoring container runtime environments to prevent unauthorized access and data breaches.
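A minimal post-build image scan might look like the job below. It assumes an earlier `build` job pushed the image to the project's GitLab container registry under the hypothetical tag `$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA`. Trivy reads registry credentials from the `TRIVY_USERNAME` and `TRIVY_PASSWORD` environment variables, which are filled here from GitLab's predefined registry variables.

```yaml
container-scan:                     # hypothetical job name
  stage: test                       # runs after the default build stage
  image:
    name: aquasec/trivy:latest
    entrypoint: [""]
  variables:
    TRIVY_USERNAME: $CI_REGISTRY_USER
    TRIVY_PASSWORD: $CI_REGISTRY_PASSWORD
    IMAGE: $CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA   # assumed tag from the build job
  script:
    # Fail the job if the image contains HIGH or CRITICAL vulnerabilities
    - trivy image --exit-code 1 --severity HIGH,CRITICAL "$IMAGE"
```

GitLab also ships a `Security/Container-Scanning.gitlab-ci.yml` template (configured through the `CS_IMAGE` variable) if you prefer the built-in scanner.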

ASOC (Application Security Orchestration & Correlation): ASOC is about streamlining and automating security practices throughout the software development lifecycle. It includes integrating various security tools, orchestrating their actions, and correlating their findings to improve the efficiency and effectiveness of security assessments.

API Security Testing: With the increasing use of APIs in modern applications, API security testing is crucial. It involves evaluating the security of APIs, ensuring they are protected against potential attacks, and safeguarding sensitive data exchanged through these interfaces.
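One way to automate API security testing is the `zap-api-scan.py` script shipped in the OWASP ZAP Docker image. The sketch assumes the application is already deployed to a test environment and publishes an OpenAPI definition at the hypothetical URL in `API_SPEC_URL`; the job name is illustrative, and the job may need adjustment for your runner configuration.

```yaml
api-security-scan:                  # hypothetical job name
  stage: test
  image:
    name: ghcr.io/zaproxy/zaproxy:stable
    entrypoint: [""]
  variables:
    API_SPEC_URL: https://staging.example.com/openapi.json   # hypothetical endpoint
  script:
    # -t: target API definition; -f openapi: the definition format
    # -I: do not fail the job on warnings, only on FAIL-level alerts
    - zap-api-scan.py -t "$API_SPEC_URL" -f openapi -I
```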

Securing Development Environments: Securing development environments involves implementing robust security measures to protect the tools, platforms, and repositories used by developers during the software development process. This ensures that the codebase remains secure from the very beginning.

Chaos Engineering: Chaos Engineering is a proactive approach to testing system resilience. It involves simulating real-world scenarios and failures to identify potential weaknesses in applications and infrastructure and enhance their overall resilience.

SBOM (Software Bill of Materials): SBOM is a detailed inventory of all software components used in an application. It helps organizations track and manage their software supply chain, facilitating vulnerability management and risk assessment.
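An SBOM can be produced automatically for every build. The job below is a sketch that assumes Trivy and an image pushed to the project's GitLab registry under the hypothetical tag `$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA`; it writes a CycloneDX document and keeps it as a pipeline artifact.

```yaml
generate-sbom:                      # hypothetical job name
  stage: test
  image:
    name: aquasec/trivy:latest
    entrypoint: [""]
  variables:
    TRIVY_USERNAME: $CI_REGISTRY_USER
    TRIVY_PASSWORD: $CI_REGISTRY_PASSWORD
  script:
    # Produce a CycloneDX SBOM listing the packages found in the image
    - trivy image --format cyclonedx --output sbom.cdx.json "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA"
  artifacts:
    paths:
      - sbom.cdx.json               # keep the SBOM with the pipeline for later audits
```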

Policy as Code: Policy as Code means expressing security policies and compliance requirements as machine-readable code that can be checked automatically. By integrating policy checks into the CI/CD pipeline, organizations can ensure that applications adhere to security standards and regulatory guidelines.
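A minimal sketch of a policy check uses Conftest, an open-source tool that evaluates configuration files against policies written in Rego, the Open Policy Agent language. The `policy/` directory (Conftest's default policy location) and the `k8s/` manifests directory are assumptions about the repository layout, and the job name is hypothetical.

```yaml
policy-check:                       # hypothetical job name
  stage: test
  image:
    name: openpolicyagent/conftest:latest
    entrypoint: [""]
  script:
    # Evaluate the manifests in k8s/ against the Rego policies in policy/;
    # a policy violation makes conftest exit non-zero and fails the job
    - conftest test --policy policy/ k8s/
```

Keeping the policies in the repository means changes to them go through the same review process as application code.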

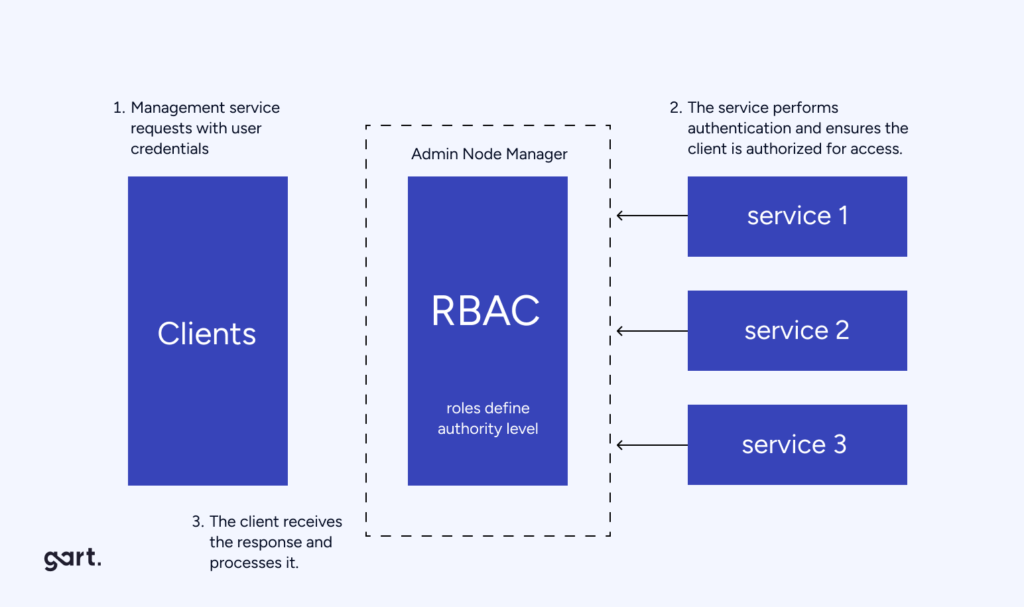

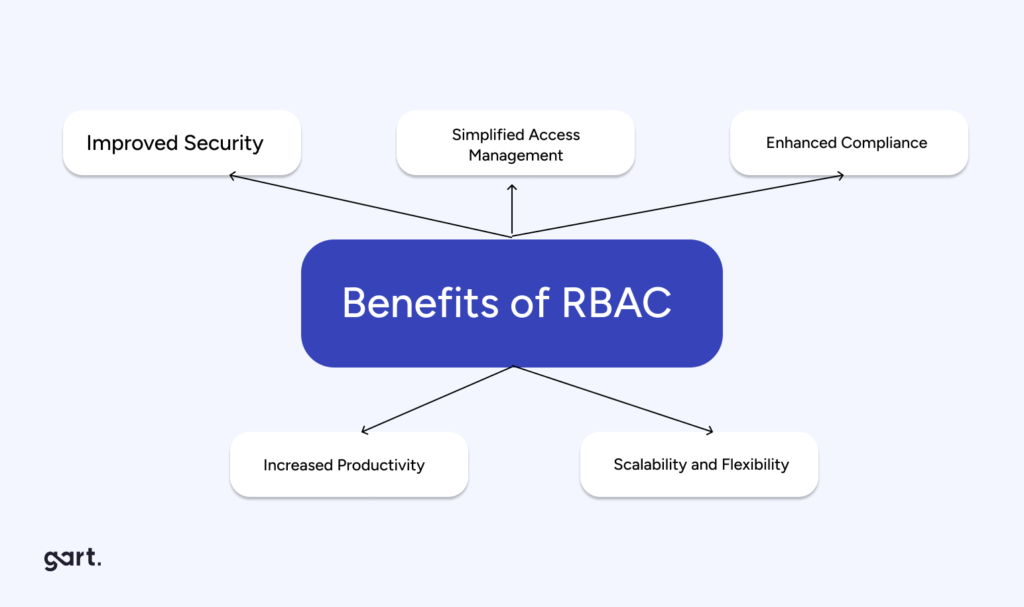

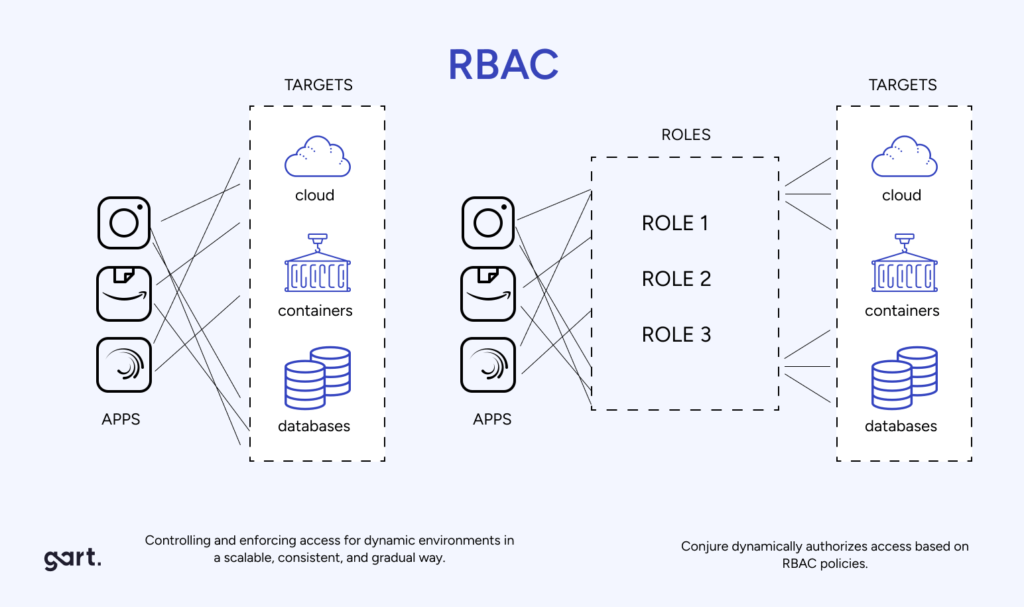

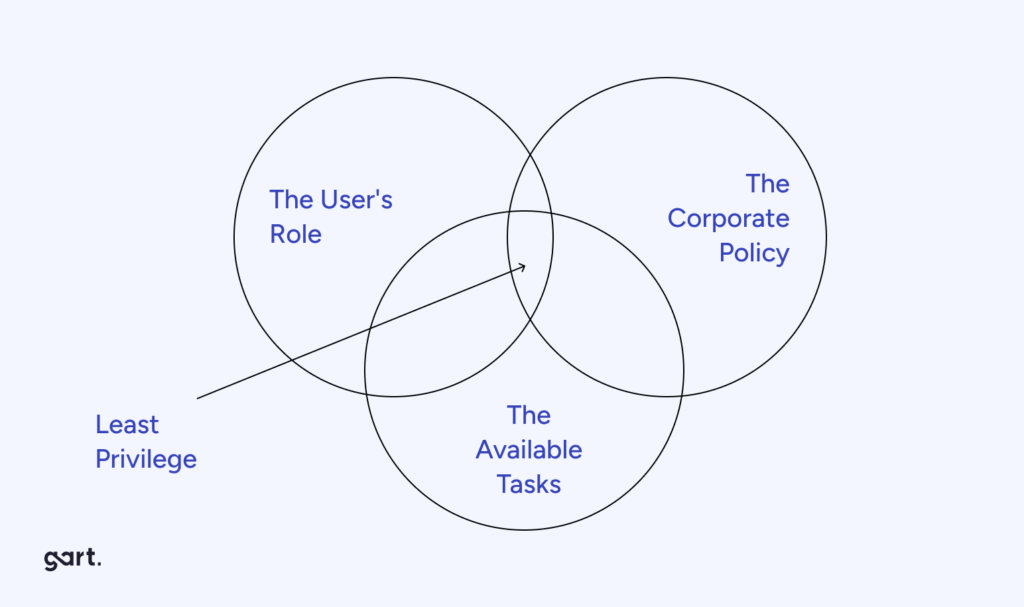

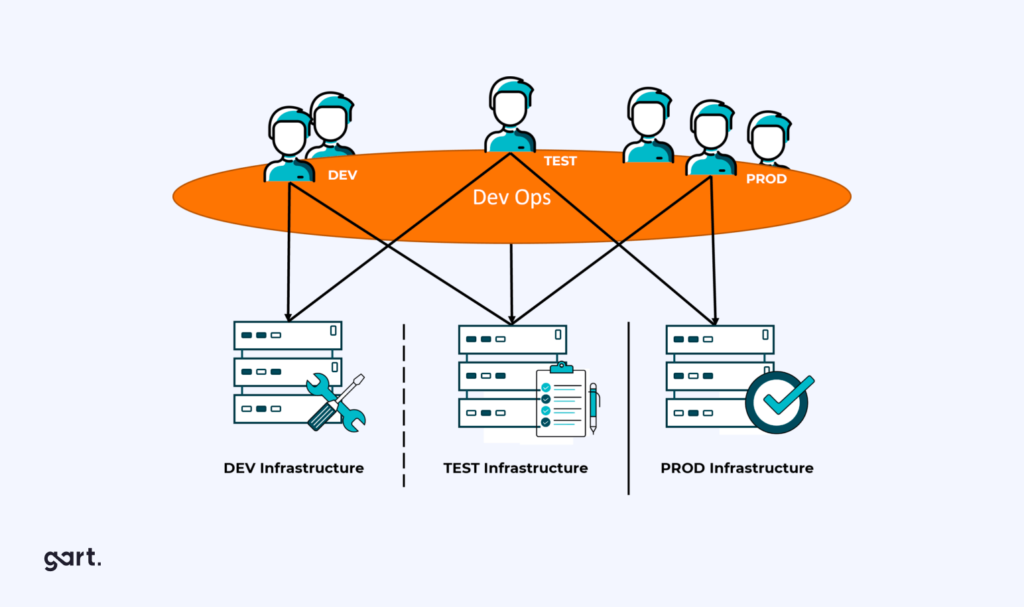

RBAC (Role-Based Access Control): RBAC restricts system access based on user roles. In CI/CD pipelines, it ensures that only authorized individuals have access to specific stages of the pipeline, enhancing security and control.
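In GitLab, one way to apply RBAC to the pipeline itself is to route deployments through a named environment and protect that environment (under Settings > CI/CD > Protected environments, on tiers that support it) so that only selected roles or users can run its jobs. The job below is a sketch; `deploy.sh` is a hypothetical script.

```yaml
deploy-production:
  stage: deploy
  script:
    - ./deploy.sh production        # hypothetical deployment script
  environment:
    name: production                # protect this environment in project settings
  rules:
    # Only offer the job on the default branch, and require a manual start
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH
      when: manual
```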

Implementing these DevSecOps practices can significantly enhance application security, address modern challenges, and foster a proactive approach to safeguarding software throughout its lifecycle.

Triggers for Implementation and Recommendations

Deciding when to prioritize the security of your products and commit to a serious DevSecOps implementation is a crucial decision. It depends on your industry, your market position, and the demands of your audience. Regulatory compliance and the assessment of potential risks are significant drivers for security.

Several triggers can prompt the adoption of DevSecOps practices:

A development team comprising more than 50 members.

The implementation of process automation in development, such as CI/CD and DevOps.

An emphasis on microservices architecture.

The need for post-implementation improvements in application security practices.

For companies with large development teams and multiple products, introducing DevSecOps should be a gradual process, involving the team in decision-making. Though initial challenges may arise, once the process functions efficiently, developers, other team members, investors, and stakeholders will recognize the benefits of these changes.

Before proceeding, it's wise to learn from successful implementations, consult experts, and evaluate the benefits gained by companies that have already adopted DevSecOps, so that your decisions are informed and backed by data.

Empower your team with DevOps excellence! Streamline workflows, boost productivity, and fortify security. Let's shape the future of your software development together. Inquire about our DevOps Consulting Services.