The cloud offers incredible scalability and agility, but as businesses increasingly embrace it, managing costs has become a critical concern. The flexibility of cloud services comes with a price tag that can quickly spiral out of control without proper optimization strategies in place.

In this post, I'll share some practical tips to help you maximize the value of your cloud investments while minimizing unnecessary expenses.


Main Components of Cloud Costs

| Component | Description |
| --- | --- |
| Compute Instances | Cost of virtual machines or compute instances used in the cloud. |
| Storage | Cost of storing data in the cloud, including object storage, block storage, etc. |
| Data Transfer | Cost associated with transferring data within the cloud or to/from external networks. |
| Networking | Cost of network resources like load balancers, VPNs, and other networking components. |
| Database Services | Cost of utilizing managed database services, both relational and NoSQL databases. |
| Content Delivery Network (CDN) | Cost of using a CDN for content delivery to end users. |
| Additional Services | Cost of using additional cloud services like machine learning, analytics, etc. |

Table Comparing Main Components of Cloud Costs

Are you looking for ways to reduce your cloud operating costs? Look no further! Contact Gart today for expert assistance in optimizing your cloud expenses.

10 Cloud Cost Optimization Strategies

Here are some key strategies to optimize your cloud spending:

Analyze Current Cloud Usage and Costs

Analyzing your current cloud usage and costs is an essential first step towards optimizing your cloud operating costs. Start by examining the cloud services and resources currently in use within your organization. This includes virtual machines, storage solutions, databases, networking components, and any other services utilized in the cloud. Take stock of the specific configurations, sizes, and usage patterns associated with each resource.

Once you have a comprehensive overview of your cloud infrastructure, identify any resources that are underutilized or no longer needed. These could be instances running at low utilization levels, storage volumes with little data, or services that have become obsolete or redundant. By identifying and addressing such resources, you can eliminate unnecessary costs.

Dig deeper into your cloud costs and identify the key drivers behind your expenditure. Look for patterns and trends in your usage data to understand which services or resources are consuming the majority of your cloud budget. It could be a particular type of instance, high data transfer volumes, or storage solutions with excessive replication. This analysis will help you prioritize cost optimization efforts.
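To make the idea of "key cost drivers" concrete, here is a minimal sketch that ranks services by their share of total spend. The record layout (`service`, `cost`) and the sample figures are assumptions for illustration only; a real billing export from your provider will have its own schema.

```python
from collections import defaultdict

def top_cost_drivers(line_items, top_n=3):
    """Sum cost per service and return the top_n (service, cost, share) tuples."""
    totals = defaultdict(float)
    for item in line_items:
        totals[item["service"]] += item["cost"]
    grand_total = sum(totals.values())
    ranked = sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
    return [
        (service, round(cost, 2), round(cost / grand_total, 3) if grand_total else 0.0)
        for service, cost in ranked[:top_n]
    ]

# Hypothetical billing rows (illustrative numbers, not real pricing).
sample = [
    {"service": "compute", "cost": 600.0},
    {"service": "storage", "cost": 150.0},
    {"service": "data_transfer", "cost": 200.0},
    {"service": "compute", "cost": 50.0},
]

for service, cost, share in top_cost_drivers(sample):
    print(f"{service}: ${cost} ({share:.1%} of total)")
```

Starting with the one or two services at the top of such a ranking usually gives the largest return on optimization effort.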

During this analysis phase, leverage the cost management tools provided by your cloud service provider. These tools often offer detailed insights into resource usage, costs, and trends, allowing you to make data-driven decisions for cost optimization.

Optimize Resource Allocation

Optimizing resource allocation is crucial for reducing cloud operating costs while ensuring optimal performance.

The main techniques are rightsizing resources, leveraging autoscaling, adopting reserved instances, utilizing spot instances, and optimizing storage.

Assess the utilization of your cloud resources and identify instances or services that are over-provisioned or underutilized. Right-sizing involves matching the resource specifications (e.g., CPU, memory, storage) to the actual workload requirements. Downsize instances that are consistently running at low utilization, freeing up resources for other workloads. Similarly, upgrade underpowered instances experiencing performance bottlenecks to improve efficiency.
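A right-sizing check can be as simple as comparing observed utilization against thresholds. The sketch below is one possible rule, not a provider's algorithm: the 20% and 80% CPU thresholds and the function name are assumptions you would tune for your own workloads.

```python
from statistics import mean

def rightsizing_advice(cpu_samples, low=20.0, high=80.0):
    """Return 'downsize', 'upsize', or 'keep' from CPU utilization samples (percent)."""
    avg = mean(cpu_samples)
    peak = max(cpu_samples)
    if avg > high:
        return "upsize"      # consistently saturated: likely a bottleneck
    if avg < low and peak < high:
        return "downsize"    # low on average and never close to the limit
    return "keep"

print(rightsizing_advice([5, 10, 8, 12]))   # low, flat usage
print(rightsizing_advice([85, 90, 95]))     # sustained high usage
```

Checking the peak as well as the average avoids downsizing an instance that is mostly idle but occasionally needs its full capacity.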

Take advantage of cloud scalability features to align resources with varying workload demands. Autoscaling allows resources to automatically adjust based on predefined thresholds or performance metrics. This ensures you have enough resources during peak periods while reducing costs during periods of low demand. Autoscaling can be applied to compute instances, databases, and other services, optimizing resource allocation in real-time.
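As an illustration of how a threshold-based scaler might decide on capacity, the sketch below uses a proportional rule: scale the current instance count by the ratio of the observed metric to its target, then clamp to configured limits. The function name and limits are assumptions; actual autoscaling services add cooldowns and smoothing on top of a rule like this.

```python
import math

def desired_capacity(current, metric, target, min_size=1, max_size=10):
    """Proportional scaling: keep the per-instance metric near the target value."""
    raw = math.ceil(current * metric / target)
    return max(min_size, min(max_size, raw))

# 4 instances at 90% average CPU with a 60% target -> scale out.
print(desired_capacity(current=4, metric=90, target=60))
# 4 instances at 15% average CPU with a 60% target -> scale in.
print(desired_capacity(current=4, metric=15, target=60))
```

The `min_size` and `max_size` bounds matter for cost control: the upper bound caps spending during unexpected spikes, and the lower bound preserves baseline availability.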

Reserved instances (RIs) or savings plans offer significant cost savings for predictable or consistent workloads over an extended period. By committing to a fixed term (e.g., 1 or 3 years) and prepaying for the resource usage, you can achieve substantial discounts compared to on-demand pricing. Analyze your workload patterns and identify instances that have steady usage to maximize savings with RIs or savings plans.
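Whether a commitment pays off depends on how many hours the instance actually runs. A rough way to reason about it: a reservation is paid for every hour of the term, while on-demand is paid only for hours used, so the break-even utilization is the ratio of the two hourly rates. The rates below are placeholders, not real prices.

```python
def break_even_utilization(on_demand_hourly, reserved_hourly):
    """Fraction of hours an instance must run for a reservation to pay off."""
    return reserved_hourly / on_demand_hourly

def cheaper_option(on_demand_hourly, reserved_hourly, utilization):
    """Compare effective hourly cost at a given utilization (0.0 to 1.0)."""
    on_demand_cost = on_demand_hourly * utilization
    return "reserved" if reserved_hourly < on_demand_cost else "on-demand"

# Hypothetical rates: $0.10/h on-demand vs. $0.06/h effective reserved rate.
print(f"break-even at {break_even_utilization(0.10, 0.06):.0%} utilization")
print(cheaper_option(0.10, 0.06, utilization=0.9))
print(cheaper_option(0.10, 0.06, utilization=0.4))
```

Under these assumed rates, an instance that runs less than about 60% of the time is cheaper on-demand, which is why steady workloads are the natural candidates for commitments.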

For workloads that are flexible and can tolerate interruptions, spot instances can be a cost-effective option. Spot instances are spare computing capacity offered at steep discounts (up to 90% off on AWS) compared to on-demand prices. However, these instances can be reclaimed by the cloud provider with little notice, making them suitable for fault-tolerant, interruptible tasks.
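What makes a task "interruptible" in practice is usually checkpointing: progress is saved as the job runs, so a reclaimed instance only loses the work since the last checkpoint. The following is a simplified simulation of that pattern. The in-memory `state` dictionary stands in for durable storage, and `interrupt_at` simulates the provider reclaiming the instance; both are assumptions for the sake of the example.

```python
def run_with_checkpoints(items, state, interrupt_at=None):
    """Process items from state['next'] onward; return False if interrupted."""
    for i in range(state["next"], len(items)):
        if interrupt_at is not None and i == interrupt_at:
            return False                       # instance reclaimed mid-run
        state["results"].append(items[i] * 2)  # stand-in for real work
        state["next"] = i + 1                  # checkpoint after each item
    return True

items = [1, 2, 3, 4, 5]
state = {"next": 0, "results": []}

finished = run_with_checkpoints(items, state, interrupt_at=3)  # interrupted
print(finished, state["next"])
finished = run_with_checkpoints(items, state)                  # resumes, no rework
print(finished, state["results"])
```

In a real deployment the checkpoint would be written to object storage or a database so that a replacement instance can pick it up.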

When optimizing resource allocation, it's crucial to continuously monitor and adjust your resource configurations based on changing workload patterns. Leverage cloud provider tools and services that provide insights into resource utilization and performance metrics, enabling you to make data-driven decisions for efficient resource allocation.

Implement Cost Monitoring and Budgeting

Implementing effective cost monitoring and budgeting practices is crucial for maintaining control over cloud operating costs.

Take advantage of the cost management tools and features offered by your cloud provider. These tools provide detailed insights into your cloud spending, resource utilization, and cost allocation. They often include dashboards, reports, and visualizations that help you understand the cost breakdown and identify areas for optimization. Familiarize yourself with these tools and leverage their capabilities to gain better visibility into your cloud costs.

Configure cost alerts and notifications to receive real-time updates on your cloud spending. Define spending thresholds that align with your budget and receive alerts when costs approach or exceed those thresholds. This allows you to proactively monitor and control your expenses, ensuring you stay within your allocated budget. Timely alerts enable you to identify any unexpected cost spikes or unusual patterns and take appropriate actions.
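The alerting logic itself is straightforward. As a rough sketch, the snippet below reports which budget thresholds have been crossed and adds a naive linear month-end forecast. The threshold values and function names are assumptions; provider budgeting tools offer the same idea as a managed feature.

```python
def budget_alerts(spend_to_date, monthly_budget, thresholds=(0.5, 0.8, 1.0)):
    """Return the budget thresholds (as fractions) that spending has reached."""
    ratio = spend_to_date / monthly_budget
    return [t for t in thresholds if ratio >= t]

def forecast_month_end(spend_to_date, day_of_month, days_in_month):
    """Naive linear projection of month-end spend from the daily run rate."""
    return spend_to_date / day_of_month * days_in_month

print(budget_alerts(850, 1000))            # thresholds already crossed
print(forecast_month_end(600, 15, 30))     # projected spend at current pace
```

Alerting on the forecast as well as on actual spend gives earlier warning, since a cost spike shows up in the projection before the budget is exhausted.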

Set a budget for your cloud operations, allocating specific spending limits for different services or departments. This budget should align with your business objectives and financial capabilities. Regularly review and analyze your cost performance against the budget to identify any discrepancies or areas for improvement. Adjust the budget as needed to optimize your cloud spending and align it with your organizational goals.

By implementing cost monitoring and budgeting practices, you gain better visibility into your cloud spending and can take proactive steps to optimize costs. Regularly reviewing cost performance allows you to identify potential cost-saving opportunities, make informed decisions, and ensure that your cloud usage remains within the defined budget.

Remember to involve relevant stakeholders, such as finance and IT teams, to collaborate on budgeting and align cost optimization efforts with your organization's overall financial strategy.

Use Cost-effective Storage Solutions

To optimize cloud operating costs, it is important to use cost-effective storage solutions.

Begin by assessing your storage requirements and understanding the characteristics of your data. Evaluate the available storage options, such as object storage and block storage, and choose the most suitable option for each use case. Object storage is ideal for storing large amounts of unstructured data, while block storage is better suited for applications that require high performance and low latency. By aligning your storage needs with the appropriate options, you can avoid overprovisioning and optimize costs.

Implement data lifecycle management techniques to efficiently manage your data throughout its lifecycle. This involves practices like data tiering, where you classify data based on its frequency of access or importance and store it in the appropriate storage tiers. Frequently accessed or critical data can be stored in high-performance storage, while less frequently accessed or archival data can be moved to lower-cost storage options. Archiving infrequently accessed data to cost-effective storage tiers can significantly reduce costs while maintaining data accessibility.
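A tiering policy can be expressed as a simple rule on how recently an object was accessed. In the sketch below, the tier names and the 30-day and 180-day cutoffs are assumptions; managed lifecycle policies from cloud providers let you configure equivalent rules declaratively.

```python
from datetime import date

def choose_tier(last_accessed, today, warm_after_days=30, archive_after_days=180):
    """Pick a storage tier from the number of days since last access."""
    age_days = (today - last_accessed).days
    if age_days >= archive_after_days:
        return "archive"
    if age_days >= warm_after_days:
        return "infrequent"
    return "hot"

today = date(2024, 6, 30)
print(choose_tier(date(2024, 6, 25), today))
print(choose_tier(date(2024, 4, 1), today))
print(choose_tier(date(2023, 6, 30), today))
```

When choosing cutoffs, keep in mind that colder tiers often charge for retrieval, so data that is read occasionally may still be cheaper in a warmer tier.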

Cloud providers often provide features such as data compression, deduplication, and automated storage tiering. These features help optimize storage utilization, reduce redundancy, and improve overall efficiency. By leveraging these built-in optimization features, you can lower your storage costs without compromising data availability or performance.

Regularly review your storage usage and make adjustments based on changing needs and data access patterns. Remove any unnecessary or outdated data to avoid incurring unnecessary costs. Periodically evaluate storage options and pricing plans to ensure they align with your budget and business requirements.

Employ Serverless Architecture

Employing a serverless architecture can significantly contribute to reducing cloud operating costs.

Embrace serverless computing platforms provided by cloud service providers, such as AWS Lambda or Azure Functions. These platforms allow you to run code without managing the underlying infrastructure. With serverless, you can focus on writing and deploying functions or event-driven code, while the cloud provider takes care of resource provisioning, maintenance, and scalability.

One of the key benefits of serverless architecture is its cost model, where you only pay for the actual execution of functions or event triggers. Traditional computing models require provisioning resources for peak loads, resulting in underutilization during periods of low activity. With serverless, you are charged based on the precise usage, which can lead to significant cost savings as you eliminate idle resource costs.
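To see how the pay-per-execution model compares with an always-on server, here is a back-of-the-envelope calculation. It assumes a billing shape of a per-request fee plus a fee per GB-second of execution; all rates are placeholders, so substitute your provider's current pricing before drawing conclusions.

```python
def serverless_monthly_cost(invocations, avg_duration_s, memory_gb,
                            price_per_million_requests, price_per_gb_second):
    """Request fee plus compute fee (duration x memory), per month."""
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = invocations * avg_duration_s * memory_gb * price_per_gb_second
    return request_cost + compute_cost

def always_on_monthly_cost(hourly_rate, hours=730):
    """An instance billed for every hour of an average month."""
    return hourly_rate * hours

# Hypothetical workload: 1M requests/month, 200 ms each, 0.5 GB of memory.
serverless = serverless_monthly_cost(1_000_000, 0.2, 0.5, 0.20, 0.00002)
server = always_on_monthly_cost(0.05)
print(f"serverless: ${serverless:.2f}, always-on: ${server:.2f}")
```

The comparison flips at high, sustained request volumes, where per-invocation charges can exceed the cost of a well-utilized instance, so it is worth rerunning the numbers as traffic grows.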

Serverless platforms automatically scale your functions based on incoming requests or events. This means that resources are allocated dynamically, scaling up or down based on workload demands. This automatic scaling eliminates the need for manual resource provisioning, reducing the risk of overprovisioning and ensuring optimal resource utilization. With automatic scaling, you can handle spikes in traffic or workload without incurring additional costs for idle resources.

When adopting serverless architecture, it's important to design your applications or functions to take full advantage of its benefits. Decompose your applications into smaller, independent functions that can be executed individually, ensuring granular scalability and cloud cost optimization.

Consider Multi-Cloud and Hybrid Cloud Strategies

Considering multi-cloud and hybrid cloud strategies can help optimize cloud operating costs while maximizing flexibility and performance.

Evaluate the pricing models, service offerings, and discounts provided by different cloud providers. Compare the costs of comparable services, such as compute instances, storage, and networking, to identify the most cost-effective options. Take into account the specific needs of your workloads and consider factors like data transfer costs, regional pricing variations, and pricing commitments. By leveraging competition among cloud providers, you can negotiate better pricing and optimize your cloud costs.

Analyze your workloads and determine the most suitable cloud environment for each workload. Some workloads may perform better or have lower costs in specific cloud providers due to their specialized services or infrastructure. Consider factors like latency, data sovereignty, compliance requirements, and service-level agreements (SLAs) when deciding where to deploy your workloads. By strategically placing workloads, you can optimize costs while meeting performance and compliance needs.

Adopt a hybrid cloud strategy that combines on-premises infrastructure with public cloud services. Utilize on-premises resources for workloads with stable demand or data that requires local processing, while leveraging the scalability and cost-efficiency of the public cloud for variable or bursty workloads. This hybrid approach allows you to optimize costs by using the most cost-effective infrastructure for different aspects of your data processing pipeline.

Automate Resource Management and Provisioning

Automating resource management and provisioning is key to optimizing cloud operating costs and improving operational efficiency.

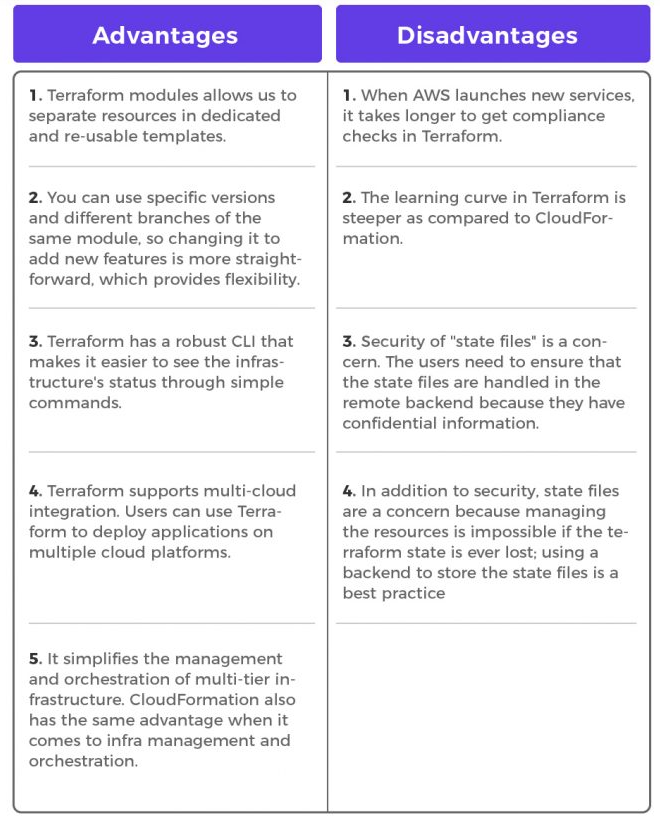

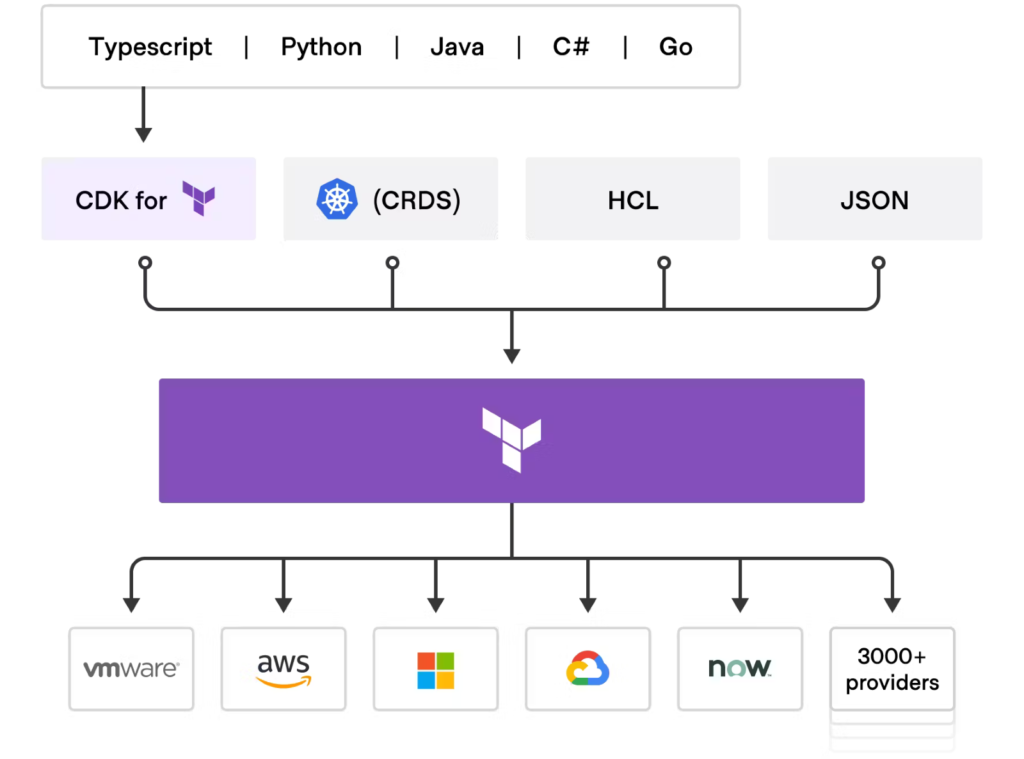

Infrastructure-as-code (IaC) tools such as Terraform or CloudFormation allow you to define and manage your cloud infrastructure as code. With IaC, you can express your infrastructure requirements in a declarative format, enabling automated provisioning, configuration, and management of resources. This approach ensures consistency, repeatability, and scalability while reducing manual efforts and potential configuration errors.

Automate the process of provisioning and deprovisioning cloud resources based on workload requirements. By using scripting or orchestration tools, you can create workflows or scripts that automatically provision resources when needed and release them when they are no longer required. This automation eliminates the need for manual intervention, reduces resource wastage, and optimizes costs by ensuring resources are only provisioned when necessary.
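One common form of this automation is scheduling: non-production resources run only during working hours. The sketch below shows just the decision logic, with an assumed schedule of 08:00 to 20:00 on weekdays; the actual start and stop calls depend on your provider's SDK and are left out.

```python
from datetime import datetime

def should_be_running(now, start_hour=8, stop_hour=20, workdays=(0, 1, 2, 3, 4)):
    """True if 'now' falls inside the working-hours window (Monday is 0)."""
    return now.weekday() in workdays and start_hour <= now.hour < stop_hour

print(should_be_running(datetime(2024, 6, 3, 10, 0)))   # Monday morning
print(should_be_running(datetime(2024, 6, 3, 22, 0)))   # Monday night
print(should_be_running(datetime(2024, 6, 1, 10, 0)))   # Saturday
```

A scheduled job could call this function periodically and start or stop tagged resources accordingly. With the assumed window, a development environment would run 60 of 168 hours per week.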

Auto-scaling enables your infrastructure to dynamically adjust its capacity based on workload demands. By setting up auto-scaling rules and policies, you can automatically add or remove resources in response to changes in traffic or workload patterns. This ensures that you have the right amount of resources available to handle workload spikes without overprovisioning during periods of low demand. Auto-scaling optimizes resource allocation, improves performance, and helps control costs by scaling resources efficiently.

It's important to regularly review and optimize your automation scripts, policies, and configurations to align them with changing business needs and evolving workload patterns. Monitor resource utilization and performance metrics to fine-tune auto-scaling rules and ensure optimal resource allocation.

Optimize Data Transfer and Bandwidth Usage

Optimizing data transfer and bandwidth usage is crucial for reducing cloud operating costs.

Analyze your data flows and minimize unnecessary data transfer between cloud services and different regions. When designing your architecture, consider the proximity of services and data to minimize cross-region data transfer. Opt for services and resources located in the same region whenever possible to reduce latency and data transfer costs. Additionally, use efficient data transfer protocols and optimize data payloads to minimize bandwidth usage.

Employ content delivery networks (CDNs) to cache and distribute content closer to your end users. CDNs have a network of edge servers distributed across various locations, enabling faster content delivery by reducing the distance data needs to travel. By caching content at edge locations, you can minimize data transfer from your origin servers to end users, reducing bandwidth costs and improving user experience.

Implement data compression and caching techniques to optimize bandwidth usage. Compressing data before transferring it between services or to end users reduces the amount of data transmitted, resulting in lower bandwidth costs. Additionally, leverage caching mechanisms to store frequently accessed data closer to users or within your infrastructure, reducing the need for repeated data transfers. Caching helps improve performance and reduces bandwidth usage, particularly for static or semi-static content.
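The effect of compression is easy to measure before you rely on it. This small example uses Python's standard `gzip` module on a made-up JSON payload; the record contents are invented, and the actual ratio you get depends heavily on how repetitive your data is.

```python
import gzip
import json

def compressed_size(payload: bytes) -> int:
    """Size in bytes of the payload after gzip compression."""
    return len(gzip.compress(payload))

# Hypothetical API response: repetitive JSON compresses well.
records = [{"id": i, "status": "ok", "region": "eu-west-1"} for i in range(1000)]
raw = json.dumps(records).encode("utf-8")

print(f"raw: {len(raw)} bytes, gzip: {compressed_size(raw)} bytes")
```

Already-compressed formats such as JPEG images or video gain little from a second compression pass, so it is usually applied to text-based payloads like JSON, HTML, and logs.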

Evaluate Reserved Instances and Savings Plans

It is important to evaluate and leverage Reserved Instances (RIs) and Savings Plans provided by cloud service providers.

Analyze your historical usage patterns and identify workloads or services with consistent, predictable usage over an extended period. These workloads are ideal candidates for long-term commitments. By understanding your long-term usage requirements, you can determine the appropriate level of reservation coverage needed to optimize costs.
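One way to turn historical usage into a commitment size is to look for the baseline that is almost always in use. The sketch below picks a low percentile of hourly instance counts and reports how much of total usage that commitment would cover. The percentile choice and the sample data are assumptions for illustration.

```python
def commitment_baseline(hourly_usage, percentile=10):
    """Usage level that is met or exceeded in most hours (a low percentile)."""
    ordered = sorted(hourly_usage)
    index = min(int(len(ordered) * percentile / 100), len(ordered) - 1)
    return ordered[index]

def coverage(hourly_usage, committed):
    """Share of total usage that the committed capacity would cover."""
    covered = sum(min(used, committed) for used in hourly_usage)
    return covered / sum(hourly_usage)

# Hypothetical instance counts per hour: a steady base with a daily peak.
usage = [4, 5, 4, 6, 10, 12, 4, 5, 4, 4]
base = commitment_baseline(usage)
print(base, f"{coverage(usage, base):.0%}")
```

Committing to the baseline keeps the reservation fully utilized, while the peaks above it remain on on-demand or spot capacity.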

Reserved Instances (RIs) and Savings Plans are cost-saving options offered by cloud providers. RIs allow you to reserve instances for a specified term, typically one or three years, at a significantly discounted rate compared to on-demand pricing. Savings Plans instead commit you to a specific dollar amount of usage per hour and apply the discount flexibly, for example across different instance types within the same family. Evaluate your usage patterns and purchase RIs or Savings Plans accordingly to benefit from the cost savings they offer.

Cloud usage and requirements may change over time, so it is crucial to regularly review your reserved instances and savings plans. Assess if the existing reservations still align with your workload demands and make adjustments as needed. This may involve modifying the reservation terms, resizing or exchanging instances, or reallocating savings plans to different services or instance families. By optimizing your reservations based on evolving needs, you can ensure that you maximize cost savings and minimize unused or underutilized resources.

Continuously Monitor and Optimize

Monitor your cloud usage and costs regularly to identify opportunities for cloud cost optimization. Analyze resource utilization, identify underutilized or idle resources, and make necessary adjustments such as rightsizing instances, eliminating unused services, or reconfiguring storage allocations. Continuously assess your workload demands and adjust resource allocation accordingly to ensure optimal usage and cost efficiency.

Cloud service providers frequently introduce new cost optimization features, tools, and best practices. Stay informed about these updates and enhancements to leverage them effectively. Subscribe to newsletters, participate in webinars, or engage with cloud provider communities to stay up to date with the latest cost optimization strategies. By taking advantage of new features, you can further optimize your cloud costs and take advantage of emerging cost-saving opportunities.

Promote a culture of cost consciousness and cloud cost optimization across your organization. Educate and train your teams on cost optimization strategies, best practices, and tools. Encourage employees to be mindful of resource usage, waste reduction, and cost-saving measures. Establish clear cost management policies and guidelines, and regularly communicate cost-saving success stories to motivate further optimization efforts.

Real-world Examples of Cloud Operating Costs Reduction Strategies

AWS Cost Optimization and CI/CD Automation for Entertainment Software Platform

This case study showcases how Gart helped an entertainment software platform optimize their cloud operating costs on AWS while enhancing their Continuous Integration/Continuous Deployment (CI/CD) processes.

The entertainment software platform was facing challenges with escalating cloud costs due to inefficient resource allocation and manual deployment processes. Gart stepped in to identify cost optimization opportunities and implement effective strategies.

Through their expertise in AWS cost optimization and CI/CD automation, Gart successfully helped the entertainment software platform optimize their cloud operating costs, reduce manual efforts, and improve deployment efficiency.

Optimizing Costs and Operations for Cloud-Based SaaS E-Commerce Platform

This case study showcases how Gart helped a cloud-based SaaS e-commerce platform optimize their cloud operating costs and streamline their operations.

The e-commerce platform was facing challenges with rising cloud costs and operational inefficiencies. Gart began by conducting a comprehensive assessment of the platform's cloud environment, including resource utilization, workload patterns, and cost drivers. Based on this analysis, we devised a cost optimization strategy that focused on rightsizing resources, leveraging reserved instances, and implementing resource scheduling based on demand.

By rightsizing instances to match the actual workload requirements and utilizing reserved instances to take advantage of cost savings, Gart helped the e-commerce platform significantly reduce their cloud operating costs.

Furthermore, we implemented resource scheduling based on demand, ensuring that resources were only active when needed, leading to further cost savings. We also optimized storage costs by implementing data lifecycle management techniques and leveraging cost-effective storage options.

In addition to cost optimization, Gart worked on streamlining the platform's operations. We automated infrastructure provisioning and deployment processes using infrastructure-as-code (IaC) tools like Terraform, improving efficiency and reducing manual efforts.

Azure Cost Optimization for a Software Development Company

This case study highlights how Gart helped a software development company optimize their cloud operating costs on the Azure platform.

The software development company was experiencing challenges with high cloud costs and a lack of visibility into cost drivers. Gart intervened to analyze their Azure infrastructure and identify opportunities for cost optimization.

We began by conducting a thorough assessment of the company's Azure environment, examining resource utilization, workload patterns, and cost allocation. Based on this analysis, we developed a cost optimization strategy tailored to the company's specific needs.

The strategy involved rightsizing Azure resources to match the actual workload requirements, identifying and eliminating underutilized resources, and implementing reserved instances for long-term cost savings. Gart also recommended and implemented Azure cost management tools and features to provide better cost visibility and tracking.

Additionally, we worked with the software development company to implement infrastructure-as-code (IaC) practices using tools like Azure DevOps and Azure Resource Manager templates. This allowed for streamlined resource provisioning and reduced manual efforts, further optimizing costs.

Conclusion: Cloud Cost Optimization

By taking a proactive approach to cloud cost optimization, businesses can not only reduce their expenses but also enhance their overall cloud operations, improve scalability, and drive innovation. With careful planning, monitoring, and optimization, businesses can achieve a cost-effective and efficient cloud infrastructure that aligns with their specific needs and budgetary goals.


Cloud adoption is a crucial consideration for many enterprises. With the need to migrate from on-premises infrastructure to the cloud, businesses seek effective frameworks to streamline this transition. One such framework gaining traction is the Terraform Framework.


This article delves into the details of the Terraform Framework and its significance, particularly for enterprise-level cloud adoption projects. We will explore the background behind its adoption, Microsoft's Cloud Adoption Framework, the concept of landing zones, and the four levels of the Terraform Framework.

https://youtu.be/vzCO-h4a9h4

Background and Adoption Strategy

Many large enterprises face the challenge of migrating their infrastructure from on-premises environments to the cloud. In response to this, Microsoft developed the Cloud Adoption Framework (CAF) as a strategic guide for customers to plan, adopt, and implement cloud services effectively.

Let's dive deeper into the components and benefits of the Terraform Framework within the Cloud Adoption Framework.

Understanding the Azure Cloud Adoption Framework (CAF)

The Microsoft Cloud Adoption Framework for Azure (CAF) is a comprehensive framework that assists customers in defining their cloud strategy, planning the adoption process, and continuously implementing and managing cloud services. It covers various aspects of cloud adoption, from migration strategies to application and service management in the cloud. To gain a better understanding of this framework, it is essential to explore its core components.

Landing Zones

A fundamental component of the CAF is the concept of landing zones. A landing zone represents a scaled and secure Azure environment, typically designed for multiple subscriptions. It acts as the building block for the overall infrastructure landscape, ensuring proper connectivity and security between different application components and even on-premises systems. Landing zones consist of several elements, including security measures, governance policies, management and monitoring services, and application-specific services within a subscription.

CAF and Infrastructure Organization

The Microsoft documentation on CAF outlines different approaches to cloud adoption based on the size and complexity of an organization. Small organizations utilizing a single subscription in Azure will have a different adoption approach compared to large enterprises with numerous services and subscriptions. For enterprise-level deployments, an organized infrastructure landscape is crucial. This includes creating management groups and subscription organization, each serving specific governance and security requirements. Additionally, specialized subscriptions, such as identity subscriptions, management subscriptions, and connectivity subscriptions, are part of the overall landing zone architecture.

Discover the power of CAF Terraform, a revolutionary framework that takes your infrastructure management to the next level. Let's dive in!

The Four Components of the Terraform Framework

The Terraform Framework, an open-source project developed by Microsoft architects and engineers, simplifies the deployment of landing zones within Azure. It consists of four main components: rover, modules, landing zones, and launchpad.

a. Rover:

The rover is a Docker container that encapsulates all the necessary tools for infrastructure deployment. It includes Terraform itself and additional scripts, facilitating a seamless transition to CI/CD pipelines across different platforms. By utilizing the rover, teams can standardize deployments and avoid compatibility issues caused by different Terraform versions on individual machines.

b. Modules:

The modules are Cloud Adoption Framework templates, hosted in the Terraform registry or GitHub repositories. These templates cover a wide range of Azure resources, providing a standardized approach for deploying infrastructure components. Although they may not cover every single resource available in Azure, they offer a strong foundation for most common resources and are continuously updated and supported by the community.

c. Landing Zones:

Landing zones represent compositions of multiple resources, services, or blueprints within the context of the Terraform Framework. They enable the creation of complex environments by dividing them into manageable subparts or services. By modularizing landing zones, organizations can efficiently deploy and manage infrastructure based on their specific requirements. The Terraform state file generated from the landing zone provides valuable information for subsequent deployments and configurations.

d. Launchpad:

The launchpad serves as the starting point for the Terraform Framework. It comprises scripts and Terraform configurations responsible for creating the foundational components required for all other levels. By deploying the launchpad, organizations establish the storage accounts, key vaults, and permissions necessary for storing and managing Terraform state files for higher-level deployments.

Understanding the Communication between Levels

To ensure efficient management and organization, the Terraform Framework promotes a layered approach, divided into four levels:

Level Zero: This level represents the launchpad and focuses on establishing the foundational infrastructure required for subsequent levels. It involves creating storage accounts, setting up subscriptions, and permissions for managing state files.

Level One: Level one primarily deals with security and compliance aspects. It encompasses policies, access control, and governance implementation across subscriptions. The level one pipeline reads outputs from level zero but has read-only access to the state files.

Level Two: Level two revolves around network infrastructure and shared services. It includes creating hub networks, configuring DNS, implementing firewalls, and enabling shared services such as monitoring and backup solutions. Level two interacts with level one and level zero, retrieving information from their state files.

Level Three and Beyond: From level three onwards, the focus shifts to application-specific deployments. Development teams responsible for application infrastructure, such as Kubernetes clusters, virtual machines, or databases, engage with levels three and beyond. These levels have access to state files from the previous levels, enabling seamless integration and deployment of application-specific resources.
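The access pattern described above can be summarized in a few lines: a pipeline writes only to its own level's state and reads lower levels' state without modifying it. The function below is merely a sketch of that rule as this article describes it, not the framework's actual permission model, which is enforced through Azure role assignments on the state storage.

```python
def allowed_access(pipeline_level, state_level):
    """Access a pipeline at one level has to another level's state file."""
    if state_level == pipeline_level:
        return "read-write"   # a level manages its own state
    if state_level < pipeline_level:
        return "read-only"    # lower levels are inputs, never modified
    return "none"             # higher levels are out of reach

for pipeline, state in [(1, 0), (2, 2), (3, 1), (1, 3)]:
    print(f"level {pipeline} pipeline -> level {state} state: "
          f"{allowed_access(pipeline, state)}")
```

Keeping lower-level state read-only means an application team at level three cannot accidentally alter the networking or security foundations it builds on.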

Simplifying Infrastructure Deployments

In order to create new virtual machines for specific applications, we can leverage the power of Terraform and modify the configuration inside the Terraform code. By doing so, we can trigger a pipeline that resembles regular Terraform work. This approach allows us to have more control over the deployment and configuration of virtual machines.

Streamlining Service Composition and Environment Delivery

When discussing service composition and delivering a complete environment, this layered approach in Terraform can be quite beneficial. We can utilize landing zones or blueprint models at different levels. These models have input variables and produce output variables that are saved into the Terraform state file. Another landing zone or level can access these output variables, use them within its own logic, compose them with input variables, and produce its own output variables.
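The flow of outputs from one level into the inputs of the next can be modeled in plain Python. In this sketch each landing zone is a function that takes its own variables plus the outputs of lower levels and returns new outputs. All names and values here are hypothetical, and the dictionary stands in for the Terraform state files that the real framework reads.

```python
def level0_launchpad(variables, remote_state):
    # Foundation: where state for higher levels is stored.
    return {"state_account": f"{variables['prefix']}-tfstate"}

def level2_networking(variables, remote_state):
    # Shared hub network, named from this level's own input variables.
    return {"hub_vnet": f"{variables['prefix']}-hub-{variables['region']}"}

def level3_application(variables, remote_state):
    # Composes a lower level's output with its own input variables.
    hub = remote_state["level2"]["hub_vnet"]
    return {"vm_subnet": f"{hub}/{variables['app']}-subnet"}

def deploy(levels, variables):
    """Run landing zones in order; each one sees a copy of earlier outputs."""
    state = {}
    for name, landing_zone in levels:
        state[name] = landing_zone(variables, dict(state))
    return state

state = deploy(
    [("level0", level0_launchpad),
     ("level2", level2_networking),
     ("level3", level3_application)],
    {"prefix": "demo", "region": "westeurope", "app": "shop"},
)
print(state["level3"]["vm_subnet"])
```

The application level never needs to know how the hub network was built; it only consumes the published output, which is what lets separate teams own separate levels.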

Organizing Teams and Repositories

This layered approach, facilitated by Terraform, helps to organize the relationship between different repositories or teams within an organization. Developers or DevOps professionals responsible for creating landing zones can work locally with the rover container in VS Code. They write Terraform code, compose and utilize modules, and create landing zone logic.

Separation of Logic and Configuration

The logic and configuration in the Terraform code are split into separate files, similar to regular Terraform practices. The logic is stored in .tf files, while the configuration is stored in .tfvars files, which can be organized by environment. This separation allows for better management and maintainability.

Empowering Application Teams

Within an organization, different teams can be responsible for different aspects of the infrastructure. An experienced Azure team can define the organization's standards and write the landing zone logic using Terraform. They can provide examples of configuration files that application teams can use. By offloading the configuration files to the application teams, they can easily create infrastructure for their applications without directly involving the operations team.

Standardization and Unification

This approach allows for the standardization and unification of infrastructure within the organization. With the use of modules in Terraform, teams don't have to start from scratch but can reuse existing code and configurations, creating a consistent and streamlined infrastructure landscape.

Challenges and Considerations

Working with Terraform and the Caf-terraform framework involves some complexity. For example, the Rover tool cannot work with managed identities, so service principals have to be managed alongside containers and managed identities. The modules may also contain bugs that need to be addressed, although the open-source nature of the framework allows for contributions and improvements. Understanding the framework and its intricacies can take time because the documentation is spread across multiple repositories and components.

Key components and features of CAF Terraform:

| Component | Description |
| --- | --- |
| Cloud Adoption Framework (CAF) | Microsoft's framework that provides guidance and best practices for organizations adopting Azure cloud services. |
| Terraform | Open-source infrastructure-as-code tool used for provisioning and managing cloud resources. |
| Azure Landing Zones | Pre-configured environments in Azure that provide a foundation for deploying workloads securely and consistently. |
| Infrastructure as Code (IaC) | Approach to defining and managing infrastructure resources using declarative code. |
| Standardized Deployments | Ensures consistent configurations and deployments across environments, reducing inconsistencies and human errors. |
| Modularity | Offers a modular architecture allowing customization and extension of the framework based on organizational requirements. |
| Customizability | Enables organizations to adapt and tailor CAF Terraform to their specific needs, incorporating existing processes, policies, and compliance standards. |
| Security and Governance | Embeds security controls, network configurations, identity management, and compliance requirements into infrastructure code to enforce best practices and ensure secure deployments. |
| Ongoing Management | Simplifies ongoing management, updates, and scaling of Azure landing zones, enabling organizations to easily make changes to configurations and manage the lifecycle of resources. |
| Collaboration and Agility | Facilitates collaboration among teams through infrastructure-as-code practices, promoting agility, version control, and rapid deployments. |
| Documentation and Community | Comprehensive documentation and resources provided by Microsoft Azure, along with a vibrant community offering tutorials, examples, and support for leveraging CAF Terraform effectively. |

This table provides an overview of the key components and features of CAF Terraform.

Conclusion: Azure Cloud Adoption Framework

The Terraform Framework within the Cloud Adoption Framework (CAF) offers enterprises a powerful toolset for cloud adoption and migration projects. By leveraging the modular structure of landing zones and adhering to the layered approach, organizations can effectively manage infrastructure deployments in Azure. The Terraform Framework's components, including Rover, modules, landing zones, and launchpad, contribute to standardization, automation, and collaboration, leading to successful cloud adoption and improved operational efficiency.

As organizations embrace the cloud, the Caf-terraform framework provides a layered approach to managing infrastructure and deployments. By separating logic and configuration and leveraging modules, it allows for standardized and unified infrastructure across teams and repositories. This framework simplifies and optimizes the transition from on-premises to the cloud, enabling enterprises to harness the full potential of Azure's capabilities.

Empower your team with DevOps excellence! Streamline workflows, boost productivity, and fortify security. Let's shape the future of your software development together – inquire about our DevOps Consulting Services.

By treating infrastructure as software code, IaC empowers teams to leverage the benefits of version control, automation, and repeatability in their cloud deployments.

This article explores the key concepts and benefits of IaC, shedding light on popular tools such as Terraform, Ansible, SaltStack, and Google Cloud Deployment Manager. We'll delve into their features, strengths, and use cases, providing insights into how they enable developers and operations teams to streamline their infrastructure management processes.

IaC Tools Comparison Table

| IaC Tool | Description | Supported Cloud Providers |
| --- | --- | --- |
| Terraform | Open-source tool for infrastructure provisioning | AWS, Azure, GCP, and more |
| Ansible | Configuration management and automation platform | AWS, Azure, GCP, and more |
| SaltStack | High-speed automation and orchestration framework | AWS, Azure, GCP, and more |
| Puppet | Declarative language-based configuration management | AWS, Azure, GCP, and more |
| Chef | Infrastructure automation framework | AWS, Azure, GCP, and more |
| CloudFormation | AWS-specific IaC tool for provisioning AWS resources | Amazon Web Services (AWS) |
| Google Cloud Deployment Manager | Infrastructure management tool for Google Cloud Platform | Google Cloud Platform (GCP) |
| Azure Resource Manager | Azure-native tool for deploying and managing resources | Microsoft Azure |
| OpenStack Heat | Orchestration engine for managing resources in OpenStack | OpenStack |

Infrastructure as Code Tools Table

Exploring the Landscape of IaC Tools

The IaC paradigm is widely embraced in modern software development, offering a range of tools for deployment, configuration management, virtualization, and orchestration. Prominent containerization and orchestration tools like Docker and Kubernetes employ YAML to express the desired end state. HashiCorp Packer is another tool that leverages JSON templates and variables for creating system snapshots.

The most popular configuration management tools, namely Ansible, Chef, and Puppet, adopt the IaC approach to define the desired state of the servers under their management.

Ansible functions by bootstrapping servers and orchestrating them based on predefined playbooks. These playbooks, written in YAML, outline the operations Ansible will execute and the targeted resources it will operate on. These operations can include starting services, installing packages via the system's package manager, or executing custom bash commands.

Both Chef and Puppet operate through central servers that issue instructions for orchestrating managed servers. Agent software needs to be installed on the managed servers. While Chef employs Ruby to describe resources, Puppet has its own declarative language.

Terraform seamlessly integrates with other IaC tools and DevOps systems, excelling in provisioning infrastructure resources rather than software installation and initial server configuration.

Unlike configuration management tools like Ansible and Chef, Terraform is not designed for installing software on target resources or scheduling tasks. Instead, Terraform utilizes providers to interact with supported resources.

Terraform can operate on a single machine without the need for a master or managed servers, unlike some other tools. It does not actively monitor the actual state of resources and automatically reapply configurations. Its primary focus is on orchestration. Typically, the workflow involves provisioning resources with Terraform and using a configuration management tool for further customization if necessary.

For Chef, Terraform historically provided a built-in provisioner that configures the Chef client on the orchestrated remote resources, although it has since been deprecated and removed from recent Terraform releases. It allowed all orchestrated servers to be added automatically to the master server and customized further using Chef cookbooks (Chef's infrastructure declarations).

Optimize your infrastructure management with our DevOps expertise. Harness the power of IaC tools for streamlined provisioning, configuration, and orchestration. Scale efficiently and achieve seamless deployments. Contact us now.

Popular Infrastructure as Code Tools

Terraform

Terraform, introduced by HashiCorp in 2014, is an open-source Infrastructure as Code (IaC) solution. It operates based on a declarative approach to managing infrastructure, allowing you to define the desired end state of your infrastructure in a configuration file. Terraform then works to bring the infrastructure to that desired state. This configuration is applied using the PUSH method. Written in the Go programming language, Terraform incorporates its own language known as HashiCorp Configuration Language (HCL), which is used for writing configuration files that automate infrastructure management tasks.

Download: https://github.com/hashicorp/terraform

Terraform operates by analyzing the infrastructure code provided and constructing a graph that represents the resources and their relationships. This graph is then compared with the cached state of resources in the cloud. Based on this comparison, Terraform generates an execution plan that outlines the necessary changes to be applied to the cloud in order to achieve the desired state, including the order in which these changes should be made.
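To make the graph-and-plan idea more concrete, here is a minimal Python sketch of the general technique: order resources by their dependencies, compare each one with a cached state, and emit only the steps that are needed. It is a simplified model under stated assumptions, not Terraform's actual algorithm; the resource names, settings, and the `make_plan` helper are all hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Tuple

# Desired resources from code: name -> (settings, dependencies). Names are made up.
desired: Dict[str, Tuple[dict, List[str]]] = {
    "network": ({"cidr": "10.0.0.0/16"}, []),
    "subnet": ({"cidr": "10.0.1.0/24"}, ["network"]),
    "vm": ({"size": "medium"}, ["subnet"]),
}

# Cached state: what was recorded after the previous run.
cached_state: Dict[str, dict] = {
    "network": {"cidr": "10.0.0.0/16"},
    "vm": {"size": "small"},
}


def make_plan(desired: Dict[str, Tuple[dict, List[str]]],
              cached_state: Dict[str, dict]) -> List[Tuple[str, str]]:
    """Return ordered (action, resource) steps needed to reach the desired state."""
    graph = {name: deps for name, (_, deps) in desired.items()}
    order = TopologicalSorter(graph).static_order()  # dependencies come first
    plan: List[Tuple[str, str]] = []
    for name in order:
        settings = desired[name][0]
        if name not in cached_state:
            plan.append(("create", name))
        elif cached_state[name] != settings:
            plan.append(("update", name))
    # Anything recorded in state but no longer in code is scheduled for removal.
    plan += [("delete", name) for name in cached_state if name not in desired]
    return plan


print(make_plan(desired, cached_state))
```

In this toy run the network is already up to date, so the plan only creates the missing subnet and then updates the virtual machine that depends on it.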

Within Terraform, there are two primary components: providers and provisioners. Providers are responsible for interacting with cloud service providers, handling the creation, management, and deletion of resources. On the other hand, provisioners are used to execute specific actions on the remote resources created or on the local machine where the code is being processed.

Terraform offers support for managing fundamental components of various cloud providers, such as compute instances, load balancers, storage, and DNS records. Additionally, Terraform's extensibility allows for the incorporation of new providers and provisioners.

In the realm of Infrastructure as Code (IaC), Terraform's primary role is to ensure that the state of resources in the cloud aligns with the state expressed in the provided code. However, it's important to note that Terraform does not actively track deployed resources or monitor the ongoing bootstrapping of prepared compute instances. The subsequent section will delve into the distinctions between Terraform and other tools, as well as how they complement each other within the workflow.

Real-World Examples of Terraform Usage

Terraform has gained immense popularity across various industries due to its versatility and user-friendly nature. Here are a few real-world examples showcasing how Terraform is being utilized:

CI/CD Pipelines and Infrastructure for E-Health Platform

For our client, a development company specializing in Electronic Medical Records Software (EMRS) for government-based E-Health platforms and CRM systems in medical facilities, we used Terraform to create the infrastructure on VMware ESXi. This allowed us to harness the full capabilities of the local cloud provider, ensuring efficient and scalable deployments.

Implementation of Nomad Cluster for Massively Parallel Computing

Our client, S-Cube, is a software development company specializing in creating a product based on a waveform inversion algorithm for building Earth models. They sought to enhance their infrastructure by separating the software from the underlying infrastructure, allowing them to focus solely on application development without the burden of infrastructure management.

To assist S-Cube in achieving their goals, Gart Solutions stepped in and leveraged the latest cloud development techniques and technologies, including Terraform. By utilizing Terraform, Gart Solutions helped restructure the architecture of S-Cube's SaaS platform, making it more economically efficient and scalable.

The Gart Solutions team worked closely with S-Cube to develop a new approach that takes infrastructure management to the next level. By adopting Terraform, they were able to define their infrastructure as code, enabling easy provisioning and management of resources across cloud and on-premises environments. This approach offered S-Cube the flexibility to run their workloads in both containerized and non-containerized environments, adapting to their specific requirements.

Streamlining Presale Processes with ChatOps Automation

Our client, Beyond Risk, is a dynamic technology company specializing in enterprise risk management solutions. They faced several challenges related to environmental management, particularly in managing the existing environment architecture and infrastructure code conditions, which required significant effort.

To address these challenges, Gart implemented ChatOps Automation to streamline the presale processes. The implementation used the Slack API to create an interactive flow, AWS Lambda to implement the business logic, and GitHub Actions with Terraform Cloud for infrastructure automation.

One significant improvement was the addition of a Notification step, which helped us track the success or failure of Terraform operations. This allowed us to stay informed about the status of infrastructure changes and take appropriate actions accordingly.

Unlock the full potential of your infrastructure with our DevOps expertise. Maximize scalability and achieve flawless deployments. Drop us a line right now!

AWS CloudFormation

AWS CloudFormation is a powerful Infrastructure as Code (IaC) tool provided by Amazon Web Services (AWS). It simplifies the provisioning and management of AWS resources through declarative CloudFormation templates. The sections below cover its key features and advantages, followed by some real-world case studies of its adoption.

Key Features and Advantages:

Infrastructure as Code: CloudFormation enables you to define and manage your infrastructure resources using templates written in JSON or YAML. This approach ensures consistent, repeatable, and version-controlled deployments of your infrastructure.

Automation and Orchestration: CloudFormation automates the provisioning and configuration of resources, ensuring that they are created, updated, or deleted in a controlled and predictable manner. It handles resource dependencies, allowing for the orchestration of complex infrastructure setups.

Infrastructure Consistency: With CloudFormation, you can define the desired state of your infrastructure and deploy it consistently across different environments. This reduces configuration drift and ensures uniformity in your infrastructure deployments.

Change Management: CloudFormation utilizes stacks to manage infrastructure changes. Stacks enable you to track and control updates to your infrastructure, ensuring that changes are applied consistently and minimizing the risk of errors.

Scalability and Flexibility: CloudFormation supports a wide range of AWS resource types and features. This allows you to provision and manage compute instances, databases, storage volumes, networking components, and more. It also offers flexibility through custom resources and supports parameterization for dynamic configurations.
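For readers who have not seen a template, the Python sketch below builds a minimal one as a dictionary and prints it as JSON. It uses the standard top-level template sections (`AWSTemplateFormatVersion`, `Parameters`, `Resources`) and the `Ref` intrinsic function; the logical names `BucketNameParam` and `ExampleBucket` are made up for illustration, and the template is not tied to any stack from this article.

```python
import json

# Minimal template with one parameter and one resource; logical names are made up.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Illustrative stack with a single S3 bucket",
    "Parameters": {
        "BucketNameParam": {"Type": "String"},
    },
    "Resources": {
        "ExampleBucket": {
            "Type": "AWS::S3::Bucket",
            # Ref resolves to the parameter value supplied at deployment time.
            "Properties": {"BucketName": {"Ref": "BucketNameParam"}},
        }
    },
}

template_json = json.dumps(template, indent=2)
print(template_json)
```

The parameter is what makes the same template reusable across environments: each stack supplies its own bucket name while the resource definition stays identical.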

Case studies showcasing CloudFormation adoption

Netflix leverages CloudFormation for managing their infrastructure deployments at scale. They use CloudFormation templates to provision resources, define configurations, and enable repeatable deployments across different regions and accounts.

Yelp utilizes CloudFormation to manage their AWS infrastructure. They use CloudFormation templates to provision and configure resources, enabling them to automate and simplify their infrastructure deployments.

Dow Jones, a global news and business information provider, utilizes CloudFormation for managing their AWS resources. They leverage CloudFormation to define and provision their infrastructure, enabling faster and more consistent deployments.

Ansible

Ansible is perhaps the most well-known configuration management system used by DevOps engineers. It is written in Python and uses YAML, a declarative markup language, to describe configurations. It uses the PUSH method to automate software configuration and deployment.

What are the main differences between Ansible and Terraform? Ansible is a versatile automation tool that can be used to solve various tasks, while Terraform is a tool specifically designed for "infrastructure as code" tasks, which means transforming configuration files into functioning infrastructure.

Use cases highlighting Ansible's versatility

Configuration Management: Ansible is commonly used for configuration management, allowing you to define and enforce the desired configurations across multiple servers or network devices. It ensures consistency and simplifies the management of configuration drift.

Application Deployment: Ansible can automate the deployment of applications by orchestrating the installation, configuration, and updates of application components and their dependencies. This enables faster and more reliable application deployments.

Cloud Provisioning: Ansible integrates seamlessly with various cloud providers, enabling the provisioning and management of cloud resources. It allows you to define infrastructure in a cloud-agnostic way, making it easy to deploy and manage infrastructure across different cloud platforms.

Continuous Delivery: Ansible can be integrated into a continuous delivery pipeline to automate the deployment and testing of applications. It allows for efficient and repeatable deployments, reducing manual errors and accelerating the delivery of software updates.

Google Cloud Deployment Manager

Google Cloud Deployment Manager is a robust Infrastructure as Code (IaC) solution offered by Google Cloud Platform (GCP). It empowers users to define and manage their infrastructure resources using Deployment Manager templates, which facilitate automated and consistent provisioning and configuration.

By utilizing YAML or Jinja2-based templates, Deployment Manager enables the definition and configuration of infrastructure resources. These templates specify the desired state of resources, encompassing various GCP services, networks, virtual machines, storage, and more. Users can leverage templates to define properties, establish dependencies, and establish relationships between resources, facilitating the creation of intricate infrastructures.

Deployment Manager seamlessly integrates with a diverse range of GCP services and ecosystems, providing comprehensive resource management capabilities. It supports GCP's native services, including Compute Engine, Cloud Storage, Cloud SQL, Cloud Pub/Sub, among others, enabling users to effectively manage their entire infrastructure.

Puppet

Puppet is a widely adopted configuration management tool that helps automate the management and deployment of infrastructure resources. It provides a declarative language and a flexible framework for defining and enforcing desired system configurations across multiple servers and environments.

Puppet enables efficient and centralized management of infrastructure configurations, making it easier to maintain consistency and enforce desired states across a large number of servers. It automates repetitive tasks, such as software installations, package updates, file management, and service configurations, saving time and reducing manual errors.

Puppet operates using a client-server model, where Puppet agents (client nodes) communicate with a central Puppet server to retrieve configurations and apply them locally. The Puppet server acts as a repository for configurations and distributes them to the agents based on predefined rules.
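The pull-based flow can be modeled in a few lines of Python. This is only a toy sketch of the general client-server pattern, assuming a hypothetical `ConfigServer` that stores desired settings per role and an `Agent` that applies whatever differs locally; it does not use Puppet's language or any Puppet API.

```python
from typing import Dict, List


class ConfigServer:
    """Toy stand-in for a central server holding desired configurations per role."""

    def __init__(self, catalogs: Dict[str, Dict[str, str]]) -> None:
        self.catalogs = catalogs

    def catalog_for(self, role: str) -> Dict[str, str]:
        return dict(self.catalogs.get(role, {}))


class Agent:
    """Toy agent that pulls its catalog and applies only what differs locally."""

    def __init__(self, role: str) -> None:
        self.role = role
        self.local_state: Dict[str, str] = {}

    def run(self, server: ConfigServer) -> List[str]:
        changed = []
        for resource, wanted in server.catalog_for(self.role).items():
            if self.local_state.get(resource) != wanted:
                self.local_state[resource] = wanted
                changed.append(resource)
        return changed


server = ConfigServer({"web": {"package:nginx": "installed", "service:nginx": "running"}})
agent = Agent("web")
print(agent.run(server))  # first run applies both resources
print(agent.run(server))  # second run finds nothing to change
```

The second run returning no changes is the property that matters: applying the same configuration repeatedly leaves an already compliant node alone.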

Pulumi

Pulumi is a modern Infrastructure as Code (IaC) tool that enables users to define, deploy, and manage infrastructure resources using familiar programming languages. It combines the concepts of IaC with the power and flexibility of general-purpose programming languages to provide a seamless and intuitive infrastructure management experience.

Pulumi has a growing ecosystem of libraries and plugins, offering additional functionality and integrations with external tools and services. Users can leverage existing libraries and modules from their programming language ecosystems, enhancing the capabilities of their infrastructure code.

There are often situations where it is necessary to deploy an application simultaneously across multiple clouds, combine cloud infrastructure with a managed Kubernetes cluster, or anticipate future service migration. One possible solution for creating a universal configuration is to use Pulumi, which allows for deploying applications to various clouds (GCP, AWS, Azure, AliCloud), Kubernetes, providers (such as Linode and DigitalOcean), virtual infrastructure management systems (OpenStack), and local Docker environments.

Pulumi integrates with popular CI/CD systems and Git repositories, allowing for the creation of infrastructure as code pipelines.

Users can automate the deployment and management of infrastructure resources as part of their overall software delivery process.

SaltStack

SaltStack is a powerful Infrastructure as Code (IaC) tool that automates the management and configuration of infrastructure resources at scale. It provides a comprehensive solution for orchestrating and managing infrastructure through a combination of remote execution, configuration management, and event-driven automation.

SaltStack enables remote execution across a large number of servers, allowing administrators to execute commands, run scripts, and perform tasks on multiple machines simultaneously. It provides a robust configuration management framework, allowing users to define desired states for infrastructure resources and ensure their continuous enforcement.

SaltStack is designed to handle massive infrastructures efficiently, making it suitable for organizations with complex and distributed environments.

The SaltStack solution stands out compared to the others mentioned in this article. Its primary design goal was high speed. To ensure high performance, the architecture is based on the interaction between a Salt-master server and Salt-minion clients over a message bus. An agentless push mode over SSH is also available through Salt-SSH.

The project is developed in Python and is hosted in the repository at https://github.com/saltstack/salt.

The high speed is achieved through asynchronous task execution. The idea is that the Salt Master communicates with Salt Minions using a publish/subscribe model, where the master publishes a task and the minions receive and asynchronously execute it. They interact through a shared bus, where the master sends a single message specifying the criteria that minions must meet, and they start executing the task. The master simply waits for information from all sources, knowing how many minions to expect a response from. To some extent, this operates on a "fire and forget" principle.
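The publish/subscribe flow described above can be sketched with Python's `asyncio`. This is a simplified model under stated assumptions, not Salt's implementation: the minion names, the attribute dictionaries used for targeting, and the `publish` helper are hypothetical, and the command is just a string that is never executed.

```python
import asyncio
from typing import Dict, Optional, Tuple


async def minion(name: str, attributes: Dict[str, str],
                 job: dict) -> Tuple[str, Optional[str]]:
    """Each minion decides for itself whether the published job targets it."""
    target = job["target"]
    if any(attributes.get(key) != value for key, value in target.items()):
        return name, None  # not targeted, so the message is ignored
    await asyncio.sleep(0)  # stand-in for doing the work asynchronously
    return name, f"ran {job['command']}"


async def publish(job: dict, minions: Dict[str, Dict[str, str]]) -> Dict[str, str]:
    """The master publishes one message, then gathers whatever results come back."""
    results = await asyncio.gather(
        *(minion(name, attrs, job) for name, attrs in minions.items())
    )
    return {name: result for name, result in results if result is not None}


minions = {
    "web1": {"os": "Ubuntu", "role": "web"},
    "web2": {"os": "Ubuntu", "role": "web"},
    "db1": {"os": "Debian", "role": "db"},
}
job = {"target": {"role": "web"}, "command": "pkg.upgrade"}
returns = asyncio.run(publish(job, minions))
print(returns)
```

The master sends a single message regardless of how many minions exist; the matching happens on the receiving side, which is what keeps the fan-out cheap.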

In the event of the master going offline, the minion will still complete the assigned work, and upon the master's return, it will receive the results.

The interaction architecture can be quite complex, as illustrated in the vRealize Automation SaltStack Config diagram below.

When comparing SaltStack and Ansible, due to architectural differences, Ansible spends more time processing messages. However, unlike SaltStack's minions, which essentially act as agents, Ansible does not require agents to function. SaltStack is significantly easier to deploy compared to Ansible, which requires a series of configurations to be performed. SaltStack does not require extensive script writing for its operation, whereas Ansible is quite reliant on scripting for interacting with infrastructure.

Additionally, SaltStack can have multiple masters, so if one fails, control is not lost. Ansible, on the other hand, can have a secondary node in case of failure. Finally, SaltStack is backed by VMware, which acquired it in 2020, while Ansible is backed by Red Hat.

SaltStack integrates seamlessly with cloud platforms, virtualization technologies, and infrastructure services.

It provides built-in modules and functions for interacting with popular cloud providers, making it easier to manage and provision resources in cloud environments.

SaltStack offers a highly extensible framework that allows users to create custom modules, states, and plugins to extend its functionality.

It has a vibrant community contributing to a rich ecosystem of Salt modules and extensions.

Chef

Chef is a widely recognized and powerful Infrastructure as Code (IaC) tool that automates the management and configuration of infrastructure resources. It provides a comprehensive framework for defining, deploying, and managing infrastructure across various platforms and environments.

Chef allows users to define infrastructure configurations as code, making it easier to manage and maintain consistent configurations across multiple servers and environments.

It uses the Chef DSL, a Ruby-based domain-specific language, to declare the desired state of resources and systems.

Chef Solo

Chef also offers a standalone mode called Chef Solo, which does not require a central Chef server.

Chef Solo allows for the local execution of cookbooks and recipes on individual systems without the need for a server-client setup.

Benefits of Infrastructure as Code Tools

Infrastructure as Code (IaC) tools offer numerous benefits that contribute to efficient, scalable, and reliable infrastructure management.

IaC tools automate the provisioning, configuration, and management of infrastructure resources. This automation eliminates manual processes, reducing the potential for human error and increasing efficiency.

With IaC, infrastructure configurations are defined and deployed consistently across all environments. This ensures that infrastructure resources adhere to desired states and defined standards, leading to more reliable and predictable deployments.

IaC tools enable easy scalability by providing the ability to define infrastructure resources as code. Scaling up or down becomes a matter of modifying the code or configuration, allowing for rapid and flexible infrastructure adjustments to meet changing demands.

Infrastructure code can be stored and version-controlled using tools like Git. This enables collaboration among team members, tracking of changes, and easy rollbacks to previous configurations if needed.

Infrastructure code can be structured into reusable components, modules, or templates. These components can be shared across projects and environments, promoting code reusability, reducing duplication, and speeding up infrastructure deployment.

Infrastructure as Code tools automate the provisioning and deployment processes, significantly reducing the time required to set up and configure infrastructure resources. This leads to faster application deployment and delivery cycles.

Infrastructure as Code tools provide an audit trail of infrastructure changes, making it easier to track and document modifications. They also assist in achieving compliance by enforcing predefined policies and standards in infrastructure configurations.

Infrastructure code can be used to recreate and recover infrastructure quickly in the event of a disaster. By treating infrastructure as code, organizations can easily reproduce entire environments, reducing downtime and improving disaster recovery capabilities.

IaC tools abstract infrastructure configurations from specific cloud providers, allowing for portability across multiple cloud platforms. This flexibility enables organizations to leverage different cloud services based on specific requirements or to migrate between cloud providers easily.

Infrastructure as Code tools provide visibility into infrastructure resources and their associated costs. This visibility enables organizations to optimize resource allocation, identify unused or underutilized resources, and make informed decisions for cost optimization.

Considerations for Choosing an IaC Tool

When selecting an Infrastructure as Code (IaC) tool, it's essential to consider various factors to ensure it aligns with your specific requirements and goals.

Compatibility with Infrastructure and Environments

Determine if the IaC tool supports the infrastructure platforms and technologies you use, such as public clouds (AWS, Azure, GCP), private clouds, containers, or on-premises environments.

Check if the tool integrates well with existing infrastructure components and services you rely on, such as databases, load balancers, or networking configurations.

Supported Programming Languages

Consider the programming languages supported by the IaC tool. Choose a tool that offers support for languages that your team is familiar with and comfortable using.

Ensure that the tool's supported languages align with your organization's coding standards and preferences.

Learning Curve and Ease of Use

Evaluate the learning curve associated with the IaC tool. Consider the complexity of its syntax, the availability of documentation, tutorials, and community support.

Determine if the tool provides an intuitive and user-friendly interface or a command-line interface (CLI) that suits your team's preferences and skill sets.

Declarative or Imperative Approach

Decide whether you prefer a declarative or imperative approach to infrastructure management.

Declarative tools focus on defining the desired state of infrastructure resources, while imperative Infrastructure as Code tools allow more procedural control over infrastructure changes.

Consider which approach aligns better with your team's mindset and infrastructure management style.
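The difference between the two approaches shows up most clearly when the same operation is run twice. The Python sketch below is a toy comparison with hypothetical function and server names: the imperative function performs a step, while the declarative one is given the end result and works out what to add or remove.

```python
from typing import Set


def imperative_scale_up(servers: Set[str]) -> None:
    """Imperative: perform one step; running it twice adds two servers."""
    servers.add(f"web-{len(servers) + 1}")


def declarative_reconcile(servers: Set[str], desired_count: int) -> None:
    """Declarative: state the end result; the function works out the steps."""
    wanted = {f"web-{i}" for i in range(1, desired_count + 1)}
    servers.difference_update(servers - wanted)  # remove extras
    servers.update(wanted)                       # add what is missing


imperative_fleet = {"web-1"}
imperative_scale_up(imperative_fleet)
imperative_scale_up(imperative_fleet)
print(sorted(imperative_fleet))

declarative_fleet = {"web-1"}
declarative_reconcile(declarative_fleet, 2)
declarative_reconcile(declarative_fleet, 2)
print(sorted(declarative_fleet))
```

Repeating the declarative call leaves the fleet at two servers, whereas repeating the imperative call keeps growing it, which is why declarative tools are generally easier to re-run safely.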

Extensibility and Customization

Evaluate the extensibility and customization options provided by the IaC tool. Check if it allows the creation of custom modules, plugins, or extensions to meet specific requirements.

Consider the availability of a vibrant community and ecosystem around the tool, providing additional resources, libraries, and community-contributed content.

Collaboration and Version Control

Assess the tool's collaboration features and support for version control systems like Git.

Determine if it allows multiple team members to work simultaneously on infrastructure code, provides conflict resolution mechanisms, and supports code review processes.

Security and Compliance

Examine the tool's security features and its ability to meet security and compliance requirements.

Consider features like access controls, encryption, secrets management, and compliance auditing capabilities to ensure the tool aligns with your organization's security standards.

Community and Support

Evaluate the size and activity of the tool's community, as it can greatly impact the availability of resources, forums, and support.

Consider factors like the frequency of updates, bug fixes, and the responsiveness of the tool's maintainers to address issues or feature requests.

Cost and Licensing

Assess the licensing model of the IaC tool. Some Infrastructure as Code tools have open-source versions with community support, while others offer enterprise editions with additional features and support.

Consider the total cost of ownership, including licensing fees, training costs, infrastructure requirements, and ongoing maintenance.

Roadmap and Future Development

Research the tool's roadmap and future development plans to ensure its continued relevance and compatibility with evolving technologies and industry trends.

By considering these factors, you can select the Infrastructure as Code tool that best fits your organization's needs, infrastructure requirements, team capabilities, and long-term goals.