IT systems hold the data, apps, and networks that keep a business running. If they fail or get hacked, everything can stop.

IT infrastructure security means protecting these systems from attacks and mistakes. It covers hardware, software, networks, and data.

Cyberattacks are growing. They are not rare events but everyday risks. If a company is not ready, it can lose money, face lawsuits, and damage its reputation.

This matters for any business, big or small. Good security builds trust with customers, protects sensitive data, and keeps operations stable.

Key Threats to IT Infrastructure Security

Organizations face a range of evolving cyber threats:

Malware and ransomware: Still among the most common, causing operational shutdowns and costly recovery.

DDoS attacks: Overwhelm systems, disrupt services, and affect customer experience.

Phishing and human error: A recurring weak link, often opening the door to larger breaches.

Exploited vulnerabilities in poorly secured networks and outdated software.

Notably, 70% of IT security experts interviewed in the study identified human error as the primary factor in incidents, underscoring the need for awareness training and stronger organizational security culture.

Malware and Ransomware Attacks

Malware and ransomware attacks present considerable risks to the security of IT infrastructure. Malicious programs like viruses, worms, and Trojan horses can infiltrate systems through diverse vectors such as email attachments, infected websites, or software downloads. Once within the infrastructure, malware can compromise sensitive data, disrupt operations, and even grant unauthorized access to malicious actors. Ransomware, a distinct form of malware, encrypts vital files and extorts a ransom for their decryption, potentially resulting in financial losses and operational disruptions.

Phishing and Social Engineering Attacks

Phishing and social engineering attacks target individuals within an organization, exploiting their trust and manipulating them into divulging sensitive information or performing actions that compromise security. These attacks often come in the form of deceptive emails, messages, or phone calls, impersonating legitimate entities. By tricking employees into sharing passwords, clicking on malicious links, or disclosing confidential data, cybercriminals can gain unauthorized access to the IT infrastructure and carry out further malicious activities.

Insider Threats

Insider threats refer to security risks that arise from within an organization. They can occur due to intentional actions by disgruntled employees or unintentional mistakes made by well-meaning staff members. Insider threats can involve unauthorized data access, theft of sensitive information, sabotage, or even the introduction of malware into the infrastructure. These threats are challenging to detect, as insiders often have legitimate access to critical systems and may exploit their privileges to carry out malicious actions.

Distributed Denial of Service (DDoS) Attacks

DDoS attacks aim to disrupt the availability of IT infrastructure by overwhelming systems with a flood of traffic or requests. Attackers utilize networks of compromised computers, known as botnets, to generate massive amounts of traffic directed at a target infrastructure. This surge in traffic overwhelms the network, rendering it unable to respond to legitimate requests, causing service disruptions and downtime. DDoS attacks can impact businesses financially, tarnish their reputation, and impede normal operations.

Data Breaches and Theft

Data breaches and theft occur when unauthorized individuals gain access to sensitive information held within the IT infrastructure. This includes personally identifiable information (PII), financial records, intellectual property, and trade secrets. Attackers may exploit software vulnerabilities, weak access controls, or inadequate encryption to infiltrate the infrastructure and extract valuable data. The consequences are far-reaching: legal liabilities, financial losses, and harm to the organization's reputation.

Vulnerabilities in Software and Hardware

Software and hardware vulnerabilities introduce weaknesses in the IT infrastructure that can be exploited by attackers. These vulnerabilities can arise from coding errors, misconfigurations, or outdated software and firmware. Attackers actively search for and exploit these weaknesses to gain unauthorized access, execute arbitrary code, or perform other malicious activities. Regular patching, updates, and vulnerability assessments are critical to mitigating these risks and ensuring a secure IT infrastructure.

Strategies for Optimizing IT Infrastructure Security

The study highlights three pillars of a successful IT security strategy: policy, technology, and training.

1. Implementing Security Frameworks

Frameworks like the NIST Cybersecurity Framework and ISO/IEC 27001 help organizations identify, protect, detect, respond to, and recover from threats. They provide a structured roadmap for resilience.

2. Adopting Modern Defense Technologies

Encryption ensures data confidentiality.

Next-generation firewalls block evolving threats.

AI-driven threat detection improves speed and accuracy, with reports showing it can cut incident response time by 50%.

Intrusion detection systems (IDS) add an extra layer of monitoring and defense.

3. Prioritizing Human-Centric Security

Policies and awareness programs are as critical as technical defenses. Regular training reduces human error, phishing susceptibility, and careless data handling.


Real-World Case Study: How Gart Transformed IT Infrastructure Security for a Client

The entertainment software platform SoundCampaign approached Gart with a twofold challenge: optimizing their AWS costs and automating their CI/CD processes. Additionally, they were experiencing conflicts and miscommunication between their development and testing teams, which hindered their productivity and caused inefficiencies within their IT infrastructure.

As a trusted DevOps company, Gart devised a comprehensive solution that addressed both the cost optimization and automation needs, while also improving the client's IT infrastructure security and fostering better collaboration within their teams.

To streamline the client's CI/CD processes, Gart introduced an automated pipeline using modern DevOps tools. We leveraged technologies such as Jenkins, Docker, and Kubernetes to enable seamless code integration, automated testing, and deployment. This eliminated manual errors, reduced deployment time, and enhanced overall efficiency.

Recognizing the importance of IT infrastructure security, Gart implemented robust security measures to minimize risks and improve collaboration within the client's teams. By implementing secure CI/CD pipelines and automated security checks, we ensured a clear and traceable code deployment process. This clarity minimized conflicts between developers and testers, as it became evident who made changes and when. Additionally, we implemented strict access controls, encryption mechanisms, and continuous monitoring to enhance overall security posture.

Are you concerned about the security of your IT infrastructure? Protect your valuable digital assets by partnering with Gart, your trusted IT security provider.

Best Practices for IT Infrastructure Security

Good security is not only about technology. It also needs clear rules, user awareness, and regular checks. Here are the basics:

Access controls and authentication: Use strong passwords, multi-factor authentication, and manage who has access to what. This limits the risk of someone breaking in.

Updates and patches: Keep software and hardware up to date. Fixing known issues quickly reduces the chance of attacks.

Monitoring and auditing: Watch network traffic for anything unusual. Tools like SIEM can help spot problems early and limit damage.

Data encryption: Encrypt sensitive data both when stored and when sent. This keeps information safe if it gets intercepted.

Firewalls and intrusion detection: Firewalls block unwanted traffic. IDS tools alert you when something suspicious happens. Together they protect the network.

Employee training: Most attacks start with human error. Regular training helps staff avoid phishing, scams, and careless mistakes.

Backups and disaster recovery: Back up data on schedule and test recovery plans often. This ensures you can restore critical systems if something goes wrong.
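To make the multi-factor authentication item above more concrete, here is a minimal sketch of how a time-based one-time code (the kind shown by authenticator apps) can be derived and checked. It follows the HOTP/TOTP scheme from RFC 4226 and RFC 6238 using only the Python standard library. The function names and the 30-second step are illustrative assumptions, and a production system would normally rely on a vetted library rather than code like this.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits pick the offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30) -> str:
    """Time-based one-time password (RFC 6238): HOTP over a time counter."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step))


def verify_second_factor(secret: bytes, submitted: str,
                         at: Optional[float] = None) -> bool:
    """Compare the submitted code with the expected one in constant time."""
    return hmac.compare_digest(totp(secret, at), submitted)
```

A login flow would call `verify_second_factor` only after the password check has passed, so that a stolen password alone is not enough to get in.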

Our team of experts specializes in securing networks, servers, cloud environments, and more. Contact us today to fortify your defenses and ensure the resilience of your IT infrastructure.

Network Infrastructure

A strong network is key to protecting business systems. Here are the main steps:

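Secure wireless networks: Use WPA3 encryption, or WPA2 where WPA3 is not supported, change default passwords, and always keep access points updated. Turning off SSID broadcasting and adding MAC filtering can deter casual snooping, but both are easy to bypass, so treat them as extras rather than core defenses.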

Use VPNs: VPNs create an encrypted tunnel for remote access. This keeps data private when employees connect over public networks.

Segment and isolate networks: Split the network into smaller parts based on roles or functions. This limits how far an attacker can move if one system is breached. Each segment should have its own rules and controls.

Monitor and log activity: Watch network traffic for unusual behavior. Keep logs of events to help with investigations and quick response to incidents.
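The segmentation step above can be illustrated with a small default-deny check. This is only a sketch under assumed values: the segment names, address ranges, and the single allowed flow are hypothetical, and in practice such rules live in firewalls or network policy, not in application code.

```python
import ipaddress
from typing import Optional

# Hypothetical segments; the ranges are examples only.
SEGMENTS = {
    "office": ipaddress.ip_network("10.0.10.0/24"),
    "servers": ipaddress.ip_network("10.0.20.0/24"),
    "guest_wifi": ipaddress.ip_network("10.0.30.0/24"),
}

# Explicitly allowed (source segment, destination segment, port) flows.
# Anything not listed here is denied.
ALLOWED_FLOWS = {
    ("office", "servers", 443),
}


def segment_of(address: str) -> Optional[str]:
    """Return the name of the segment containing the address, if any."""
    ip = ipaddress.ip_address(address)
    for name, network in SEGMENTS.items():
        if ip in network:
            return name
    return None


def is_allowed(src: str, dst: str, port: int) -> bool:
    """Default deny: cross-segment traffic needs an explicit rule."""
    src_seg, dst_seg = segment_of(src), segment_of(dst)
    if src_seg is None or dst_seg is None:
        return False
    if src_seg == dst_seg:
        return True
    return (src_seg, dst_seg, port) in ALLOWED_FLOWS
```

With these rules, a compromised guest device cannot reach the server segment at all, which is the "limit how far an attacker can move" effect described above.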

Server Infrastructure

Servers run the core systems of any organization, so they need strong protection. Key practices include:

Harden server settings: Turn off unused services and ports, limit permissions, and set firewalls to only allow needed traffic. This reduces the attack surface.

Strong authentication and access control: Use unique, complex passwords and multi-factor authentication. Apply role-based access control (RBAC) so only the right people can reach sensitive resources.

Keep servers updated: Apply patches and firmware updates as soon as vendors release them. Staying current helps block known exploits and emerging threats.

Monitor logs and activity: Collect and review server logs to spot unusual activity or failed access attempts. Real-time monitoring helps catch and respond to threats faster.
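As a rough illustration of the log review described above, the sketch below counts failed SSH password attempts per source address and flags sources that reach a threshold. It assumes OpenSSH-style log lines, and the threshold of 3 is an arbitrary example value. Real deployments usually hand this job to a SIEM or a tool built for it.

```python
import re
from collections import Counter
from typing import Dict, Iterable

# Matches OpenSSH-style lines such as:
#   "Failed password for invalid user admin from 203.0.113.7 port 4242 ssh2"
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?(\S+) from (\S+)"
)


def suspicious_sources(log_lines: Iterable[str],
                       threshold: int = 3) -> Dict[str, int]:
    """Return source addresses with at least `threshold` failed logins."""
    failures: Counter = Counter()
    for line in log_lines:
        match = FAILED_LOGIN.search(line)
        if match:
            failures[match.group(2)] += 1
    return {ip: count for ip, count in failures.items() if count >= threshold}


SAMPLE_LOG = [
    "sshd[101]: Failed password for root from 203.0.113.7 port 4242 ssh2",
    "sshd[102]: Failed password for invalid user admin from 203.0.113.7 port 4243 ssh2",
    "sshd[103]: Accepted password for deploy from 198.51.100.4 port 5000 ssh2",
    "sshd[104]: Failed password for root from 203.0.113.7 port 4244 ssh2",
    "sshd[105]: Failed password for deploy from 198.51.100.4 port 5001 ssh2",
]
```

Calling `suspicious_sources(SAMPLE_LOG)` flags only the address with repeated failures, which could then feed an alert or a temporary block.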

Cloud Infrastructure Security

By choosing a reputable cloud service provider, implementing strong access controls and encryption, regularly monitoring and auditing cloud infrastructure, and backing up data stored in the cloud, organizations can enhance the security of their cloud infrastructure. These measures help protect sensitive data, maintain data availability, and ensure the overall integrity and resilience of cloud-based systems and applications.

Choosing a reputable and secure cloud service provider is a critical first step in ensuring cloud infrastructure security. Organizations should thoroughly assess potential providers based on their security certifications, compliance with industry standards, data protection measures, and track record for security incidents. Selecting a trusted provider with robust security practices helps establish a solid foundation for securing data and applications in the cloud.

Implementing strong access controls and encryption for data in the cloud is crucial to protect against unauthorized access and data breaches. This includes using strong passwords, multi-factor authentication, and role-based access control (RBAC) to ensure that only authorized users can access cloud resources. Additionally, sensitive data should be encrypted both in transit and at rest within the cloud environment to safeguard it from potential interception or compromise.
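The combination of RBAC and multi-factor authentication can be sketched as a single authorization check. The role names, permission strings, and users below are hypothetical. Cloud providers implement this through their own identity and access management services, so treat this only as an illustration of the decision logic.

```python
from typing import Dict, Set

# Hypothetical roles and the permissions each one grants.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"storage:read"},
    "developer": {"storage:read", "storage:write"},
    "admin": {"storage:read", "storage:write", "iam:manage"},
}

# Hypothetical user-to-role assignments.
USER_ROLES: Dict[str, Set[str]] = {
    "alice": {"admin"},
    "bob": {"viewer"},
}


def is_authorized(user: str, permission: str, mfa_verified: bool) -> bool:
    """Grant access only if MFA passed and one of the user's roles allows it."""
    if not mfa_verified:
        return False
    roles = USER_ROLES.get(user, set())
    return any(permission in ROLE_PERMISSIONS.get(role, set())
               for role in roles)
```

Unknown users and unknown roles fall through to a denial, so a missing entry never grants access by accident.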

Regular monitoring and auditing of cloud infrastructure is vital to detect and respond to security incidents promptly. Organizations should implement tools and processes to monitor cloud resources, network traffic, and user activities for any suspicious or anomalous behavior. Regular audits should also be conducted to assess the effectiveness of security controls, identify potential vulnerabilities, and ensure compliance with security policies and regulations.

Backing up data stored in the cloud is essential for ensuring business continuity and data recoverability in the event of data loss, accidental deletion, or cloud service disruptions. Organizations should implement regular data backups and verify their integrity to mitigate the risk of permanent data loss. It is important to establish backup procedures and test data recovery processes to ensure that critical data can be restored effectively from the cloud backups.
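One simple way to verify backup integrity is to compare cryptographic checksums of the original and the restored copy. The sketch below assumes both are available as local files. The helper names are illustrative, and cloud storage services often expose their own checksum features that may be a better fit.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large backups do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_matches(original: Path, restored: Path) -> bool:
    """Return True if the restored file is byte-for-byte identical."""
    return sha256_of(original) == sha256_of(restored)
```

Running a check like this as part of a scheduled restore test gives early warning of silent corruption, before the backup is needed in an emergency.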

Incident Response and Recovery

A well-prepared and practiced incident response capability enables timely response, minimizes the impact of incidents, and improves overall resilience in the face of evolving cyber threats.

Developing an Incident Response Plan

Developing an incident response plan is crucial for effectively handling security incidents in a structured and coordinated manner. The plan should outline the roles and responsibilities of the incident response team, the procedures for detecting and reporting incidents, and the steps to be taken to mitigate the impact and restore normal operations. It should also include communication protocols, escalation procedures, and coordination with external stakeholders, such as law enforcement or third-party vendors.

Detecting and Responding to Security Incidents

Prompt detection and response to security incidents are vital to minimize damage and prevent further compromise. Organizations should deploy security monitoring tools and establish real-time alerting mechanisms to identify potential security incidents. Upon detection, the incident response team should promptly assess the situation, contain the incident, gather evidence, and initiate appropriate remediation steps to mitigate the impact and restore security.

Conducting Post-Incident Analysis and Implementing Improvements

After the resolution of a security incident, conducting a post-incident analysis is crucial to understand the root causes, identify vulnerabilities, and learn from the incident. This analysis helps organizations identify weaknesses in their security posture, processes, or technologies, and implement improvements to prevent similar incidents in the future. Lessons learned should be documented and incorporated into updated incident response plans and security measures.

Testing Incident Response and Recovery Procedures

Regularly testing incident response and recovery procedures is essential to ensure their effectiveness and identify any gaps or shortcomings. Organizations should conduct simulated exercises, such as tabletop exercises or full-scale incident response drills, to assess the readiness and efficiency of their incident response teams and procedures. Testing helps uncover potential weaknesses, validate response plans, and refine incident management processes, ensuring a more robust and efficient response during real incidents.

IT Infrastructure Security

Here's a table summarizing key aspects of IT infrastructure security:

| Aspect | Description |
| --- | --- |
| Threats | Common threats include malware/ransomware, phishing/social engineering, insider threats, DDoS attacks, data breaches/theft, and vulnerabilities in software/hardware. |
| Best Practices | Implementing strong access controls, regularly updating software/hardware, conducting security audits/risk assessments, encrypting sensitive data, using firewalls/intrusion detection systems, educating employees, and regularly backing up data/testing disaster recovery plans. |
| Network Security | Securing wireless networks, implementing VPNs, network segmentation/isolation, and monitoring/logging network activities. |
| Server Security | Hardening server configurations, implementing strong authentication/authorization, regularly updating software/firmware, and monitoring server logs/activities. |
| Cloud Security | Choosing a reputable cloud service provider, implementing strong access controls/encryption, monitoring/auditing cloud infrastructure, and backing up data stored in the cloud. |
| Incident Response/Recovery | Developing an incident response plan, detecting/responding to security incidents, conducting post-incident analysis/implementing improvements, and testing incident response/recovery procedures. |
| Emerging Trends/Technologies | Artificial Intelligence (AI)/Machine Learning (ML) in security, Zero Trust security model, blockchain technology for secure transactions, and IoT security considerations. |

Emerging Trends and Technologies in IT Infrastructure Security

Artificial Intelligence (AI) and Machine Learning (ML) in Security

Artificial Intelligence (AI) and Machine Learning (ML) are emerging trends in IT infrastructure security. These technologies can analyze vast amounts of data, detect patterns, and identify anomalies or potential security threats in real-time. AI and ML can be used for threat intelligence, behavior analytics, user authentication, and automated incident response. By leveraging AI and ML in security, organizations can enhance their ability to detect and respond to sophisticated cyber threats more effectively.
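To give a feel for what "identifying anomalies" means, here is a deliberately simple statistical stand-in: it flags observations that sit far from a baseline, measured in standard deviations (a z-score). This is not machine learning and not how any particular product works. The threshold and sample numbers are assumptions chosen for illustration.

```python
from statistics import mean, stdev
from typing import List, Sequence


def find_anomalies(baseline: Sequence[float],
                   observed: Sequence[float],
                   z_threshold: float = 3.0) -> List[float]:
    """Return observed values more than z_threshold deviations from baseline."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value is unusual.
        return [value for value in observed if value != mu]
    return [value for value in observed
            if abs(value - mu) / sigma > z_threshold]


# Hypothetical requests-per-minute figures for one service.
BASELINE_RPM = [100, 102, 98, 101, 99, 100, 103, 97]
```

Real ML-based detection learns richer patterns across many signals, but the underlying idea is the same: model normal behavior, then surface what deviates from it.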

Zero Trust Security Model

The Zero Trust security model is gaining popularity as a comprehensive approach to IT infrastructure security. Unlike traditional perimeter-based security models, Zero Trust assumes that no user or device should be inherently trusted, regardless of their location or network. It emphasizes strong authentication, continuous monitoring, and strict access controls based on the principle of "never trust, always verify." Implementing a Zero Trust security model helps organizations reduce the risk of unauthorized access and improve overall security posture.
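The "never trust, always verify" principle can be sketched as a per-request policy check in which network location grants nothing by itself. The field names and the set of checks below are illustrative assumptions, and real Zero Trust deployments evaluate many more signals.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class AccessRequest:
    user_authenticated: bool
    mfa_passed: bool
    device_compliant: bool
    on_corporate_network: bool  # recorded, but never used to grant access
    resource: str
    allowed_resources: FrozenSet[str]


def evaluate(request: AccessRequest) -> bool:
    """Every request must pass every check, wherever it comes from."""
    checks = (
        request.user_authenticated,
        request.mfa_passed,
        request.device_compliant,
        request.resource in request.allowed_resources,
    )
    return all(checks)
```

Note that `on_corporate_network` does not appear in the checks: a request from inside the office with a non-compliant device is denied, while a fully verified remote request is allowed.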

Blockchain Technology for Secure Transactions

Blockchain technology is revolutionizing secure transactions by providing a decentralized and tamper-resistant ledger. Its cryptographic mechanisms ensure the integrity and immutability of transaction data, reducing the reliance on intermediaries and enhancing trust. Blockchain can be used in various industries, such as finance, supply chain, and healthcare, to secure transactions, verify identities, and protect sensitive data. By leveraging blockchain technology, organizations can enhance security, transparency, and trust in their transactions.
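The tamper-resistance described above comes from linking each record to the hash of the previous one. The sketch below shows only that hash-chaining idea in a single process. It leaves out decentralization, consensus, and signatures, which a real blockchain also needs, and the block layout is an assumption made for illustration.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, data: Any, prev_hash: str) -> str:
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps({"index": index, "data": data, "prev": prev_hash},
                         sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain: List[Dict[str, Any]], data: Any) -> None:
    """Add a block that commits to everything before it."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV
    index = len(chain)
    chain.append({"index": index, "data": data, "prev": prev_hash,
                  "hash": block_hash(index, data, prev_hash)})


def is_valid(chain: List[Dict[str, Any]]) -> bool:
    """Recompute every hash; any edit to an earlier block breaks the chain."""
    prev_hash = GENESIS_PREV
    for position, block in enumerate(chain):
        expected = block_hash(block["index"], block["data"], block["prev"])
        if (block["index"] != position or block["prev"] != prev_hash
                or block["hash"] != expected):
            return False
        prev_hash = block["hash"]
    return True
```

Changing the data in any block changes its hash, which no longer matches the `prev` value stored in the next block, so the tampering is detectable.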

Internet of Things (IoT) Security Considerations

As the Internet of Things (IoT) continues to proliferate, securing IoT devices and networks is becoming a critical challenge. IoT devices often have limited computing resources and may lack robust security features, making them vulnerable to exploitation. Organizations need to consider implementing strong authentication, encryption, and access controls for IoT devices. They should also ensure that IoT networks are separate from critical infrastructure networks to mitigate potential risks. Proactive monitoring, patch management, and regular updates are crucial to address IoT security vulnerabilities and protect against potential IoT-related threats.

These advancements enable organizations to proactively address evolving threats, enhance data protection, and improve overall resilience in the face of a dynamic and complex cybersecurity landscape.

Supercharge your IT landscape with our Infrastructure Consulting! We specialize in efficiency, security, and tailored solutions. Contact us today for a consultation – your technology transformation starts here.

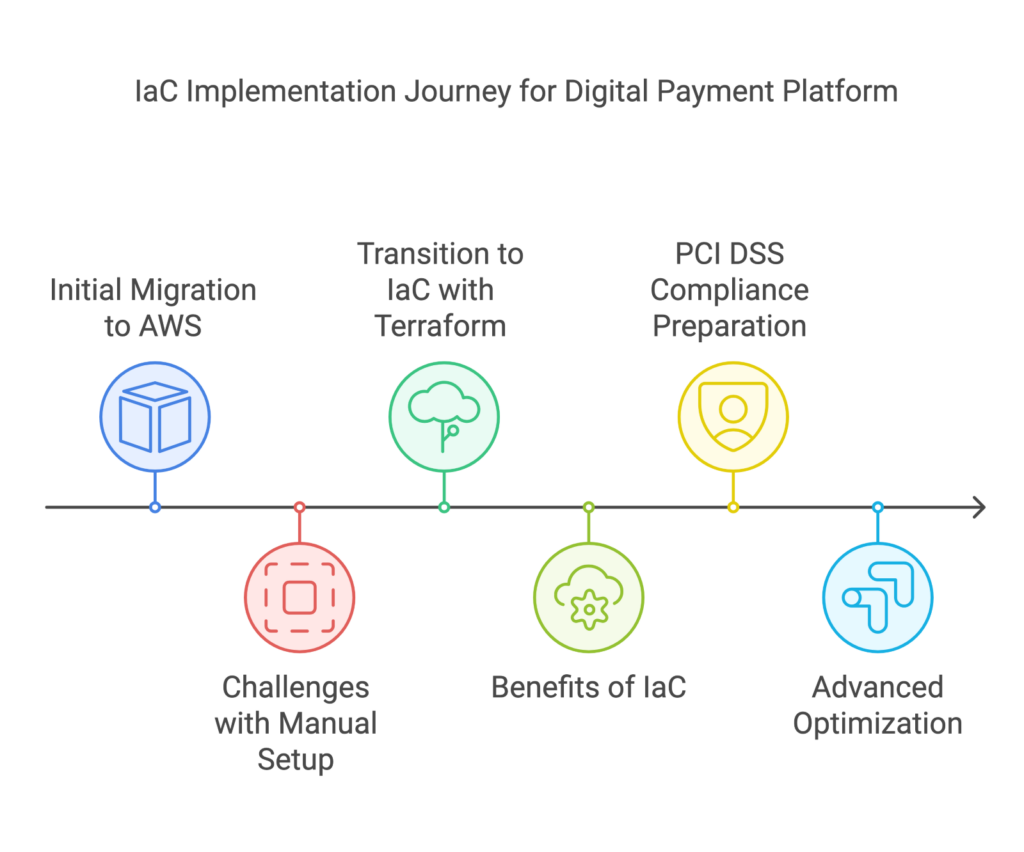

By treating infrastructure as software code, Infrastructure as Code (IaC) lets teams apply version control, automation, and repeatability to their cloud deployments.

This article explores the key concepts and benefits of IaC, shedding light on popular tools such as Terraform, Ansible, SaltStack, and Google Cloud Deployment Manager. We'll delve into their features, strengths, and use cases, providing insights into how they enable developers and operations teams to streamline their infrastructure management processes.

IaC Tools Comparison Table

Infrastructure as Code tools table:

| IaC Tool | Description | Supported Cloud Providers |
| --- | --- | --- |
| Terraform | Open-source tool for infrastructure provisioning | AWS, Azure, GCP, and more |
| Ansible | Configuration management and automation platform | AWS, Azure, GCP, and more |
| SaltStack | High-speed automation and orchestration framework | AWS, Azure, GCP, and more |
| Puppet | Declarative language-based configuration management | AWS, Azure, GCP, and more |
| Chef | Infrastructure automation framework | AWS, Azure, GCP, and more |
| CloudFormation | AWS-specific IaC tool for provisioning AWS resources | Amazon Web Services (AWS) |
| Google Cloud Deployment Manager | Infrastructure management tool for Google Cloud Platform | Google Cloud Platform (GCP) |
| Azure Resource Manager | Azure-native tool for deploying and managing resources | Microsoft Azure |
| OpenStack Heat | Orchestration engine for managing resources in OpenStack | OpenStack |

Exploring the Landscape of IaC Tools

The IaC paradigm is widely embraced in modern software development, offering a range of tools for deployment, configuration management, virtualization, and orchestration. Prominent containerization and orchestration tools like Docker and Kubernetes use YAML to express the desired end state. HashiCorp Packer is another such tool; it uses JSON templates and variables to build machine images.

The most popular configuration management tools, namely Ansible, Chef, and Puppet, adopt the IaC approach to define the desired state of the servers under their management.

Ansible functions by bootstrapping servers and orchestrating them based on predefined playbooks. These playbooks, written in YAML, outline the operations Ansible will execute and the targeted resources it will operate on. These operations can include starting services, installing packages via the system's package manager, or executing custom bash commands.

Both Chef and Puppet operate through central servers that issue instructions for orchestrating managed servers. Agent software needs to be installed on the managed servers. While Chef employs Ruby to describe resources, Puppet has its own declarative language.

Terraform seamlessly integrates with other IaC tools and DevOps systems, excelling in provisioning infrastructure resources rather than software installation and initial server configuration.

Unlike configuration management tools like Ansible and Chef, Terraform is not designed for installing software on target resources or scheduling tasks. Instead, Terraform utilizes providers to interact with supported resources.

Terraform can operate on a single machine without the need for a master or managed servers, unlike some other tools. It does not actively monitor the actual state of resources and automatically reapply configurations. Its primary focus is on orchestration. Typically, the workflow involves provisioning resources with Terraform and using a configuration management tool for further customization if necessary.

For Chef, older Terraform releases included a built-in provisioner that configures the Chef client on the orchestrated remote resources. This allowed all orchestrated servers to be added automatically to the master server and customized further using Chef cookbooks (Chef's infrastructure declarations). The provisioner was later deprecated and removed, so check the documentation for the Terraform version you use.

Optimize your infrastructure management with our DevOps expertise. Harness the power of IaC tools for streamlined provisioning, configuration, and orchestration. Scale efficiently and achieve seamless deployments. Contact us now.

Popular Infrastructure as Code Tools

Terraform

Terraform, introduced by HashiCorp in 2014, is an open-source Infrastructure as Code (IaC) solution. It operates based on a declarative approach to managing infrastructure, allowing you to define the desired end state of your infrastructure in a configuration file. Terraform then works to bring the infrastructure to that desired state. This configuration is applied using the PUSH method. Written in the Go programming language, Terraform incorporates its own language known as HashiCorp Configuration Language (HCL), which is used for writing configuration files that automate infrastructure management tasks.

Download: https://github.com/hashicorp/terraform

Terraform operates by analyzing the infrastructure code provided and constructing a graph that represents the resources and their relationships. This graph is then compared with the cached state of resources in the cloud. Based on this comparison, Terraform generates an execution plan that outlines the necessary changes to be applied to the cloud in order to achieve the desired state, including the order in which these changes should be made.
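The graph-and-compare workflow described above can be sketched in a few lines. This is a simplified illustration of the idea, not Terraform's actual algorithm or API: the resource names, attributes, and cached state are hypothetical, and real plans also handle details such as deleting resources in reverse dependency order.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Tuple

# Hypothetical desired configuration: resource -> (attributes, dependencies).
DESIRED = {
    "network": ({"cidr": "10.0.0.0/16"}, []),
    "subnet": ({"cidr": "10.0.1.0/24"}, ["network"]),
    "vm": ({"size": "small"}, ["subnet"]),
}

# Hypothetical cached state of what currently exists in the cloud.
STATE = {
    "network": {"cidr": "10.0.0.0/16"},
    "vm": {"size": "tiny"},
    "old_bucket": {"name": "legacy"},
}


def plan(desired: Dict[str, Tuple[dict, List[str]]],
         state: Dict[str, dict]) -> List[Tuple[str, str]]:
    """Order resources by dependency, then diff them against cached state."""
    graph = {name: set(deps) for name, (_, deps) in desired.items()}
    ordered = TopologicalSorter(graph).static_order()  # dependencies first
    actions = []
    for name in ordered:
        attributes = desired[name][0]
        if name not in state:
            actions.append(("create", name))
        elif state[name] != attributes:
            actions.append(("update", name))
    # Anything in state that is no longer declared gets removed.
    for name in state:
        if name not in desired:
            actions.append(("delete", name))
    return actions
```

For the sample data, `plan(DESIRED, STATE)` leaves the unchanged network alone, creates the missing subnet before updating the VM that depends on it, and schedules the undeclared bucket for deletion.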

Within Terraform, there are two primary components: providers and provisioners. Providers are responsible for interacting with cloud service providers, handling the creation, management, and deletion of resources. On the other hand, provisioners are used to execute specific actions on the remote resources created or on the local machine where the code is being processed.

Terraform offers support for managing fundamental components of various cloud providers, such as compute instances, load balancers, storage, and DNS records. Additionally, Terraform's extensibility allows for the incorporation of new providers and provisioners.

In the realm of Infrastructure as Code (IaC), Terraform's primary role is to ensure that the state of resources in the cloud aligns with the state expressed in the provided code. However, it's important to note that Terraform does not actively track deployed resources or monitor the ongoing bootstrapping of prepared compute instances. The subsequent section will delve into the distinctions between Terraform and other tools, as well as how they complement each other within the workflow.

Real-World Examples of Terraform Usage

Terraform has gained immense popularity across various industries due to its versatility and user-friendly nature. Here are a few real-world examples showcasing how Terraform is being utilized:

CI/CD Pipelines and Infrastructure for E-Health Platform

For our client, a development company specializing in Electronic Medical Records Software (EMRS) for government-based E-Health platforms and CRM systems in medical facilities, we leveraged Terraform to create the infrastructure using VMware ESXi. This allowed us to harness the full capabilities of the local cloud provider, ensuring efficient and scalable deployments.

Implementation of Nomad Cluster for Massively Parallel Computing

Our client, S-Cube, is a software development company specializing in creating a product based on a waveform inversion algorithm for building Earth models. They sought to enhance their infrastructure by separating the software from the underlying infrastructure, allowing them to focus solely on application development without the burden of infrastructure management.

To assist S-Cube in achieving their goals, Gart Solutions stepped in and leveraged the latest cloud development techniques and technologies, including Terraform. By utilizing Terraform, Gart Solutions helped restructure the architecture of S-Cube's SaaS platform, making it more economically efficient and scalable.

The Gart Solutions team worked closely with S-Cube to develop a new approach that takes infrastructure management to the next level. By adopting Terraform, they were able to define their infrastructure as code, enabling easy provisioning and management of resources across cloud and on-premises environments. This approach offered S-Cube the flexibility to run their workloads in both containerized and non-containerized environments, adapting to their specific requirements.

Streamlining Presale Processes with ChatOps Automation

Our client, Beyond Risk, is a dynamic technology company specializing in enterprise risk management solutions. They faced several challenges related to environmental management, particularly in managing the existing environment architecture and infrastructure code conditions, which required significant effort.

To address these challenges, Gart implemented ChatOps automation to streamline the presale processes. The implementation used the Slack API to create an interactive flow, AWS Lambda to implement the business logic, and GitHub Actions with Terraform Cloud for infrastructure automation.

One significant improvement was the addition of a Notification step, which helped us track the success or failure of Terraform operations. This allowed us to stay informed about the status of infrastructure changes and take appropriate actions accordingly.

Unlock the full potential of your infrastructure with our DevOps expertise. Maximize scalability and achieve flawless deployments. Drop us a line right now!

AWS CloudFormation

AWS CloudFormation is an Infrastructure as Code (IaC) tool provided by Amazon Web Services (AWS). It simplifies the provisioning and management of AWS resources through declarative CloudFormation templates. The sections below cover its key features and advantages, followed by case studies of its adoption.

Key Features and Advantages:

Infrastructure as Code: CloudFormation enables you to define and manage your infrastructure resources using templates written in JSON or YAML. This approach ensures consistent, repeatable, and version-controlled deployments of your infrastructure.

Automation and Orchestration: CloudFormation automates the provisioning and configuration of resources, ensuring that they are created, updated, or deleted in a controlled and predictable manner. It handles resource dependencies, allowing for the orchestration of complex infrastructure setups.

Infrastructure Consistency: With CloudFormation, you can define the desired state of your infrastructure and deploy it consistently across different environments. This reduces configuration drift and ensures uniformity in your infrastructure deployments.

Change Management: CloudFormation utilizes stacks to manage infrastructure changes. Stacks enable you to track and control updates to your infrastructure, ensuring that changes are applied consistently and minimizing the risk of errors.

Scalability and Flexibility: CloudFormation supports a wide range of AWS resource types and features. This allows you to provision and manage compute instances, databases, storage volumes, networking components, and more. It also offers flexibility through custom resources and supports parameterization for dynamic configurations.
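To show what a JSON template with parameterization looks like, the sketch below builds a minimal CloudFormation template as a Python dictionary and prints it as JSON. The logical names `BucketName` and `ExampleBucket` and the default value are hypothetical. The top-level keys, the `AWS::S3::Bucket` resource type, and the `Ref` function are standard CloudFormation template elements.

```python
import json


def build_template(default_bucket_name: str) -> dict:
    """Return a minimal template with one parameterized S3 bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative stack with one parameterized S3 bucket",
        "Parameters": {
            # Hypothetical parameter; its value can be overridden per stack.
            "BucketName": {"Type": "String", "Default": default_bucket_name},
        },
        "Resources": {
            "ExampleBucket": {
                "Type": "AWS::S3::Bucket",
                # Ref resolves to the parameter value at deployment time.
                "Properties": {"BucketName": {"Ref": "BucketName"}},
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_template("example-bucket-name"), indent=2))
```

Because the template is plain data, it can be stored in version control and deployed to several environments with different parameter values, which is the consistency benefit described above.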

Case studies showcasing CloudFormation adoption

Netflix leverages CloudFormation for managing their infrastructure deployments at scale. They use CloudFormation templates to provision resources, define configurations, and enable repeatable deployments across different regions and accounts.

Yelp utilizes CloudFormation to manage their AWS infrastructure. They use CloudFormation templates to provision and configure resources, enabling them to automate and simplify their infrastructure deployments.

Dow Jones, a global news and business information provider, utilizes CloudFormation for managing their AWS resources. They leverage CloudFormation to define and provision their infrastructure, enabling faster and more consistent deployments.

Ansible

Ansible is perhaps the best-known configuration management system among DevOps engineers. It is written in Python and describes configurations declaratively in YAML playbooks. It uses the PUSH method to automate software configuration and deployment.

What are the main differences between Ansible and Terraform? Ansible is a versatile automation tool that can be used to solve various tasks, while Terraform is a tool specifically designed for "infrastructure as code" tasks, which means transforming configuration files into functioning infrastructure.

Use cases highlighting Ansible's versatility

Configuration Management: Ansible is commonly used for configuration management, allowing you to define and enforce the desired configurations across multiple servers or network devices. It ensures consistency and simplifies the management of configuration drift.

Application Deployment: Ansible can automate the deployment of applications by orchestrating the installation, configuration, and updates of application components and their dependencies. This enables faster and more reliable application deployments.

Cloud Provisioning: Ansible integrates seamlessly with various cloud providers, enabling the provisioning and management of cloud resources. It allows you to define infrastructure in a cloud-agnostic way, making it easy to deploy and manage infrastructure across different cloud platforms.

Continuous Delivery: Ansible can be integrated into a continuous delivery pipeline to automate the deployment and testing of applications. It allows for efficient and repeatable deployments, reducing manual errors and accelerating the delivery of software updates.

Google Cloud Deployment Manager

Google Cloud Deployment Manager is a robust Infrastructure as Code (IaC) solution offered by Google Cloud Platform (GCP). It empowers users to define and manage their infrastructure resources using Deployment Manager templates, which facilitate automated and consistent provisioning and configuration.

Deployment Manager configurations are written in YAML, and reusable templates are written in Jinja2 or Python. Together they specify the desired state of resources across GCP services, including networks, virtual machines, storage, and more. Users can set properties and declare dependencies and references between resources, which makes it possible to describe intricate infrastructures.
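As an illustration, Deployment Manager also accepts templates written in Python, where a function receives a context object and returns the resources to create. The sketch below follows that shape under some assumptions: the suffix and location properties are hypothetical, the storage.v1.bucket type is used only as a simple example, and FakeContext is a local stand-in so the function can be tried without GCP.

```python
def GenerateConfig(context):
    """Entry point that a Python template exposes to Deployment Manager."""
    # "suffix" and "location" are hypothetical template properties.
    bucket_name = context.env["deployment"] + "-" + context.properties["suffix"]
    return {
        "resources": [
            {
                "name": bucket_name,
                "type": "storage.v1.bucket",
                "properties": {"location": context.properties["location"]},
            }
        ]
    }


class FakeContext:
    """Local stand-in for the context object, only for trying the template."""

    def __init__(self, env, properties):
        self.env = env
        self.properties = properties


config = GenerateConfig(
    FakeContext({"deployment": "demo"}, {"suffix": "assets", "location": "EU"})
)
print(config)
```

The template only describes the desired resource; creating, updating, or deleting it is left to the service.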

Deployment Manager seamlessly integrates with a diverse range of GCP services and ecosystems, providing comprehensive resource management capabilities. It supports GCP's native services, including Compute Engine, Cloud Storage, Cloud SQL, Cloud Pub/Sub, among others, enabling users to effectively manage their entire infrastructure.

Puppet

Puppet is a widely adopted configuration management tool that helps automate the management and deployment of infrastructure resources. It provides a declarative language and a flexible framework for defining and enforcing desired system configurations across multiple servers and environments.

Puppet enables efficient and centralized management of infrastructure configurations, making it easier to maintain consistency and enforce desired states across a large number of servers. It automates repetitive tasks, such as software installations, package updates, file management, and service configurations, saving time and reducing manual errors.

Puppet operates using a client-server model, where Puppet agents (client nodes) communicate with a central Puppet server to retrieve configurations and apply them locally. The Puppet server acts as a repository for configurations and distributes them to the agents based on predefined rules.
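The pull model described above can be sketched in a few lines of Python. This is not Puppet code: ConfigServer, Agent, and the resource names are hypothetical, and the "state" is just a dictionary. The sketch only shows the general pattern of an agent fetching its desired configuration and changing what differs.

```python
class ConfigServer:
    """Hypothetical central server holding the desired state per node."""

    def __init__(self, catalogs):
        self._catalogs = catalogs

    def catalog_for(self, node_name):
        return dict(self._catalogs.get(node_name, {}))


class Agent:
    """Hypothetical agent that pulls its catalog and applies it locally."""

    def __init__(self, name, server):
        self.name = name
        self.server = server
        self.state = {}  # resource name -> current value on this node

    def run_once(self):
        """Pull the catalog, change only what differs, return what changed."""
        changed = []
        for resource, desired in self.server.catalog_for(self.name).items():
            if self.state.get(resource) != desired:
                self.state[resource] = desired
                changed.append(resource)
        return sorted(changed)


server = ConfigServer(
    {"web01": {"package:nginx": "installed", "service:nginx": "running"}}
)
agent = Agent("web01", server)
first_run = agent.run_once()
second_run = agent.run_once()
print(first_run, second_run)
```

The second run changes nothing because the node already matches its catalog. That idempotence is what lets agents check in repeatedly without side effects.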

Pulumi

Pulumi is a modern Infrastructure as Code (IaC) tool that enables users to define, deploy, and manage infrastructure resources using familiar programming languages. It combines the concepts of IaC with the power and flexibility of general-purpose programming languages to provide a seamless and intuitive infrastructure management experience.

Pulumi has a growing ecosystem of libraries and plugins, offering additional functionality and integrations with external tools and services. Users can leverage existing libraries and modules from their programming language ecosystems, enhancing the capabilities of their infrastructure code.

There are often situations where it is necessary to deploy an application simultaneously across multiple clouds, combine cloud infrastructure with a managed Kubernetes cluster, or anticipate future service migration. One possible solution for creating a universal configuration is to use Pulumi, which can deploy applications to various clouds (GCP, AWS, Azure, Alibaba Cloud), Kubernetes, hosting providers (such as Linode and DigitalOcean), virtual infrastructure management systems (OpenStack), and local Docker environments.

Pulumi integrates with popular CI/CD systems and Git repositories, allowing for the creation of infrastructure as code pipelines.

Users can automate the deployment and management of infrastructure resources as part of their overall software delivery process.

SaltStack

SaltStack is a powerful Infrastructure as Code (IaC) tool that automates the management and configuration of infrastructure resources at scale. It provides a comprehensive solution for orchestrating and managing infrastructure through a combination of remote execution, configuration management, and event-driven automation.

SaltStack enables remote execution across a large number of servers, allowing administrators to execute commands, run scripts, and perform tasks on multiple machines simultaneously. It provides a robust configuration management framework, allowing users to define desired states for infrastructure resources and ensure their continuous enforcement.

SaltStack is designed to handle massive infrastructures efficiently, making it suitable for organizations with complex and distributed environments.

SaltStack stands out among the tools mentioned in this article. Its primary design goal was high speed. To achieve it, the architecture is built around a Salt master server and Salt minion agents that exchange messages over a shared bus. An agentless mode that pushes commands over SSH (Salt SSH) is also available.

The project is developed in Python and is hosted in the repository at https://github.com/saltstack/salt.

The high speed is achieved through asynchronous task execution. The idea is that the Salt Master communicates with Salt Minions using a publish/subscribe model, where the master publishes a task and the minions receive and asynchronously execute it. They interact through a shared bus, where the master sends a single message specifying the criteria that minions must meet, and they start executing the task. The master simply waits for information from all sources, knowing how many minions to expect a response from. To some extent, this operates on a "fire and forget" principle.

In the event of the master going offline, the minion will still complete the assigned work, and upon the master's return, it will receive the results.
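The publish/subscribe flow described above can be sketched with Python's asyncio. This is a simplified model, not Salt's actual transport or API: the minion and publish functions, the "grains" dictionaries used for targeting, and the command string are all assumptions made for illustration.

```python
import asyncio


async def minion(name, grains, job):
    """Run the job only if this minion matches the target criteria."""
    if any(grains.get(key) != value for key, value in job["target"].items()):
        return None  # not targeted: ignore the published message
    await asyncio.sleep(0)  # placeholder for real, asynchronous work
    return name, f"{job['command']} ok"


async def publish(job, minions):
    """Publish one job to every minion and gather the returns that come back."""
    tasks = [minion(name, grains, job) for name, grains in minions.items()]
    results = await asyncio.gather(*tasks)
    return dict(result for result in results if result is not None)


minions = {
    "web1": {"role": "web", "os": "linux"},
    "web2": {"role": "web", "os": "linux"},
    "db1": {"role": "db", "os": "linux"},
}
job = {"target": {"role": "web"}, "command": "restart nginx"}
returns = asyncio.run(publish(job, minions))
print(returns)
```

The master sends a single message, and each minion decides for itself whether the criteria apply, so only web1 and web2 report back here. A real deployment would also need timeouts and handling for minions that never answer.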

The interaction architecture can be quite complex, as illustrated in the vRealize Automation SaltStack Config diagram below.

When comparing SaltStack and Ansible, due to architectural differences, Ansible spends more time processing messages. However, unlike SaltStack's minions, which essentially act as agents, Ansible does not require agents to function. SaltStack is significantly easier to deploy compared to Ansible, which requires a series of configurations to be performed. SaltStack does not require extensive script writing for its operation, whereas Ansible is quite reliant on scripting for interacting with infrastructure.

Additionally, SaltStack can have multiple masters, so if one fails, control is not lost. Ansible, on the other hand, can have a secondary node in case of failure. Finally, Salt is an open-source project hosted on GitHub, and SaltStack the company was acquired by VMware in 2020, while Ansible is backed by Red Hat.

SaltStack integrates seamlessly with cloud platforms, virtualization technologies, and infrastructure services.

It provides built-in modules and functions for interacting with popular cloud providers, making it easier to manage and provision resources in cloud environments.

SaltStack offers a highly extensible framework that allows users to create custom modules, states, and plugins to extend its functionality.

It has a vibrant community contributing to a rich ecosystem of Salt modules and extensions.

Chef

Chef is a widely recognized and powerful Infrastructure as Code (IaC) tool that automates the management and configuration of infrastructure resources. It provides a comprehensive framework for defining, deploying, and managing infrastructure across various platforms and environments.

Chef allows users to define infrastructure configurations as code, making it easier to manage and maintain consistent configurations across multiple servers and environments.

It uses a Ruby-based domain-specific language (DSL), the Chef DSL, to define the desired state of resources and systems.

Chef Solo

Chef also offers a standalone mode called Chef Solo, which does not require a central Chef server.

Chef Solo allows for the local execution of cookbooks and recipes on individual systems without the need for a server-client setup.

Benefits of Infrastructure as Code Tools

Infrastructure as Code (IaC) tools offer numerous benefits that contribute to efficient, scalable, and reliable infrastructure management.

IaC tools automate the provisioning, configuration, and management of infrastructure resources. This automation eliminates manual processes, reducing the potential for human error and increasing efficiency.

With IaC, infrastructure configurations are defined and deployed consistently across all environments. This ensures that infrastructure resources adhere to desired states and defined standards, leading to more reliable and predictable deployments.

IaC tools enable easy scalability by providing the ability to define infrastructure resources as code. Scaling up or down becomes a matter of modifying the code or configuration, allowing for rapid and flexible infrastructure adjustments to meet changing demands.

Infrastructure code can be stored and version-controlled using tools like Git. This enables collaboration among team members, tracking of changes, and easy rollbacks to previous configurations if needed.

Infrastructure code can be structured into reusable components, modules, or templates. These components can be shared across projects and environments, promoting code reusability, reducing duplication, and speeding up infrastructure deployment.

Infrastructure as Code tools automate the provisioning and deployment processes, significantly reducing the time required to set up and configure infrastructure resources. This leads to faster application deployment and delivery cycles.

Infrastructure as Code tools provide an audit trail of infrastructure changes, making it easier to track and document modifications. They also assist in achieving compliance by enforcing predefined policies and standards in infrastructure configurations.

Infrastructure code can be used to recreate and recover infrastructure quickly in the event of a disaster. By treating infrastructure as code, organizations can easily reproduce entire environments, reducing downtime and improving disaster recovery capabilities.

Some IaC tools abstract infrastructure configurations from specific cloud providers, allowing a degree of portability across multiple cloud platforms. This flexibility helps organizations use different cloud services based on specific requirements or migrate between cloud providers more easily.

Infrastructure as Code tools provide visibility into infrastructure resources and their associated costs. This visibility enables organizations to optimize resource allocation, identify unused or underutilized resources, and make informed decisions for cost optimization.

Considerations for Choosing an IaC Tool

When selecting an Infrastructure as Code (IaC) tool, it's essential to consider various factors to ensure it aligns with your specific requirements and goals.

Compatibility with Infrastructure and Environments

Determine if the IaC tool supports the infrastructure platforms and technologies you use, such as public clouds (AWS, Azure, GCP), private clouds, containers, or on-premises environments.

Check if the tool integrates well with existing infrastructure components and services you rely on, such as databases, load balancers, or networking configurations.

Supported Programming Languages

Consider the programming languages supported by the IaC tool. Choose a tool that offers support for languages that your team is familiar with and comfortable using.

Ensure that the tool's supported languages align with your organization's coding standards and preferences.

Learning Curve and Ease of Use

Evaluate the learning curve associated with the IaC tool. Consider the complexity of its syntax, the availability of documentation, tutorials, and community support.

Determine if the tool provides an intuitive and user-friendly interface or a command-line interface (CLI) that suits your team's preferences and skill sets.

Declarative or Imperative Approach

Decide whether you prefer a declarative or imperative approach to infrastructure management.

Declarative tools focus on defining the desired state of infrastructure resources, while imperative Infrastructure as Code tools allow more procedural control over infrastructure changes.

Consider which approach aligns better with your team's mindset and infrastructure management style.
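The difference between the two approaches can be shown with a small Python sketch. The function names and the web-N naming scheme are hypothetical. The imperative function performs an explicit step, while the declarative function compares current and desired state and derives the steps itself.

```python
def imperative_scale(servers, add):
    """Imperative: carry out an explicit step ("add N servers")."""
    start = len(servers)
    return servers + [f"web-{start + i + 1}" for i in range(add)]


def declarative_plan(current, desired_count):
    """Declarative: derive the steps needed to reach the desired state."""
    diff = desired_count - len(current)
    if diff > 0:
        return [("create", f"web-{len(current) + i + 1}") for i in range(diff)]
    if diff < 0:
        return [("delete", name) for name in current[desired_count:]]
    return []


current = ["web-1", "web-2"]
scaled = imperative_scale(current, 2)
plan = declarative_plan(current, 4)
plan_again = declarative_plan(scaled, 4)
print(scaled, plan, plan_again)
```

Running the imperative step twice would produce six servers, whereas asking the declarative planner for four servers a second time yields an empty plan. That property is a large part of why declarative tools are easier to re-run safely.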

Extensibility and Customization

Evaluate the extensibility and customization options provided by the IaC tool. Check if it allows the creation of custom modules, plugins, or extensions to meet specific requirements.

Consider the availability of a vibrant community and ecosystem around the tool, providing additional resources, libraries, and community-contributed content.

Collaboration and Version Control

Assess the tool's collaboration features and support for version control systems like Git.

Determine if it allows multiple team members to work simultaneously on infrastructure code, provides conflict resolution mechanisms, and supports code review processes.

Security and Compliance

Examine the tool's security features and its ability to meet security and compliance requirements.

Consider features like access controls, encryption, secrets management, and compliance auditing capabilities to ensure the tool aligns with your organization's security standards.

Community and Support

Evaluate the size and activity of the tool's community, as it can greatly impact the availability of resources, forums, and support.

Consider factors like the frequency of updates, bug fixes, and the responsiveness of the tool's maintainers to address issues or feature requests.

Cost and Licensing

Assess the licensing model of the IaC tool. Some IaC tools have open-source versions with community support, while others offer enterprise editions with additional features and support.

Consider the total cost of ownership, including licensing fees, training costs, infrastructure requirements, and ongoing maintenance.

Roadmap and Future Development

Research the tool's roadmap and future development plans to ensure its continued relevance and compatibility with evolving technologies and industry trends.

By considering these factors, you can select the Infrastructure as Code tool that best fits your organization's needs, infrastructure requirements, team capabilities, and long-term goals.

To maintain smooth operation, you need to scale your resources. This article delves into the two main scaling strategies: horizontal scaling (spreading out) and vertical scaling (gearing up).

Even if a company pauses its processes and does not grow or develop, its data will still accumulate, and its information systems will become more complex. Computing requests require storing large amounts of data in the server's memory and allocating significant resources.

When corporate servers can no longer handle the load, a company has two options: purchase additional capacity for existing equipment or buy another server to offload some of the load. In this article, we will discuss the advantages and disadvantages of both approaches to building IT infrastructure.

Cloud Scalability

What is scaling? It is the ability to increase project performance in minimal time by adding resources.

Therefore, one of the priority tasks of IT specialists is to ensure the scalability of the infrastructure, i.e., the ability to quickly and without unnecessary expenses expand the volume and performance of the IT solution.

Usually, scaling does not involve rewriting the code, but either adding servers or increasing the resources of the existing one. These two options are known as horizontal and vertical scaling, respectively.

Vertical Scaling or Scale Up Infrastructure

Vertical scaling involves adding more RAM, disks, etc., to an existing server. This approach is used when the performance limit of infrastructure elements is exhausted.

Advantages of vertical scaling:

If a company's existing equipment runs short of resources, its components can be replaced with more powerful ones.

Increasing the performance of each component within a single node increases the performance of the IT infrastructure as a whole.

However, vertical scaling also has disadvantages. The most obvious one is the limitation in increasing performance. When a company reaches its limits, it will need to purchase a more powerful system and then migrate its IT infrastructure to it. Such a transfer requires time and money and increases the risks of downtime during the system transfer.

The second disadvantage of vertical scaling is that the single server is a single point of failure: if it fails, the software stops working, and the company needs time to restore its functionality. For this reason, vertical scaling often goes hand in hand with expensive, highly reliable hardware.

When to Scale Up Infrastructure

While scaling out offers advantages in many scenarios, scaling up infrastructure remains relevant in specific situations. Here are some key factors to consider when deciding when to scale up:

Limited growth

If your application experiences predictable and limited growth, scaling up can be a simpler and more efficient solution. Upgrading existing hardware with increased processing power, memory, and storage can often handle the anticipated growth without the complexities of managing a distributed system.

Single server bottleneck

Scaling up can be effective if you experience a performance bottleneck confined to a single server or resource type. For example, if your application primarily suffers from CPU limitations, adding more cores to the existing server might be sufficient to address the bottleneck.

Simplicity and familiarity

If your team possesses expertise and experience in managing a single server environment, scaling up might be a more familiar and manageable approach compared to the complexities of setting up and managing a distributed system with multiple nodes.

Limited resources

In scenarios with limited financial or physical resources, scaling up may be the more feasible option compared to the initial investment required for additional hardware and the ongoing costs associated with managing a distributed system.

Latency-sensitive applications

Applications with real-time processing requirements and low latency needs, such as high-frequency trading platforms or online gaming servers, can benefit from the reduced communication overhead associated with a single server architecture. Scaling up with high-performance hardware can ensure minimal latency and responsiveness.

Stateless applications

For stateless applications that don't require storing data on individual servers, scaling up can be a viable option. These applications can typically be easily migrated to a more powerful server without significant configuration changes.

In these situations, scaling up (or vertical scaling) can provide a sufficient and manageable solution for your specific needs and infrastructure constraints.

Example Situations of When to Scale Up:

E-commerce platform experiencing increased traffic during holiday seasons

Consider an e-commerce platform that experiences a surge in traffic during holiday seasons or special sales events. As more users flock to the website to make purchases, the existing infrastructure may struggle to handle the sudden influx of requests, leading to slow response times and potential downtime.

To address this issue, the e-commerce platform can opt to scale up its resources by upgrading its servers or adding more powerful processing units. By bolstering its infrastructure, the platform can better accommodate the heightened traffic load, ensuring that users can seamlessly browse, add items to their carts, and complete transactions without experiencing delays or disruptions.

Database management system for a growing social media platform

Imagine a social media platform that is rapidly gaining users and generating vast amounts of user-generated content, such as posts, comments, and media uploads. As the platform's database accumulates more data, the performance of the database management system (DBMS) may start to degrade, leading to slower query execution times and reduced responsiveness.

In response to this growth, the social media platform can choose to scale up its database infrastructure by deploying more powerful servers with higher processing capabilities and additional storage capacity. By upgrading its DBMS hardware, the platform can efficiently handle the increasing volume of user data, ensuring that users can swiftly retrieve and interact with content on the platform without experiencing delays or downtime.

Financial institution processing a growing number of transactions

Consider a financial institution, such as a bank or credit card company, that processes a large volume of transactions daily. As the institution's customer base expands and the number of transactions continues to grow, the existing processing infrastructure may struggle to keep up with the increasing workload, leading to delays in transaction processing and potential system failures.

To maintain smooth and efficient operations, the financial institution can opt to scale up its transaction processing systems by investing in more robust hardware solutions. By upgrading its servers, networking equipment, and database systems, the institution can enhance its processing capabilities, ensuring that transactions are processed quickly and accurately, and that customers have uninterrupted access to banking services.

Horizontal Scaling or Scale-Out

Horizontal scaling involves adding new nodes to the IT infrastructure. Instead of increasing the capacity of individual components of a node, the company adds new servers. With each additional node, the load is redistributed between all nodes.

Advantages of horizontal scaling:

This type of scaling allows you to use inexpensive equipment that provides enough power for workloads.

There is no need to migrate the infrastructure.

If necessary, virtual machines can be migrated to another infrastructure without stopping operation.

The company can organize work without downtime due to the fact that software instances operate on several nodes of the IT infrastructure. If one of them fails, the load will be distributed between the remaining nodes, and the program will continue to work.

With horizontal scaling, you can avoid purchasing expensive equipment and reduce hardware costs, in some cases by as much as 20 times.
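The failover behavior described in the list above can be sketched as a round-robin load balancer that skips failed nodes. This is a minimal illustration: the RoundRobinBalancer class and the node names are hypothetical, and real load balancers detect failures through health checks rather than a manual mark_down call.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hypothetical balancer that rotates requests across healthy nodes."""

    def __init__(self, nodes):
        self.healthy = {node: True for node in nodes}
        self._ring = cycle(nodes)

    def mark_down(self, node):
        self.healthy[node] = False

    def route(self):
        """Return the next healthy node, skipping failed ones."""
        for _ in range(len(self.healthy)):
            node = next(self._ring)
            if self.healthy[node]:
                return node
        raise RuntimeError("no healthy nodes available")


lb = RoundRobinBalancer(["node-a", "node-b", "node-c"])
before = [lb.route() for _ in range(3)]
lb.mark_down("node-b")
after = [lb.route() for _ in range(4)]
print(before, after)
```

After node-b is marked as failed, requests alternate between node-a and node-c, so the service keeps running on the remaining nodes at reduced capacity.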

When to scale out infrastructure

There are several key factors to consider when deciding when to scale out infrastructure:

Horizontal growth

If your application or service anticipates sustained growth in data, users, or workload over time, scaling out offers a more scalable and cost-effective approach than repeated scaling up. Adding new nodes allows you to incrementally increase capacity as needed, rather than investing in significantly larger hardware upgrades each time.

Performance bottlenecks

If you experience performance bottlenecks because resources (CPU, memory, storage) are exhausted on the servers you already run, scaling out can help distribute the workload and alleviate the bottleneck. This is most straightforward for stateless applications; stateful applications, where data is stored on individual servers, also need a way to partition or replicate that data across nodes.

Distributed processing

When dealing with large datasets or complex tasks that require parallel processing, scaling out allows you to distribute the workload across multiple nodes, significantly reducing processing time and improving efficiency. This is often used in big data processing and scientific computing.
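As a rough sketch of distributed processing, the example below splits a dataset into chunks, processes each chunk independently, and combines the partial results. The worker threads stand in for separate nodes, and split and process_chunk are hypothetical helpers. In a real cluster the chunks would be sent to different machines.

```python
from concurrent.futures import ThreadPoolExecutor


def split(items, parts):
    """Split items into `parts` nearly equal, contiguous chunks."""
    size, extra = divmod(len(items), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def process_chunk(chunk):
    """Placeholder for the per-node work; here, a sum of squares."""
    return sum(x * x for x in chunk)


data = list(range(1, 101))
with ThreadPoolExecutor(max_workers=4) as pool:
    partials = list(pool.map(process_chunk, split(data, 4)))
total = sum(partials)
print(partials, total)
```

The pattern works because each chunk can be processed without knowing about the others. Workloads with heavy dependencies between chunks benefit far less from adding nodes.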

Fault tolerance and redundancy

Scaling out can enhance fault tolerance and redundancy. If one server fails, the remaining nodes can handle the workload, minimizing downtime and ensuring service continuity. This is crucial for mission-critical applications where downtime can have significant consequences.

Microservices architecture

If your application employs a microservices architecture, where each service is independent and modular, scaling out individual microservices allows you to scale specific functionalities based on their specific needs. This offers greater flexibility and efficiency compared to scaling the entire application as a single unit.

Cost-effectiveness

While scaling out may require an initial investment in additional servers, in the long run, it can be more cost-effective than repeatedly scaling up. Additionally, cloud-based solutions often offer pay-as-you-go models which allow you to scale resources dynamically and only pay for what you use.

In summary, scaling out infrastructure is a good choice when you anticipate sustained growth, encounter performance bottlenecks due to resource limitations, require distributed processing for large tasks, prioritize fault tolerance and redundancy, utilize a microservices architecture, or seek cost-effective long-term scalability. Remember to carefully assess your specific needs and application characteristics to determine the optimal approach for your infrastructure.

Example Situations of When to Scale Out

Cloud-based software-as-a-service (SaaS) application facing increased demand

Consider a cloud-based SaaS application that provides project management tools to businesses of all sizes. As the application gains popularity and attracts more users, the demand for its services may skyrocket, putting strain on the existing infrastructure and causing performance degradation.

To meet the growing demand and maintain optimal performance, the SaaS provider can scale out its infrastructure by leveraging cloud computing resources such as auto-scaling groups and load balancers. By dynamically adding more virtual servers or container instances based on demand, the provider can ensure that users have access to the application's features and functionalities without experiencing slowdowns or service disruptions.
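The decision an auto-scaling group makes can be approximated with a simple proportional rule: size the fleet so that average utilization returns to a target value. The sketch below is an assumption-laden illustration, not any provider's actual algorithm. The function name, the utilization percentages, and the min_n and max_n bounds are all hypothetical.

```python
import math


def desired_instances(current, observed_util, target_util, min_n=1, max_n=20):
    """Proportional rule: size the fleet so utilization returns to target."""
    wanted = math.ceil(current * observed_util / target_util)
    # Clamp to the configured fleet bounds.
    return max(min_n, min(max_n, wanted))


scale_out = desired_instances(4, 90, 60)   # load above target
scale_in = desired_instances(4, 30, 60)    # load below target
capped = desired_instances(4, 600, 60)     # demand beyond the upper bound
print(scale_out, scale_in, capped)
```

With four instances at 90% utilization and a 60% target, the rule asks for six instances. At 30% it asks for two, and the upper bound keeps a traffic spike from growing the fleet without limit.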

Content delivery network (CDN) handling a surge in internet traffic

Imagine a content delivery network (CDN) that delivers multimedia content, such as videos, images, and web pages, to users around the world. During peak traffic periods, such as major events or viral content trends, the CDN may experience a significant increase in incoming requests, leading to congestion and delays in content delivery.

To cope with the surge in internet traffic, the CDN can scale out its infrastructure by deploying additional edge servers or caching nodes in strategic locations. By expanding its network footprint and distributing content closer to end users, the CDN can reduce latency and improve the speed and reliability of content delivery, ensuring a seamless browsing experience for users worldwide.

E-commerce shopping cart

An e-commerce platform utilizes microservices architecture, where each service is independent and responsible for specific tasks like managing shopping carts. Scaling out individual microservices allows for handling increased user traffic and order volume without impacting other functionalities of the platform. This approach provides better flexibility and scalability compared to scaling up the entire system as a single unit.

These examples show where scaling out, that is, adding more nodes horizontally, is the better fit: unpredictable workloads, distributed processing needs, and independent scaling of services within a larger system.

Choosing the Right Approach

The decision between horizontal and vertical scaling should be based on specific system requirements, constraints, and objectives.

Some considerations include:

Workload characteristics: Consider the nature of your workload. Horizontal scaling is well-suited for distributed and stateless workloads, while vertical scaling may be preferable for single-threaded or stateful workloads.

Cost and budget: Evaluate your budget and resource availability. Horizontal scaling can be cost-effective, especially when using commodity hardware, while vertical scaling may require a more significant upfront investment in high-performance hardware.

Performance and maintenance: Assess the performance gains and management complexity associated with each approach. Consider how well each option aligns with your operational capabilities and objectives.

Future growth: Think about your system's long-term scalability needs. If you anticipate significant growth, horizontal scaling may provide greater flexibility.

Here are some additional tips for choosing the right scaling approach:

Start with a small-scale deployment and monitor performance: This will help you understand your workload's requirements and identify any potential bottlenecks.

Use a combination of horizontal and vertical scaling: This can provide the best balance of performance, cost, and flexibility.

Consider using a cloud-based platform: Cloud providers offer a variety of scalable and cost-effective solutions that can be tailored to your specific needs.

By carefully considering all of these factors, you can choose the best scaling approach for your company's needs.

How Gart Can Help You with Cloud Scalability

Ultimately, the determining factors are your cloud needs and cost structure. Without a reliable forecast of both, any business can fall into the trap of choosing the wrong scaling strategy. Therefore, cost assessment should be a priority. Additionally, optimizing cloud costs remains a complex task regardless of which scaling approach you choose.

Here are some ways Gart can help you with cloud scalability:

Assess your cloud needs and cost structure: We can help you understand your current cloud usage and identify areas where you can optimize your costs.

Develop a cloud scaling strategy: We can help you choose the right scaling approach for your specific needs and budget.

Implement your cloud scaling strategy: We can help you implement your chosen scaling strategy and provide ongoing support to ensure that it meets your needs.

Optimize your cloud costs: We can help you identify and implement cost-saving measures to reduce your cloud bill.

Gart has a team of experienced cloud experts who can help you with all aspects of cloud scalability. We have a proven track record of helping businesses optimize their cloud costs and improve their cloud performance.

Contact Gart today to learn more about how we can help you with cloud scalability.

We look forward to hearing from you!